Pervasive Computing Research

The Mobile and Pervasive Computing Lab is focused on systems research and experimental aspects of pervasive computing, emphasizing four main research thrusts: fundamentals, applications, enablers, and smart space deployments.

Fundamentals ❯ Sentience Abstractions in Pervasive Spaces

Our Programming Models for Pervasive Spaces Project shows how, once a device is integrated into a smart space, programmers can connect to and control the device as a software service in their programs (the Service-Oriented Device Architecture (SODA) programming model). However, programming smart spaces does not stop at accessing individual devices or dealing with raw sensor values. Applications may involve high-level contexts, such as important events and conditions, a specific human activity, or a phenomenon. To fill this gap, we investigate several extensions to the original SODA model, each enabling developers to program smart spaces at a different level of abstraction. At the Service Level, programmers deal directly with device services, which translate primitive device signals into raw sensor data. At the Event Level, sensor data are assembled into events, which stimulate the system to react under specific conditions. At the Activity Level, raw sensor data, combined over time with other context data through machine learning and activity modeling techniques, can be used to detect specific human activities. We refer to the programming models defined for such sentience abstractions as power programming models, as they empower programmers to develop complex pervasive applications easily. We discuss several sentience abstractions, their implementations, and their programming models further in the active projects listed below.

Active Projects:

- Event-driven Programming in Smart Spaces

- Phenomena-driven Programming in Smart Spaces

- Streams-oriented Programming in Smart Spaces

- Activity-driven Programming in Smart Spaces

Event-Driven Programming in Smart Spaces

Events are a well-known abstraction in distributed systems and make for one of the most powerful programming models, despite their well-known anomalies and side effects. Under the event model, an application is programmed as a set of Event/Condition/Action rules. Event and Condition evaluations assume a run-time system enabled by interrupt-driven mechanisms. In smart spaces, such run-time support does not exist, and the concept of an interrupt is not available. Hence, enabling the event model in smart spaces is very challenging.

Initially motivated by the need to overcome the limitations of the SODA model, we have developed an event-based extension of SODA that we call E-SODA. In this model, application logic is represented by a set of rules, each of which follows a typical Event/Condition/Action (ECA) structure. Basic events correspond to SODA services representing sensors and other devices, whereas complex events are service compositions over basic and complex events. By constantly checking sensor readings and updating rule evaluations, the pervasive system listens for certain events in the space and responds to them by taking specific prescribed actions. Compared to pure SODA, this extension provides a streamlined and constrained way of formulating program logic that is more stringent and less error-prone, which makes E-SODA a safer programming model than SODA. In addition, event composition creates a tighter programming space than unrestricted service composition, reducing the possibility of false, unintentionally erroneous, or impermissible executions in the pervasive space. Furthermore, the rule-based nature of this extension encourages a centralized reasoning engine in which conflicting applications can be easily detected and resolved.
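The rule structure described above can be illustrated with a minimal Python sketch. It is not the E-SODA implementation: the `Rule` class, the `basic_event`, `all_of`, and `any_of` helpers, the sensor names, and the thresholds are all hypothetical, chosen only to show basic events over sensor readings, complex events composed from them, and one evaluation pass over a set of ECA rules.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical snapshot format: sensor name -> latest reading.
Readings = Dict[str, float]
Event = Callable[[Readings], bool]


@dataclass
class Rule:
    """One Event/Condition/Action rule (illustrative structure only)."""
    event: Event
    condition: Callable[[Readings], bool]
    action: Callable[[], None]


def basic_event(sensor: str, low: float, high: float) -> Event:
    """Basic event: the named sensor reports a value inside [low, high]."""
    return lambda r: sensor in r and low <= r[sensor] <= high


def all_of(*events: Event) -> Event:
    """Complex event that holds when every component event holds."""
    return lambda r: all(e(r) for e in events)


def any_of(*events: Event) -> Event:
    """Complex event that holds when at least one component event holds."""
    return lambda r: any(e(r) for e in events)


def evaluate(rules: List[Rule], readings: Readings) -> int:
    """Run one evaluation pass; return how many rules fired."""
    fired = 0
    for rule in rules:
        if rule.event(readings) and rule.condition(readings):
            rule.action()
            fired += 1
    return fired


# Assumed example: turn on cooling when the room is hot and occupied.
log: List[str] = []
hot = basic_event("temperature", 30.0, 60.0)
occupied = basic_event("floor_pressure", 20.0, 500.0)
rules = [
    Rule(
        event=all_of(hot, occupied),
        condition=lambda r: r.get("ac_on", 0.0) == 0.0,
        action=lambda: log.append("turn_on_ac"),
    )
]

fired = evaluate(
    rules, {"temperature": 32.5, "floor_pressure": 64.0, "ac_on": 0.0}
)
```

Because complex events are built only from other events, the set of things a rule can react to stays constrained, which is the property the text credits for making the event model less error-prone than unrestricted service composition.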

For the E-SODA model to work effectively in pervasive spaces, its event processing must be optimized to take into account the limited energy available to the sensor nodes and the sensor network. If the model is applied without optimizations, the continuous evaluation of events and rules imposes a hefty computational penalty on the centralized data sinks. Additionally, continuous data sampling by the sensor nodes and transmission through the network incur a substantial energy drain on the entire sensor network. It is therefore impractical to implement an event-driven model such as E-SODA over sensors and smart spaces without a framework of relaxation that provides meaningful opportunities to minimize both energy and computational cost. We have developed the Atlas Reactivity Engine (ARE), an implementation of the E-SODA event-driven programming model within the Atlas architecture. ARE utilizes a Time-Frequency Modifier (TFM) operator, which allows a per-event relaxation of rule evaluation.
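The idea of relaxing evaluation on a per-event basis can be sketched as follows. This page does not define the TFM operator's parameters or semantics, so the sketch assumes one simple reading: an event is re-sampled at most once per `period`, and the cached result is reused in between. The `RelaxedEvent` class and its fields are hypothetical and do not reproduce the ARE operator.

```python
from typing import Callable, List, Optional


class RelaxedEvent:
    """Event whose sensor is sampled at most once per `period` time units.

    Assumed semantics for illustration only; between samples the last
    observed value is returned without touching the sensor.
    """

    def __init__(self, sample: Callable[[], bool], period: float) -> None:
        self.sample = sample
        self.period = period
        self.samples_taken = 0
        self._last_time: Optional[float] = None
        self._last_value = False

    def evaluate(self, now: float) -> bool:
        due = self._last_time is None or now - self._last_time >= self.period
        if due:
            # Only here does the (energy-expensive) sensor read happen.
            self._last_value = self.sample()
            self._last_time = now
            self.samples_taken += 1
        return self._last_value


# Ten rule evaluations, one per second, with a 5-second relaxation period.
door_open = RelaxedEvent(sample=lambda: True, period=5.0)
results: List[bool] = [door_open.evaluate(now=float(t)) for t in range(10)]
```

In this run the rule is evaluated ten times but the sensor is read only twice (at t = 0 and t = 5), which is the kind of saving in sampling and transmission that the relaxation framework is meant to expose.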

The ARE engine with optimizers is available as open source from the Lab download web site (the Atlas Sensor Integration Platform Project).

Phenomena-Driven Programming in Smart Spaces

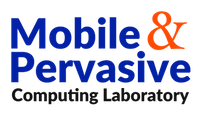

Phenomena clouds are another sentience abstraction that can be very helpful in programming smart spaces. They are characterized by nondeterministic, dynamic variations over time in their shape, size, and direction of motion along multiple axes. In the past, the utility of phenomena detection and tracking has been limited to remote sensing applications such as tracking oil spills and gas clouds. However, through our experience with smart space deployments, we have discovered great utility for phenomena clouds as a reliable alternative to raw sensor data. For instance, in the smart floor of the Gator Tech Smart House, it is much more reliable for an application to tune in and attempt to recognize (read) a walking phenomenon than to read individual pressure sensor readings and then recognize a walking activity.

We developed a distributed sensor network algorithm that utilizes localized in-network processing to simultaneously detect and track multiple phenomena clouds in a smart space. The algorithm not only ensures low processing and networking overhead, but also minimizes the number of sensors that are actively involved in the detection and tracking processes at any given time.
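To make the notion of a phenomenon cloud concrete, the sketch below groups neighboring floor sensors that exceed a threshold into clouds. It is a centralized simplification for illustration, not the lab's distributed in-network algorithm: the grid layout, the `threshold` and `min_neighbors` parameters, and the rule that a sensor needs at least one active neighbor to count are all assumptions.

```python
from typing import Dict, List, Set, Tuple

Cell = Tuple[int, int]  # hypothetical (x, y) position of a floor sensor


def neighbors(cell: Cell) -> List[Cell]:
    """Four-connected neighbors on the sensor grid."""
    x, y = cell
    return [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]


def detect_clouds(
    readings: Dict[Cell, float], threshold: float, min_neighbors: int = 1
) -> List[Set[Cell]]:
    """Return groups of adjacent sensors that jointly observe a phenomenon."""
    active = {c for c, v in readings.items() if v >= threshold}
    # Local check: a sensor counts only if enough neighbors agree with it,
    # which filters out isolated (likely noisy) readings.
    confirmed = {
        c for c in active
        if sum(n in active for n in neighbors(c)) >= min_neighbors
    }
    clouds: List[Set[Cell]] = []
    unvisited = set(confirmed)
    while unvisited:
        seed = unvisited.pop()
        cloud = {seed}
        frontier = [seed]
        while frontier:  # flood fill over adjacent confirmed sensors
            current = frontier.pop()
            for n in neighbors(current):
                if n in unvisited:
                    unvisited.remove(n)
                    cloud.add(n)
                    frontier.append(n)
        clouds.append(cloud)
    return clouds


# Made-up pressure readings: two footprints and one isolated spike at (3, 3).
floor = {
    (0, 0): 50.0, (0, 1): 55.0, (1, 1): 10.0,
    (3, 3): 60.0,
    (4, 0): 70.0, (4, 1): 65.0,
}
clouds = detect_clouds(floor, threshold=40.0)
```

An application then reads the clouds (here, two groups of two sensors each) rather than six individual pressure values, and the isolated spike never reaches it.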

Streams-Oriented Programming in Smart Spaces

Data streams are powerful abstractions that are particularly useful for applications with querying needs. Such queries are considered “continuous” and return when a result set is obtained. Queries are defined over smart space sensors that are organized as a linear table. To process continuous queries, push and pull strategies are employed. Our approach here is based on a refined cost model and on a novel strategy that utilizes the sensor network itself as an active participant in the query evaluation.

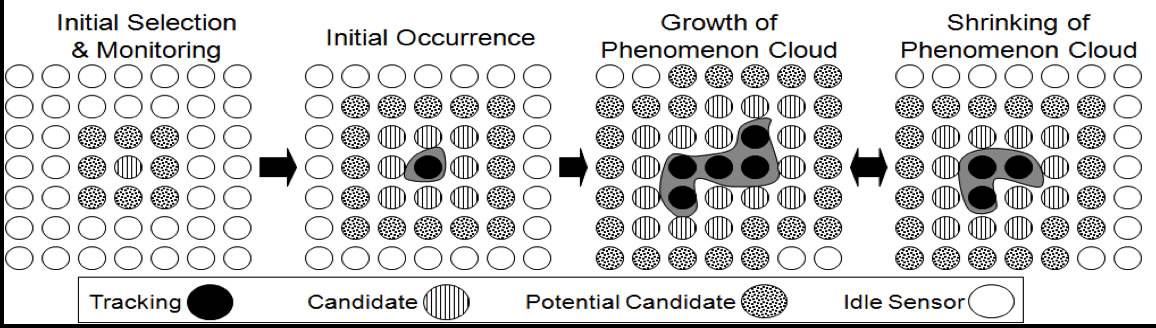

We have conducted several investigations to understand the energy cost of processing data from networked sensors in a smart space. We compared the cost of communication with that of sensor sampling on the Crossbow MICA2 and Atmel Zlink RCB platforms (see the accompanying figure). We found that communication is no longer the dominant cost and that sensor sampling is now the dominant cost in information processing over sensor networks. This experiment has led us to design continuous query algorithms based on a sound, basic cost model.

We developed an in-network query evaluation strategy that combines a push strategy with a modified (selective) pull approach, with the goal of minimizing the energy consumption of sensor nodes while ensuring that query response latency stays within user-specified bounds. Our algorithms not only choose and optimize plans tailored to the query requirements but also tune them to the characteristics of the specific hardware platform on which they run.
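A toy cost model shows how such a choice between push and pull might be made. Everything in the sketch is an assumption made for illustration: the `SensorProfile` fields, the per-operation energy figures, and the simple decision rule are invented and are not the lab's cost model or measurements. The only property taken from the text is that sampling is made more expensive than communication.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorProfile:
    """Hypothetical per-operation costs for one hardware platform."""
    sample_mj: float      # energy to take one sensor sample
    transmit_mj: float    # energy to send one reading
    receive_mj: float     # energy to receive one pull request
    round_trip_ms: float  # time for a pull request and its reply


def push_cost(p: SensorProfile, samples_per_min: float) -> float:
    """Energy per minute when the node samples and sends on its own schedule."""
    return samples_per_min * (p.sample_mj + p.transmit_mj)


def pull_cost(p: SensorProfile, queries_per_min: float) -> float:
    """Energy per minute when the node samples only when asked."""
    return queries_per_min * (p.receive_mj + p.sample_mj + p.transmit_mj)


def choose_strategy(
    p: SensorProfile,
    samples_per_min: float,
    queries_per_min: float,
    latency_bound_ms: float,
) -> str:
    """Pick the cheaper strategy that still meets the latency bound."""
    pull_meets_latency = p.round_trip_ms <= latency_bound_ms
    if pull_meets_latency and pull_cost(p, queries_per_min) < push_cost(
        p, samples_per_min
    ):
        return "pull"
    # Pushed data is assumed to be already at the sink, so it always
    # satisfies the latency bound in this simplified model.
    return "push"


# Invented numbers in which sampling costs more than communication.
node = SensorProfile(sample_mj=5.0, transmit_mj=1.0, receive_mj=1.0,
                     round_trip_ms=200.0)
relaxed = choose_strategy(node, samples_per_min=60, queries_per_min=6,
                          latency_bound_ms=500.0)
strict = choose_strategy(node, samples_per_min=60, queries_per_min=6,
                         latency_bound_ms=100.0)
```

With these numbers, pushing costs 360 mJ per minute and pulling costs 42 mJ per minute, so pull wins when a 500 ms bound allows the round trip, and push is chosen when the bound tightens to 100 ms.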

Activity-Driven Programming in Smart Spaces

Goal

The goal of activity recognition research is to develop highly practical activity recognition technology for real-world applications. Even though activity recognition technology can significantly empower many human-centric applications, there is a high entry barrier for this technology to be added to the tool belt of application developers. This is due to low accuracy, low scalability, and very low programmability. The accuracy limitation is due to the nature of human activity, which is complex and diverse. Concurrent or interleaved activities add further challenges. Our broad goal in this project is to search for alternative activity recognition approaches that can manage these challenges and achieve high accuracy, high scalability, and most certainly high programmability. Without significantly improved programmability, activity recognition will remain owned by researchers and will not benefit programmers and developers, which will limit the scope of its applicability and usefulness.

We now describe individual projects with specific goals.

Semantic-Based Generic Activity Model (SGAM)

Activity recognition performance is significantly dependent on the accuracy of the underlying activity model. However, many activity models and activity recognition algorithms, including probability-based or machine learning-based approaches, are not sufficient for practical applications because they do not adequately address complex, ambiguous, or variably-manifested human activities. Therefore, a new approach, which can capture and represent such complex characteristics of human activities more precisely, is needed.

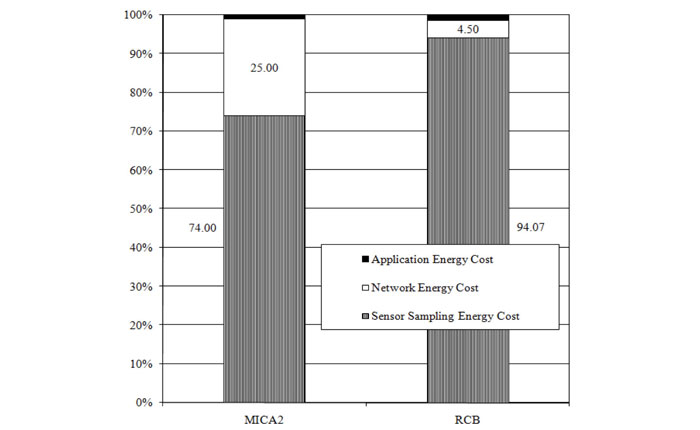

To achieve this goal, we developed a Semantic-Based Generic Activity Model (SGAM), which utilizes simple yet often ignored activity semantics. Activity semantics are highly evidential knowledge that can identify an activity more accurately in ambiguous or “noisy” situations. To exploit semantics, we first define eight primitive activity components: subject, time, location, motive, tool, motion, object, and context. Existing activity models utilize only four primitive components. Based on our eight primitive components, we adopt a generic activity framework with a refined hierarchical composition structure of five layers: sensor, operation, action, activity, and meta-activity. Our SGAM model contains detailed activity semantic knowledge, such as the specific role of an activity component and its constraints or relationships with other components. We classify semantics into four types: dominance semantics, mutuality semantics, order semantics, and activity effect semantics. A summary of our SGAM model semantics and example models of selected activities of daily living are shown in the accompanying figure.
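The vocabulary named above (eight components, five layers, four semantic types) can be written down as a small data structure. This is only a sketch of how such a model might be represented in code: the `ActivityModel` class, its fields, and the example activity are hypothetical, and the semantic annotations in the example are invented placeholders rather than SGAM's actual definitions.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Tuple


class Component(Enum):
    SUBJECT = "subject"
    TIME = "time"
    LOCATION = "location"
    MOTIVE = "motive"
    TOOL = "tool"
    MOTION = "motion"
    OBJECT = "object"
    CONTEXT = "context"


class Layer(Enum):
    SENSOR = 1
    OPERATION = 2
    ACTION = 3
    ACTIVITY = 4
    META_ACTIVITY = 5


class SemanticType(Enum):
    DOMINANCE = "dominance"
    MUTUALITY = "mutuality"
    ORDER = "order"
    ACTIVITY_EFFECT = "activity effect"


# A semantic annotation: its type and the components it relates.
Semantic = Tuple[SemanticType, Tuple[Component, ...]]


@dataclass
class ActivityModel:
    """Hypothetical container for one modeled activity."""
    name: str
    layer: Layer
    components: Dict[Component, str]
    semantics: List[Semantic] = field(default_factory=list)

    def missing_components(self) -> List[Component]:
        """Components the model does not yet describe, in declaration order."""
        return [c for c in Component if c not in self.components]


# Invented example; the annotation below is a placeholder, not SGAM content.
making_tea = ActivityModel(
    name="making tea",
    layer=Layer.ACTIVITY,
    components={
        Component.SUBJECT: "resident",
        Component.LOCATION: "kitchen",
        Component.TOOL: "kettle",
        Component.OBJECT: "tea",
    },
    semantics=[(SemanticType.ORDER, (Component.TOOL, Component.OBJECT))],
)
missing = making_tea.missing_components()
```

Listing the components explicitly makes it easy to see which of the eight an activity description leaves unspecified (here: time, motive, motion, and context).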

We evaluated the inherent accuracy of our Semantic-Based Generic Activity model utilizing measures of certainty and positively validated our approach.

Fuzzy Logic Activity Recognition Algorithm and System Based on SGAM

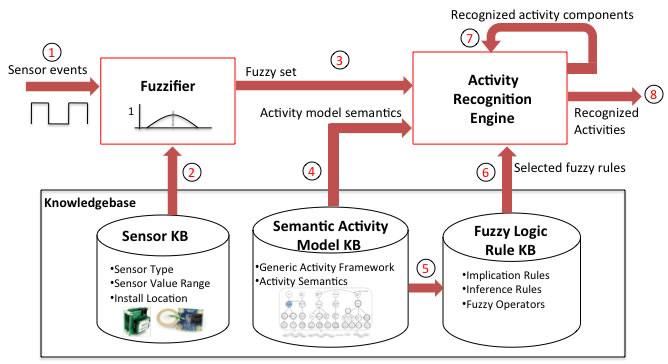

Our Semantic-Based Generic Activity Model (SGAM) has been shown to be capable of representing human activity more accurately than currently known models in the literature. The added semantic specificity increases accuracy by reducing false recognitions (false positives). To address the other side of accuracy, reducing false negatives, we must address the variability of human activity: the activity recognition algorithm should be able to tolerate a certain amount of uncertainty. To achieve this, we have developed a fuzzy logic system specially designed for activities modeled using SGAM with its embedded semantics. A fuzzy logic activity recognition algorithm promises significant advantages for accurate activity recognition. First, it can support a semantic activity model well because fuzzy logic has fuzzy operators that can be easily extended to check activity semantics. Second, a fuzzy logic-based algorithm is more tolerant of environmental uncertainty due to failures or sensor noise, because fuzzy logic-based systems can infer an output activity from uncertain sensor observations. Lastly, because fuzzy logic-based algorithms can represent ambiguity, they are better suited to handling the intrinsic uncertainty in a specific subject’s activity. We are currently developing a fuzzy logic-based activity recognition system based on SGAM that utilizes activity knowledge bases, as shown in the accompanying figure.
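A small example shows how fuzzy operators let an activity be inferred from uncertain observations. The sketch uses the standard min/max fuzzy AND/OR operators and a linear ramp membership function; it is not the lab's recognition system, and the sensor names, thresholds, and the two activity rules are invented for illustration.

```python
from typing import Dict, Tuple


def ramp(x: float, low: float, high: float) -> float:
    """Membership degree rising linearly from 0 at `low` to 1 at `high`."""
    if x <= low:
        return 0.0
    if x >= high:
        return 1.0
    return (x - low) / (high - low)


def fuzzy_and(*degrees: float) -> float:
    """Standard (Zadeh) fuzzy conjunction: the minimum degree."""
    return min(degrees)


def fuzzy_or(*degrees: float) -> float:
    """Standard (Zadeh) fuzzy disjunction: the maximum degree."""
    return max(degrees)


def recognize(obs: Dict[str, float]) -> Tuple[str, Dict[str, float]]:
    """Score each hypothetical activity and return the best match."""
    stove_hot = ramp(obs["stove_temp_c"], 40.0, 120.0)
    kettle_on = ramp(obs["kettle_power_w"], 100.0, 1000.0)
    in_kitchen = ramp(obs["kitchen_motion"], 0.0, 10.0)
    tv_on = ramp(obs["tv_power_w"], 10.0, 100.0)
    in_living_room = ramp(obs["living_room_motion"], 0.0, 10.0)

    scores = {
        # Cooking needs presence in the kitchen AND some heat source in use.
        "cooking": fuzzy_and(in_kitchen, fuzzy_or(stove_hot, kettle_on)),
        "watching_tv": fuzzy_and(tv_on, in_living_room),
    }
    best = max(scores, key=lambda name: scores[name])
    return best, scores


# Partial, noisy evidence: a warm stove and only moderate kitchen motion.
best, scores = recognize({
    "stove_temp_c": 100.0,
    "kettle_power_w": 100.0,
    "kitchen_motion": 5.0,
    "tv_power_w": 0.0,
    "living_room_motion": 2.0,
})
```

Instead of rejecting the observation because no crisp threshold is fully met, the recognizer assigns "cooking" a degree of 0.5 and "watching_tv" a degree of 0.0, which is how graded membership helps reduce false negatives under variability.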

Toward an Accurate Activity Recognition Performance Metric: Integrative Measure of Uncertainty from Multiple Sources

Uncertainty is a major problem for accurate activity recognition. Current activity recognition systems suffer from a convoluted accuracy problem due to the many sources of uncertainty that directly affect the system. We classified several sources of uncertainty, such as the complex and diverse nature of human activity, the limitations of state-of-the-art sensor technology, and the lack of activity representation. Accounting for all the sources requires complex analysis and challenging validation.

To simplify this problem and to find a tractable solution, we are currently working on finding the most influential sources of uncertainty in activity recognition systems through end-to-end uncertainty analysis. An activity recognition system is built in several steps, such as target domain selection, activity modeling, analysis of sensor function, and sensor use modality analysis. We are currently analyzing and modeling the potential uncertainties of each step, with the aim of ultimately being able to represent an integrative measure of all uncertainty whose complexity is acceptable and tractable.

People

Dr. Sumi Helal

Eunju Kim

Raja Bose

Chao Chen

Yi Xu

Publications

- E. Kim, A. Helal, C. Nugent and J. Lee, "Assurance-Oriented Activity Recognition," Proceedings of the International Workshop on Situation, Activity and Goal Awareness (Beijing, China). ACM, New York, NY, 2011. (pdf)

- E. Kim and S. Helal, "Modeling Human Activity Semantics for Improved Recognition Performance," Proceedings of the 8th International Conference on Ubiquitous Intelligence and Computing, Banff, Canada, 2011. (pdf)

- E. Kim and S. Helal, "Practical and Robust Activity Modeling and Recognition," Proceedings of the 8th International Conference on Wearable Micro and Nano Technologies for Personalized Health, Lyon, France, 2011. (pdf)

- E. Kim and S. Helal, "Revisiting Human Activity Frameworks," Proceedings of the 2nd International ICST Conference on Wireless Sensor Network Systems and Software, Miami, USA, 2010. (pdf)

- E. Kim, S. Helal, J. Lee, and S. Hossain, "Making an activity dataset," Proceedings of the 7th International Conference on Ubiquitous Intelligence and Computing, Xi'an, China, 2010. (pdf)

- E. Kim, S. Helal, D. Cook, "Human Activity Recognition and Pattern Discovery," IEEE Pervasive Computing, vol. 9, no. 1, pp. 48-52, 2010. (pdf)

- M. Thai, R. Bose, R. Tiwari, A. Helal, "Detection and Tracking of Phenomena Clouds," in revision, ACM Transactions on Sensor Networks.

- R. Bose and A. Helal, "Sensor-aware Adaptive Push-Pull Query Processing in Wireless Sensor Networks," Proceedings of the 6th International Conference on Intelligent Environments (IE2010), July 19-21, Kuala Lumpur, Malaysia.

- R. Bose and A. Helal, "Localized In-network Detection and Tracking of Phenomena Clouds using Wireless Sensor Networks," Proceedings of the International Conference on Intelligent Environments (IE), July 20-21, 2009, Barcelona, Spain.

- R. Bose, A. Helal, "Observing Walking Behavior of Humans using Distributed Phenomenon Detection and Tracking Mechanisms," Proceedings of the 2nd International Workshop on Practical Applications of Sensor Networks, held in conjunction with the International Symposium on Applications and the Internet (SAINT), Turku, Finland, July 2008. (pdf)

- R. Bose, A. Helal, "Distributed Mechanisms for Enabling Virtual Sensors in Service Oriented Intelligent Environments," Proceedings of the 4th IET International Conference on Intelligent Environments (IE '08), Seattle, July 2008. (pdf)

- R. Bose, A. Helal, V. Sivakumar and S. Lim, "Virtual Sensors for Service Oriented Intelligent Environments," Proceedings of the Third IASTED International Conference on Advances in Computer Science and Technology, Phuket, Thailand, April 2-4, 2007. (pdf)

- M. Ali, W. Aref, R. Bose, A. Elmagarmid, A. Helal, I. Kamel, M. Mokbel, "NILE-PDT: A Phenomenon Detection and Tracking Framework for Data Stream Management Systems," Proceedings of the Very Large Databases Conference (VLDB), Trondheim, Norway, August 2005. (pdf)