Pervasive Computing Research

The Mobile and Pervasive Computing Lab focuses on systems research and experimental aspects of pervasive computing, emphasizing four main research thrusts: fundamentals, applications, enablers, and smart space deployments.

Applications

In principle, pervasive computing could be exploited to achieve great societal, organizational, and individual benefits. The high-level goals of such applications mostly center on Quality of Life, Quality of Experience, Convenience, Return on Investment, and Assistance, among others. The Mobile and Pervasive Computing Laboratory is a pioneer in this field and one of the world’s leading research centers in applying pervasive computing in support of Aging, Disabilities, and Independence (ADI), addressing Quality of Life for the elderly and individuals with special needs. ADI research is highly multidisciplinary and requires diversified research talents that cannot be attained without research collaborations (including international). The Lab also addresses proactive health applications of pervasive computing with an emphasis on persuasive tele-health systems, which is another multidisciplinary research area.

Active Projects:

- Aging, Disability, and Independence (ADI)

- Persuasive and Cyber-Analytic Tele-Health

- Blind Navigation in Campus Environments

- Cognitive Assistance for Children with Mucopolysaccharidosis

Aging, Disability, and Independence (ADI)

Goal

The first cohort of “baby boomers” are now (in 2012) 65 years or older, presaging a massive wave of aging “boomers” that could degrade health care and elder care over the next quarter-century. Cost-effective, high impact technologies for aging, disabilities, and independent living are urgently needed. The main goal of this project is to apply pervasive computing to realize the concept of “assistive environments” for older adults. By turning homes, apartments, and assisted living facilities into assistive environments, safety, independence, and in general, quality of life can be enhanced. Another goal of this project is understanding the impediments limiting the commercial proliferation of the assistive environment concepts and services.

The Matilda Smart House and the Gator Tech Smart House (GTSH) are two assistive environments that we developed within this project as research and validation platforms, within which we explored and developed several ADI systems and applications.

We now describe a number of assistive environment projects that we developed and deployed at the GTSH.

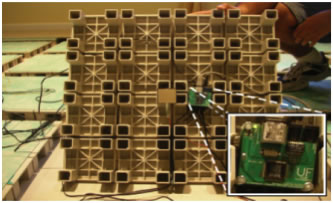

ADI Project: The Smart Floor

The primary location tracking system used in the house is the Smart Floor, which is built into a residential-grade, raised tile system. Each tile is approximately one square foot with a pressure sensor fitted underneath. Unlike other tracking methods, the Smart Floor requires no attention from the residents, no wearable devices, and no cameras that invade the residents’ privacy. To the user, the Smart Floor is very convenient because it is unencumbering. To the Smart House, the Smart Floor is very powerful because it provides rich sentience of location and activities, quietly and invisibly. The Smart Floor is exposed as a collection of location and activity services (an API) to other smart house applications. Applications that need location context invoke the location service. Some applications are based entirely on location, such as a daily activity counter, in which the total steps taken by the resident are counted and reported to the cloud and other subscribers (e.g., a caregiver or a relative). A phenomena cloud implementation of the location service is also available, which provides a higher level of reliability despite sensor failures and high levels of noise exhibited by the pressure sensors.

Tile blocks of the residential-grade raised floor fitted with pressure sensors (carpet blocks removed) are shown in the figure above.
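The daily activity counter mentioned above can be illustrated with a minimal sketch. It is not the Smart Floor's actual API: the `TileEvent` record, the `count_steps` function, and the noise `threshold` are hypothetical names and values chosen for illustration. The sketch assumes a step can be approximated as a change in the tile that registers pressure above a noise threshold.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TileEvent:
    """One pressure reading from a floor tile (hypothetical record format)."""
    timestamp: float  # seconds since the start of the day
    tile: tuple       # (row, col) position of the tile in the floor grid
    pressure: float   # normalized sensor reading, 0.0 to 1.0 (assumed scale)


def count_steps(events, threshold=0.5):
    """Count one step each time the pressed tile changes.

    Readings below `threshold` are treated as sensor noise and skipped.
    The first pressed tile also counts as a step.
    """
    steps = 0
    last_tile = None
    for event in sorted(events, key=lambda e: e.timestamp):
        if event.pressure < threshold:
            continue
        if event.tile != last_tile:
            steps += 1
            last_tile = event.tile
    return steps


events = [
    TileEvent(0.0, (0, 0), 0.9),
    TileEvent(0.4, (0, 0), 0.8),  # still standing on the same tile
    TileEvent(0.9, (0, 1), 0.1),  # below threshold, treated as noise
    TileEvent(1.0, (0, 1), 0.9),
    TileEvent(1.6, (1, 1), 0.7),
]
daily_steps = count_steps(events)
print(daily_steps)
```

A real deployment would have to cope with tiles much smaller than a stride, two feet pressing adjacent tiles at once, and failed sensors, which is where a more robust location service would come in.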

ADI Project: The SmartWave: Meal Preparation Assistant

The SmartWave consists of a microwave oven and other devices and services that provide assistance in meal preparation. An RFID reader mounted under a counter surface below the microwave allows appropriately tagged frozen meals to be recognized by the SmartWave. The resident is provided, via a monitor above the microwave, with the necessary instructions to ready the meal for cooking (remove film, stir ingredients, etc.). The SmartWave can handle multiple cooking cycles (e.g., thaw, low-power followed by high power) automatically. It sets power levels and cooking times automatically. Once the meal is ready, a notification is sent to the resident, wherever he or she is in the house. This technology assists a variety of residents, such as those with visual impairments who are unable to read the fine print on the frozen meals. It also assists residents with hand dexterity problems or with mild dementia.

A live-in trial resident using the SmartWave at the Gator Tech Smart House is shown in the figure above.
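The flow from tag recognition to automatic multi-cycle cooking can be sketched as a lookup from a tag ID to a meal program. This is only an illustration under stated assumptions: the tag IDs, the `MEAL_DB` table, the `plan_meal` function, and all power levels and times are hypothetical and not taken from the SmartWave itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CookingStage:
    power_percent: int  # microwave power level for this cycle
    seconds: int        # duration of this cycle


@dataclass(frozen=True)
class MealProgram:
    name: str
    prep_steps: tuple   # instructions shown on the monitor before cooking
    stages: tuple       # cooking cycles run back to back


# Hypothetical tag database: every ID and value here is made up.
MEAL_DB = {
    "TAG-0001": MealProgram(
        name="Frozen lasagna",
        prep_steps=("Remove film", "Place tray in microwave"),
        stages=(CookingStage(30, 120), CookingStage(100, 240)),
    ),
}


def plan_meal(tag_id):
    """Return (program, total cooking seconds), or None for an unknown tag."""
    program = MEAL_DB.get(tag_id)
    if program is None:
        return None
    total_seconds = sum(stage.seconds for stage in program.stages)
    return program, total_seconds


program, total_seconds = plan_meal("TAG-0001")
for step in program.prep_steps:
    print("Instruction:", step)
for stage in program.stages:
    print(f"Cook at {stage.power_percent}% for {stage.seconds} s")
print("Notify resident after", total_seconds, "s")
```

Keeping the program as data rather than code means a new meal only needs a new table entry, which fits the idea of recognizing "appropriately tagged" meals.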

ADI Project: Cognitive Assistant

The main goal of the Cognitive Assistant (CA) project is to assist older adults with mild dementia in overcoming difficulties in carrying out basic daily activities by means of reminders, orientation, and context-sensitive triggering. Indoors, the cognitive assistant provides (1) attention capture and (2) anywhere multimedia cueing capabilities. The assistant itself is a general service decoupled from the specific contents and events of interest. It can be utilized by any number of applications in the Smart House requiring any type of reminders, training, or cueing. The CA has been used proactively as a reminder for critical tasks (to take medications, to eat at meal times, to go see the doctor, to call a son on his birthday, or to feed the pet). It has also been used as a training tool to perform step-by-step tasks (specifically in retraining on hygiene tasks). Another purpose for training is behavior alteration, which we demonstrated in preparing a meal using the SmartWave and ensuring hydration. The CA has also been used as a monitoring tool to record the activities performed by the elderly.

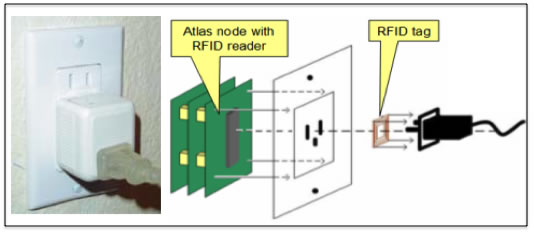

ADI Project: Smart Plug

An intelligent environment should be able to sense and recognize the devices and services it has available, interpret their status, and, if needed, interact with these devices to influence them. For example, in the Gator Tech Smart House, self-sensing is a service that provides a real-time model that reflects the status of all the appliances in the space. The Smart Plug is a prerequisite service that enables self-sensing. The Smart Plug is a standard power outlet invisibly fitted with a low-cost RFID reader housed inside the wall behind the plug hardware. Each electrical device, such as a lamp or a fan, is given an RFID tag that contains information about the device. Currently, the RFID tags are embedded in pass-through plugs (the white cube in the figure). Once an appliance is plugged in (through the pass-through cube), its RFID tag is read and its location identified. The house gets to know this dynamic information instantaneously. By attaching a sensor node to the plug, actuation becomes possible. Actuations are limited to on/off switching of the appliance.

An actual smart plug in the GTSH (left) and a diagram describing the concept (right) are shown in the figure above.
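The self-sensing model that the Smart Plug feeds can be pictured as a registry keyed by outlet, updated whenever a tag is read. The sketch below is an assumption-laden illustration, not the Gator Tech Smart House implementation: the `SelfSensingModel` class, its method names, the outlet ID, and the tag fields are all hypothetical.

```python
class SelfSensingModel:
    """Tracks which appliance is plugged into which outlet (illustrative only)."""

    def __init__(self):
        self._devices = {}  # outlet ID -> device description

    def plugged_in(self, outlet_id, tag_data):
        """Record a device when the outlet's RFID reader sees its tag."""
        self._devices[outlet_id] = {**tag_data, "on": False}

    def unplugged(self, outlet_id):
        """Forget the device once its tag is no longer read."""
        self._devices.pop(outlet_id, None)

    def switch(self, outlet_id, on):
        """On/off actuation, the only actuation the text describes."""
        if outlet_id not in self._devices:
            raise KeyError(f"nothing plugged into {outlet_id}")
        self._devices[outlet_id]["on"] = bool(on)

    def snapshot(self):
        """Return a copy of the current model of the space."""
        return {outlet: dict(info) for outlet, info in self._devices.items()}


model = SelfSensingModel()
# Hypothetical outlet ID and tag contents.
model.plugged_in("living-room-outlet-2", {"type": "lamp", "watts": 60})
model.switch("living-room-outlet-2", True)
print(model.snapshot())
```

Because the outlet's position is fixed and known, keying the registry by outlet is what lets a tag read double as a location fix for the appliance.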

ADI Project: PerVision

Similar to the Smart Plug, PerVision is another project that aims at empowering self-sensing. While the Smart Plug covers the sentience of appliances and anything that plugs into a power socket in the house, PerVision targets dumb objects that have no power or any other connectivity (e.g., chairs, tables, couches, and beds). PerVision is a set of pervasive-assisted computer vision algorithms for the identification of objects based on computer vision and RFID technology. For instance, a real-world model of a living room can be created instantaneously in terms of furniture objects and their features and locations as learned by a running PerVision algorithm. If a piece of furniture is moved, the model is updated responsively and online.

In the figure above, the left side (top and bottom) shows a table and a love seat tagged with RFID tags. The right side shows the PerVision software: the actual live scene (top left of screen), the vision algorithm stages with pruning aided by the features obtained from the RFID tags, and finally the real-world model (top right of screen).

ADI Project: SensoBot

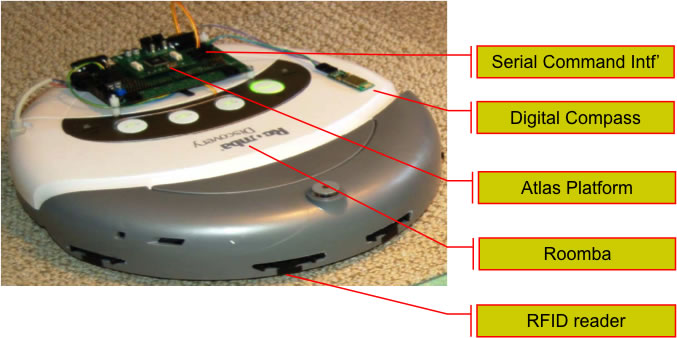

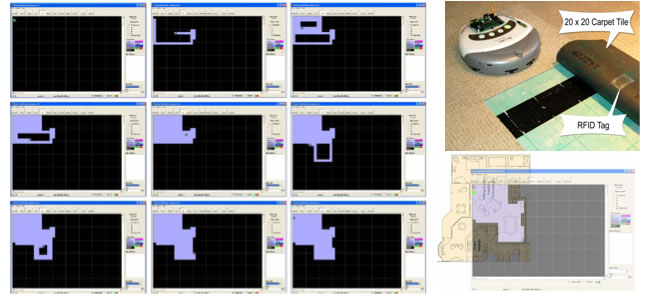

SensoBot is a novel approach to, and a system for, mapping and sensing the footprint of smart spaces. It uses a mobile platform equipped with a range of sensors and actuators. SensoBot is currently based on a Roomba autonomous vacuum cleaner robot (with the vacuum system removed). It uses on-board RFID readers to locate RFID tags embedded in the carpet tiles of the GTSH’s flooring system, which is laid out as a grid. While driving around, SensoBot writes the corresponding coordinates to the tags, which can be used later to locate specific objects. In addition to mapping, SensoBot is used to identify and locate important landmarks and objects such as doors, windows, and furniture. The goal of this project is to achieve complete self-sensing of a space footprint automatically and in an energy-efficient manner.

The SensoBot platform and onboard sensors and sensor nodes are shown above.

SensoBot’s incremental development of the floor plan of an area in the Gator Tech Smart House is shown in the figure above.
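The idea of writing coordinates into floor tags while driving can be sketched as a small simulation. It assumes the robot knows its own grid position at each step (for example from odometry, which the text does not specify); the `map_tiles` function and the dictionary-based tag store are hypothetical stand-ins for the real RFID hardware.

```python
def map_tiles(tag_store, route):
    """Write grid coordinates into every blank tag the robot drives over.

    tag_store: dict mapping a tile position (row, col) to the tag's stored
               payload, or None while the tag is still blank.
    route:     sequence of (row, col) positions visited by the robot.
    Returns the number of tags written during this run.
    """
    written = 0
    for row, col in route:
        if (row, col) in tag_store and tag_store[(row, col)] is None:
            tag_store[(row, col)] = {"row": row, "col": col}
            written += 1
    return written


# A 2 x 3 grid of blank tags and a route that revisits one tile.
tags = {(r, c): None for r in range(2) for c in range(3)}
route = [(0, 0), (0, 1), (0, 2), (1, 2), (0, 2)]
written = map_tiles(tags, route)
blank = sum(payload is None for payload in tags.values())
print(written, "tags written,", blank, "still blank")
```

Tags left blank after a run mark the parts of the floor plan that still need coverage, which matches the incremental development of the map described above.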

People

Dr. Sumi Helal

Choonhwa Lee

Youssef Kaddourah

Hicham Zabadani

Jeff King

James Russo

Andi Sukuju

Ahmed Al Kouche

Raja Bose

Chao Chen

Eunju Kim

Jae Woong Lee

Jung Wook Park

Publications

- A. Helal, M. Mokhtari, and B. Abdulrazak, “The Engineering Handbook on Smart Technology for Aging, Disability and Independence,” John Wiley & Sons, ISBN 978-0-471-71155-1, Computer Engineering Series, 2009.

- J. Park, C. Chen, H. El-Zabadani, and A. Helal, “SmartPlug: Creating Self-Sensing Spaces Using the Atlas Middleware,” a demonstration and a short paper in the supplementary Proceedings of the 11th ACM International Conference on Ubiquitous Computing (Ubicomp), Orlando, Florida, 2009. (pdf)

- R. Davenport, H. El-Zabadani, J. Johnson, A. Helal, and W. Mann, “Pilot Live-In Trial at the Gator Tech Smart House,” Topics in Geriatric Rehabilitation, vol. 23, no. 1, pp. 73-84, 2007.

- H. El-Zabadani, A. Helal, and H. Yang, “A Mobile Sensor Platform Approach to Sensing and Mapping Pervasive Spaces and Their Contents,” in Proceedings of the International Conference on New Technologies of Distributed Systems, Marrakesh, Morocco, June 4-8, 2007.

- W. Mann and S. Helal, “Technology and Chronic Conditions in Later Years: Reasons for New Hope,” in Hans-Werner Wahl, Clemens Tesch-Romer, and Andreas Hoff, editors, “New Dynamics in Old Age—Individual, Environmental and Societal Perspectives,” Baywood Publishing Company, Inc., ISBN 0-89503-322-4, 2007.

- H. El-Zabadani, A. Helal, W. Mann, and M. Schmaltz, “PerVision: An Integrated Pervasive Computing/Computer Vision Approach to Tracking Objects in a Self-Sensing Space,” in Proceedings of the 4th IEEE International Conference on Pervasive Computing and Communications (PerCom), Pisa, Italy, 2006. (pdf)

- W. Mann and A. Helal, editors, “Promoting Independence for Older Persons with Disabilities,” Assistive Technology Research Series, IOS Press, ISBN 1-58603-587-8, 2006.

- A. Helal, W. Mann, and C. Lee, “Assistive Environments for Individuals with Special Needs,” in D. Cook and S. Das, editors, Smart Environments, John Wiley and Sons, Inc., pp. 361-383, ISBN 0-471-54448-5, 2005.

- A. Helal, W. Mann, H. El-Zabadani, J. King, Y. Kaddourah, and E. Jansen, “Gator Tech Smart House: A Programmable Pervasive Space,” IEEE Computer, pp. 64-74, March 2005.

- H. El-Zabadani, A. Helal, B. Abdulrazak, and E. Jansen, “Self-Sensing Spaces: Smart Plugs for Smart Environments,” in Proceedings of the Third International Conference on Smart Homes and Health Telematics (ICOST), Sherbrooke, Québec, Canada, July 2005. (pdf)

- J. Russo, A. Sukojo, S. Helal, R. Davenport, and W. Mann, “SmartWave Intelligent Meal Preparation System to Help Older People Live Independently,” in Proceedings of the Second International Conference on Smart Homes and Health Telematics (ICOST2004), Singapore, September 2004.

- A. Helal, W. Mann, C. Giraldo, Y. Kaddoura, C. Lee, and H. El-Zabadani, “Smart Phone Based Cognitive Assistant,” in Proceedings of the 2nd International Workshop on Ubiquitous Computing for Pervasive Healthcare Applications, Seattle, WA, October 12, 2003.

- A. Helal, C. Lee, C. Giraldo, Y. Kaddoura, H. El-Zabadani, R. Davenport, and W. Mann, “Assistive Environments for Successful Aging,” in Proceedings of the 1st International Conference on Smart Homes and Health Telematics (ICOST), Paris, France, pp. 104-112, September 2003.

- C. Giraldo, S. Helal, and W. Mann, “mPCA—A Mobile Patient Care-Giving Assistant for Alzheimer Patients,” in First International Workshop on Ubiquitous Computing for Cognitive Aids (UbiCog’02), in conjunction with The Fourth International Conference on Ubiquitous Computing (UbiComp’02), Göteborg, Sweden, October 2002.

Persuasive and Cyber-Analytic Tele-Health

Goal

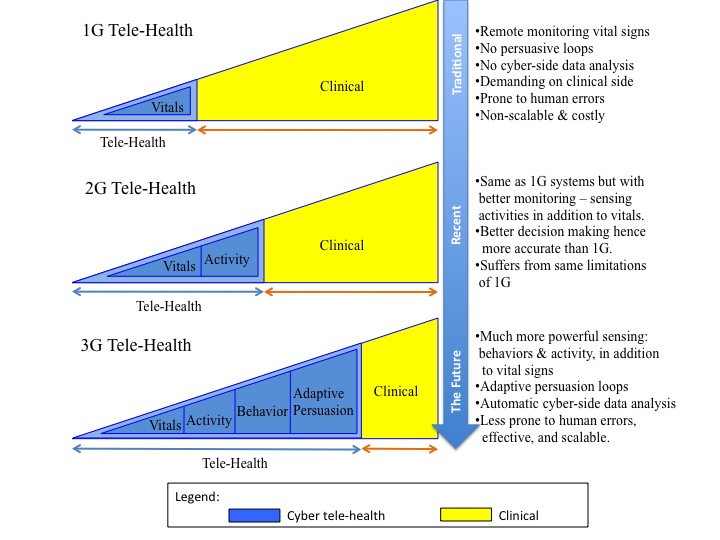

Researchers in the social and life sciences, as well as medical researchers and practitioners, have long sought the ability to continuously and automatically monitor research subjects or patients for a variety of conditions or disorders. Additionally, the use of monitoring data to influence treatment dosage or regimen within real-time constraints is an important objective of behavior modification practice for psychological and medical therapies. The Mobile and Pervasive Computing Lab has formed a multidisciplinary team to research tele-health systems in the domain of obesity and diabetes. We have analyzed the traditional model for tele-health and developed key advances to the model that we believe will invigorate tele-health as an effective delivery mode for health care. Our work has so far addressed the need to extend tele-health systems with two powerful capabilities: (1) behavior recognition, which provides insight over and above vital signs and activity sensing, and (2) persuasion and adaptive persuasion loops, which provide (human) actuation. We believe that both additions (insight and actuation) will have a significant impact on the effectiveness and efficacy of tele-health systems. We are currently working on behavior recognition, Action Behavior Models for persuasion, and participatory tele-health. We are also planning a major validation and quantification of our hypotheses.

We explain the differences between the traditional, recent, and future tele-health systems using the figure below. These differences clearly position our work.

We now describe individual projects.

A Smart Home Platform for Empowering Obese and Diabetic Individuals

Over the past two decades, the prevalence of overweight and obesity has increased at an alarming rate throughout the U.S. Data from the Centers for Disease Control and Prevention (2004) show that 60% of all adults in Florida are overweight or obese. Excess body weight contributes to numerous harmful consequences, including diabetes, heart disease, high blood pressure, stroke, arthritis, and certain cancers. Sedentary lifestyle and high caloric intake are important contributors to the obesity epidemic and to the development of many chronic diseases. It is well known that diet and exercise can significantly reduce the prevalence of obesity. However, lifestyle and economic factors make it difficult for individuals to self-assess and self-modify their behavior. For diabetes in particular, studies in the United States and abroad have found that improved blood glucose control markedly benefits patients. Every percentage point decrease in A1C blood test results reduces the risk of eye, kidney, and central nervous system complications by 40 percent.

In response to these significant challenges in life sciences research and health care, a multidisciplinary research team led by Dr. Helal and consisting of members from Computer Science and Engineering, the College of Medicine, and the School of Public Health and Health Professions is developing technology, science, and validation techniques to create monitoring and analysis platforms consisting of economically deployable connectivity technology and mobile and personal wearable devices. The developed system will facilitate the automatic gathering of rich vitals information in a manner transparent to the patient, which is then humanly analyzed and reported to caregivers for analysis, and interpreted for behavior modification.

Participatory and Persuasive Tele-Health

Technological advances in tele-health systems are primarily focused on sensing and monitoring. However, these systems are limited in that they only rely on sensors and medical devices to obtain vital signs. New R&D is urgently needed to offer more effective and meaningful interactions between patients, medical professionals, and other individuals around the patients. Social networking with Web 2.0 technologies and methods can meet these demands, and help to develop a more complete view of the patient. Also, many people, including the elderly, may be resistant to change, which can reduce the efficacy of tele-health systems. Persuasion technology and mechanisms are urgently needed to counter this resistance and promote healthy lifestyles. The Mobile and Pervasive Computing Lab has been developing architectures, models, and prototypes for participatory and persuasive tele-health systems as a solution for these two limitations. By integrating connected health solutions with social networking, and adding persuasive influence, we hope to increase the chances for effective interventions and behavior alterations.

People

Dr. Sumi Helal

Dr. Steve Anton, PHHP

Dr. Mark Atkinson, MD

Duckki Lee

Chao Chen

Raja Bose

Jung Wook Park

Eunju Kim

Publications

D. Lee, A. Helal, S. Anton, S. De Deugd, and A. Smith, “Participatory and Persuasive Tele-Health,” The International Journal of Experimental, Clinical, Behavioral, Regenerative and Technological Gerontology, DOI: 10.1159/000329892, Karger AG, Basel, Switzerland, August 2011.

D. Lee and A. Helal, “An Action-Based Behavior Model for Persuasive Telehealth,” in Proceedings of the 8th International Conference on Smart Homes and Health Telematics (ICOST), Seoul, S. Korea, June 22-24, 2010.

A. Helal, D. Cook, and M. Schmalz, “Smart Home-Based Health Platform for Behavioral Monitoring and Alteration of Diabetes Patients,” Journal of Diabetes Science and Technology, Volume 3, Number 1, January 2009.

M. Weitzel, A. Smith, D. Lee, S. De Deugd, and A. Helal, “Participatory Medicine: Leveraging Social Networks in Telehealth Solutions,” in Proceedings of the 7th International Conference on Smart Homes and Health Telematics (ICOST), Tours, France, July 1-3, 2009.

H. Lee, J. W. Park, and A. Helal, “Estimation of Indoor Physical Activity Level Based on Footstep Vibration Signal Measured by MEMS Accelerometer for Personal Health Care Under Smart Home Environments,” in Proceedings of the Second International Workshop on Mobile Entity Localization and Tracking in GPS-less Environments (MELT), held in conjunction with Ubicomp 2009, Orlando, Florida.

S. Cadavid, M. Abdel-Mottaleb, and A. Helal, “Exploiting Visual Quasi-Periodicity for Real-Time Chewing Event Detection Using Active Appearance Models and Support Vector Machines,” accepted for publication June 2011, Personal and Ubiquitous Computing Journal, Springer Publishing.

M. Schmalz, A. Mendez-Vazquez, and A. Helal, “Algorithms for the Detection of Chewing Behavior in Dietary Monitoring Applications,” in Proceedings of SPIE Technical Symposium: Mathematics of Data/Image Coding, Compression, and Encryption with Applications XII, Volume 7444A, August 2009.

Blind Navigation in Campus Environments

Goal

The Mobile and Pervasive Computing Lab has tried several ideas and approaches to blind navigation in campus environments. We had to balance the trade-off between accuracy and user encumbrance. We also had to consider several practical issues concerning wireless network availability and reliability and the ease and cost of deployment. Finally, we looked at extreme contexts that require special systems (e.g., highly populated spaces, such as convention centers). The Lab has contributed three systems: (1) Drishti, a high-precision outdoor navigation system that requires a network connection at all times, (2) Indoor Drishti, which augments DGPS with ultrasonic localization, and (3) an alternative system that uses an RFID information grid instead of a centralized server. We have also developed an initial design for a social navigator, DrishTag, that helps a blind user in a convention setting identify the crowd around him or her and identify and approach attendees selectively, as desired. The DrishTag design is challenging, and the project has not yet concluded or been tested in a real deployment. We intend to do so in the future.

We now describe individual projects.

Drishti: A Pedestrian Navigation System for the Blind in Dynamic Environments

When visually impaired people walk around campus and downtown areas, they do so lacking critical information that can affect their safety and travel experience. With limited awareness of their surroundings, blind people often find it difficult to perform the simplest daily chores or to even take a walk on a sunny day. The DRISHTI project at the Mobile and Pervasive Computing Lab aims to integrate advanced technologies and build a system and appropriate interfaces to navigate a visually impaired person from one room on campus to another.

A Geographical Information System (GIS) and Spatial Databases are two technologies used to capture information about buildings, rooms, sidewalks, stairs, construction areas, bike racks, water fountains, rest rooms, and doors. A Differential Global Positioning System, or DGPS, is another technology that is used by DRISHTI to provide the “constant” and precise location of the visually impaired person. Voice technology, including Speech Synthesis and Voice Recognition, is also used so the system and the user can communicate hands-free back and forth.

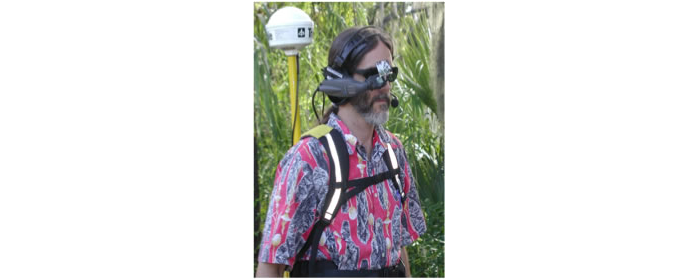

DRISHTI uses a wirelessly connected wearable computer to integrate the blind user to the network and to correlate GPS location with relevant spatial information. When worn by the blind navigator, DRISHTI connects to the network in preparation for answering queries such as “Take me to XYZ building”. In guiding the blind user, DRISHTI generates precision prompts to keep the user on the sidewalks of the shortest path to the destination.
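Keeping the user on the sidewalks of the shortest path amounts to a shortest-path search over a graph whose edges are sidewalk segments. The sketch below uses Dijkstra's algorithm as a stand-in; the text does not say which routing method DRISHTI uses, and the landmark names and distances are invented for illustration.

```python
import heapq


def shortest_path(graph, start, goal):
    """Dijkstra's algorithm over a sidewalk graph.

    graph: dict mapping a landmark to {neighbor: sidewalk length in meters}.
    Returns (total distance, list of landmarks), or None if unreachable.
    """
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        dist, node, path = heapq.heappop(queue)
        if node == goal:
            return dist, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, length in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (dist + length, neighbor, path + [neighbor]))
    return None


# Hypothetical campus fragment: only sidewalk segments appear as edges.
sidewalks = {
    "Library": {"Fountain": 40, "BikeRack": 90},
    "Fountain": {"Library": 40, "Hall": 70},
    "BikeRack": {"Library": 90, "Hall": 10},
    "Hall": {"Fountain": 70, "BikeRack": 10},
}
route = shortest_path(sidewalks, "Library", "Hall")
print(route)
```

In a dynamic environment, a segment blocked by a construction area could simply be dropped from the graph before the search, so the same routine would route around it.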

DRISHTI has been covered by several news outlets, most notably in an article in the Technology Circle Section of the New York Times that appeared on October 17, 2002.

We have developed an extension of DRISHTI to work inside buildings and visited spaces, such as hotel rooms, by utilizing ultrasonic location positioning technology. The objective was to provide the blind user seamless and continuous operation indoors and outdoors (see figure below).

RFID InfoGrid for Blind Navigation and Wayfinding in Campus Environments

An alternative design to DRISHTI is to avoid reliance on a centralized GIS database and navigation service. This is motivated by the concern of how the blind user could possibly navigate if the network is disconnected, or if there is no network coverage at all. A navigation and location determination system for the blind is designed based on an RFID tag grid. Each RFID tag is programmed upon installation with spatial coordinates and information describing the surroundings. This allows for a self-describing, localized information system with no dependency on a centralized database or wireless infrastructure for communications. The system could be integrated into building code requirements as part of the Americans with Disabilities Act (ADA) at a cost of less than $1 per square foot. With an established RFID grid infrastructure, blind students will gain the freedom to explore and participate in activities without external assistance. An established RFID grid infrastructure will also enable advances in robotics, which will benefit from knowing the precise location of the user. We have developed an RFID-based information grid system with an RFID reader integrated into the user’s shoe and walking cane, with a Bluetooth connection to the user’s cell phone (see figure below). To assist in navigation, the system provides user feedback and communication via a NAVCOM belt worn around the user’s waist, which features a sonic range finder and a series of pager motors for distance feedback and a form of vibrational Braille. An emphasis is placed on the architecture and design, allowing for a truly integrated pervasive environment with minimal visual indicators of the system to the outside observer.
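Because each tag carries its own coordinates and a description of the surroundings, guidance can be computed locally from the last tag read, with no server involved. The following is a minimal sketch under assumptions: the tag payload layout, the `guidance` function, and the convention that bearing 0 points along the positive y axis are all hypothetical and not taken from the InfoGrid design.

```python
import math


def guidance(current_tag, destination_xy):
    """Compute distance and bearing from the last-read tag to a destination.

    current_tag:    dict with an "xy" pair (grid coordinates, assumed in feet)
                    and a free-text "info" field, as stored on the tag.
    destination_xy: (x, y) of the destination in the same coordinate frame.
    Bearing is in degrees, 0 along +y and increasing clockwise (assumed).
    """
    x, y = current_tag["xy"]
    dx = destination_xy[0] - x
    dy = destination_xy[1] - y
    distance = math.hypot(dx, dy)
    bearing = math.degrees(math.atan2(dx, dy)) % 360
    return distance, bearing


# Hypothetical tag contents read by the shoe or cane reader.
tag = {"xy": (0.0, 0.0), "info": "Hallway junction, stairs to the left"}
distance, bearing = guidance(tag, (3.0, 4.0))
print(tag["info"])
print(f"{distance:.1f} ft at bearing {bearing:.0f} degrees")
```

Everything the function needs comes from the tag payload and the destination, which is the property that removes the dependency on a centralized database or wireless coverage.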

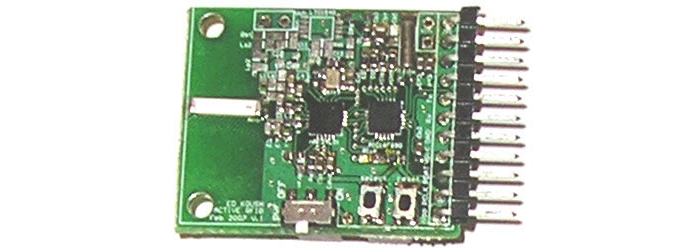

DrishTag—A Smart Tag for Blind-Accessible Conventions

While the InfoGrid is an alternative to DRISHTI, DrishTag is a complementary concept to both DRISHTI and InfoGrid. DrishTags address the blind user’s needs in an environment saturated with human noise. DrishTags are small, active RFID devices hidden behind standard plastic name tags (see figure below). DrishTags are worn by both sighted and blind attendees. Blind users have text-to-speech capability added to their tags. Tag antennas are being designed in a way that limits the scope and range of discovery of peer tags. This way, a blind attendee is able to discover people (and their names and other attributes) within a limited space, predefined cone length, and scope as he or she walks on a convention floor. The system is still under development, with many antenna design challenges remaining, and is considered incomplete at this point.

People

Dr. Sumi Helal

Steve Moore

Lisa Ran

Scooter Willis

Balaji Ramachandran

Ed Kouche

Heni Yang

Raja Bose

Publications

- S. Willis and A. Helal, "RFID Information Grid and Wearable Computing Solution to the Problem of Wayfinding for the Blind User in a Campus Environment," Proceedings of the Ninth Annual IEEE International Symposium on Wearable Computers, Osaka, Japan, October 2005. (pdf) More detailed version of the paper (pdf).

- L. Ran, A. Helal, and S. Moore, "Drishti: An Integrated Indoor/Outdoor Blind Navigation System and Service," Proceedings of the 2nd IEEE Pervasive Computing Conference, Orlando, Florida, March 2004. (pdf)

- A. Helal, S. Moore, and B. Ramachandran, "Drishti: An Integrated Navigation System for Visually Impaired and Disabled," Proceedings of the 5th International Symposium on Wearable Computers, Zurich, Switzerland, October 2001. (pdf)

- A. Kouche, H. Yang, R. Bose, and A. Helal, "A Smart Tag Design for Blind-Accessible Conventions," Internal Report, March 2007. (pdf)

Cognitive Assistance for Children with Mucopolysaccharidosis

Goal

Developmental disabilities, such as autism and Mucopolysaccharidosis (MPS), require minute-to-minute, burdensome care on the part of the parents. Often, one of the parents has to be fully dedicated, around the clock, to supporting and sustaining the child’s life. To enhance children’s cognitive ability in expressing some of their desires, we designed a simple DVD controller in a specific form factor suitable for MPS children. Our design was participatory, allowing one family with MPS children to provide guidelines. Our system, called TouchView, is intended to provide intuitive ways for MPS children to express their wishes and desires for watching a particular movie. The project is in an early stage of investigation.

People

Dr. Sumi Helal

Jung Wook Park

End users' mother (name removed for privacy)

Publications

- J. W. Park and A. Helal, “TouchView: Cognitive Assistance for MPS Children,” Poster and Demonstration program, 11th ACM International Conference on Ubiquitous Computing (Ubicomp 09), Orlando, Florida. (pdf)