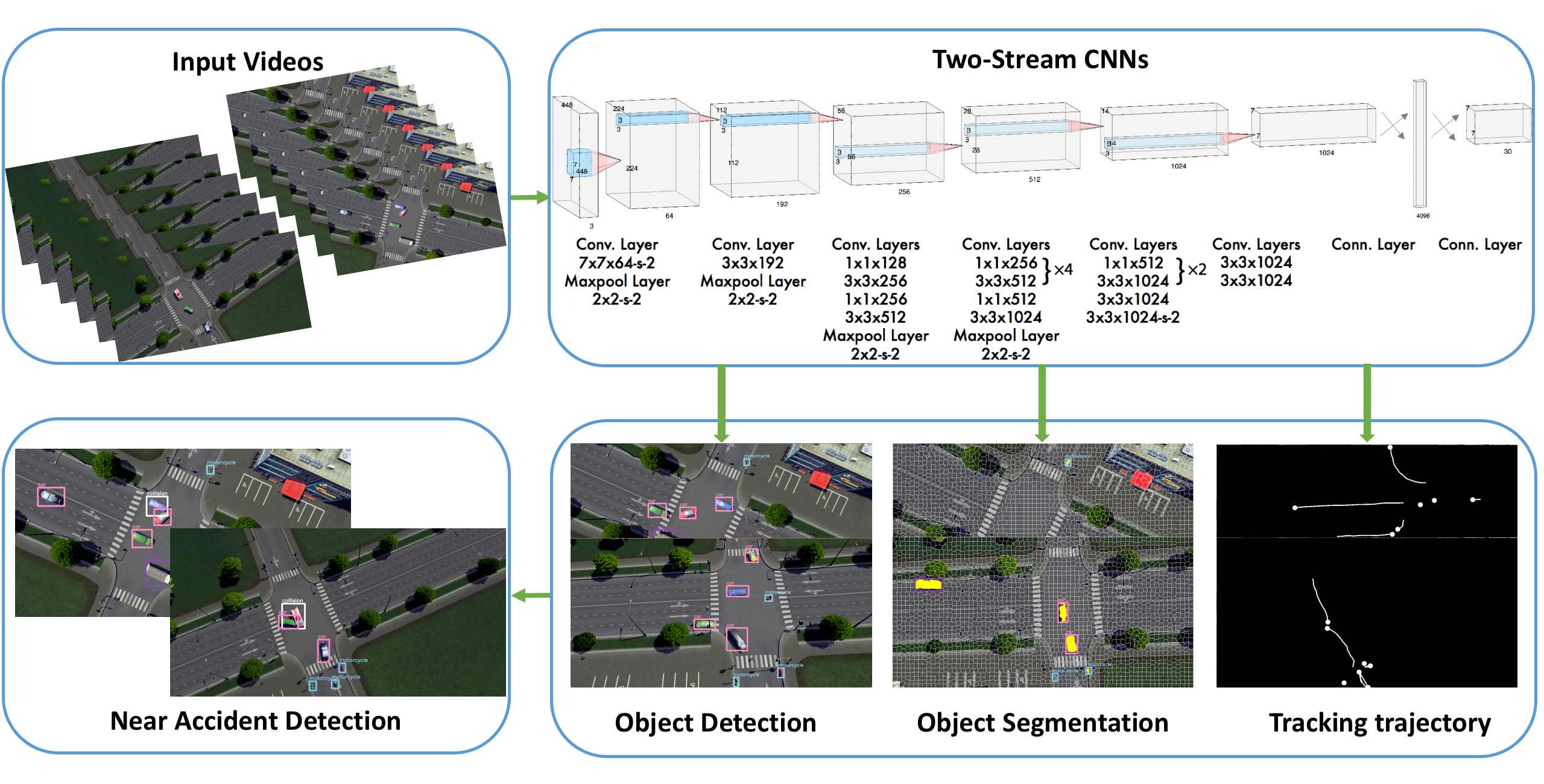


| Xiaohui Huang |

| Aotian Wu |

| Ke Chen |

| Anand Rangarajan |

| Sanjay Ranka |

This book is intended for pedagogical purposes and the authors have taken care to provide details based on their experience. However, the authors do not guarantee that the material in this book is accurate or will be effective in practice, nor are they responsible for any statement, material or formula that may have negative consequences, injuries, death etc. to the readers or the users of the book.

Rapid urbanization worldwide, the growing volume of vehicular traffic, and the increasing complexity of roadway networks have led to congestion, traffic jams, and traffic incidents [1, 2], which negatively affect productivity [3], the well-being of society [4], and the environment [5]. Keeping traffic flowing smoothly and safely is therefore an essential task for traffic engineers.

Significant advances in electronics, sensing, computing, data storage, and communications technologies in intelligent transportation systems (ITS) [6, 7] have led to some commonly seen aspects of ITS [8] which include:

This book presents algorithms and methods for using video analytics to process traffic data. Edge-based real-time machine learning (ML) techniques and video stream processing have several advantages. (1) There is no need to store copious amounts of video (a few minutes typically suffice for edge-based processing), thus addressing concerns of public agencies who do not want person-identifiable information to be stored for reasons of citizen privacy and legality. (2) The processing of the video stream at the edge will allow for the use of low bandwidth communication using wireline and wireless networks to a central system such as a cloud, resulting in a compressed and holistic picture of the entire city. (3) The real-time processing enables a wide variety of novel transportation applications at the intersection, street, and system levels that were not possible hitherto, significantly impacting safety and mobility.

The existing monitoring systems and decision-making for this purpose have several limitations:

In Section 1.2, we will describe the data sources from which we collect and store loop recorder data and video data. Section 1.3 summarizes the rest of the chapters in the book.

| Table 1.1.: | Raw event logs from signal controllers. Most modern controllers generate these data at a frequency of 10 Hz. |

| SignalID | Timestamp | EventCode | EventParam |
|---|---|---|---|
| 1490 | 2018-08-01 00:00:00.000100 | 82 | 3 |
| 1490 | 2018-08-01 00:00:00.000300 | 82 | 8 |
| 1490 | 2018-08-01 00:00:00.000300 | 0 | 2 |
| 1490 | 2018-08-01 00:00:00.000300 | 0 | 6 |
| 1490 | 2018-08-01 00:00:00.000300 | 46 | 1 |
| 1490 | 2018-08-01 00:00:00.000300 | 46 | 2 |
| 1490 | 2018-08-01 00:00:00.000300 | 46 | 3 |

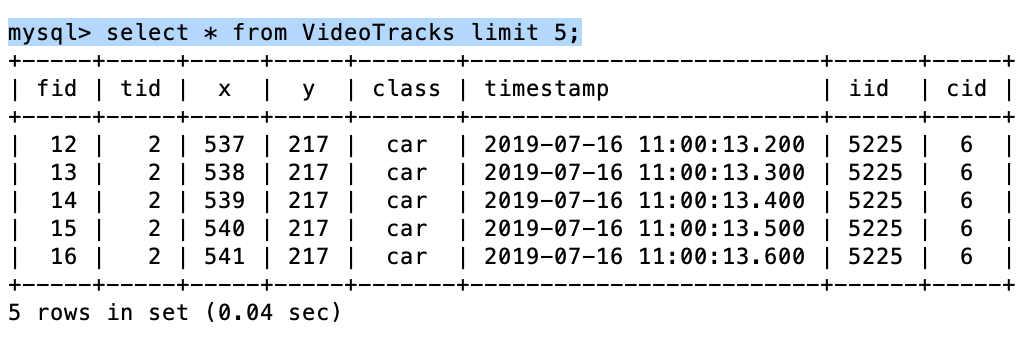

Signal controllers based on the latest Advanced Transportation Controller (ATC) standards can record signal events (e.g., vehicle arrival and departure events) at a high data rate (10 Hz). The attributes in the data include the intersection identifier, timestamp, EventCode, and EventParam. The accompanying metadata describes what each EventCode and EventParam indicates. For instance, EventCode 82 denotes a vehicle arrival, and the corresponding EventParam is the detector channel that captured the event. The other necessary metadata is the detector channel to lane/phase mapping, which identifies the lane corresponding to a specific detector channel. Performance measures of interest, such as arrivals on red, arrivals on green, or demand-based split failures, can be derived at a granular (cycle-by-cycle) level from these data.
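As a minimal sketch of how such logs can be filtered, the Python snippet below counts vehicle arrivals (EventCode 82) per detector channel using the rows of Table 1.1. The function name `count_arrivals` and the tuple layout are illustrative assumptions and are not part of any controller software.

```python
from collections import Counter

# Rows copied from Table 1.1: (SignalID, Timestamp, EventCode, EventParam).
EVENTS = [
    (1490, "2018-08-01 00:00:00.000100", 82, 3),
    (1490, "2018-08-01 00:00:00.000300", 82, 8),
    (1490, "2018-08-01 00:00:00.000300", 0, 2),
    (1490, "2018-08-01 00:00:00.000300", 0, 6),
    (1490, "2018-08-01 00:00:00.000300", 46, 1),
    (1490, "2018-08-01 00:00:00.000300", 46, 2),
    (1490, "2018-08-01 00:00:00.000300", 46, 3),
]

VEHICLE_ARRIVAL = 82  # EventCode for a vehicle arrival, as stated in the text


def count_arrivals(events, signal_id):
    """Count vehicle-arrival events per detector channel at one intersection."""
    counts = Counter()
    for sid, _timestamp, code, param in events:
        if sid == signal_id and code == VEHICLE_ARRIVAL:
            counts[param] += 1  # EventParam holds the detector channel
    return dict(counts)


arrivals = count_arrivals(EVENTS, 1490)
print(arrivals)
```

Mapping each detector channel to a lane or phase would require the channel-to-lane metadata mentioned above, which is not reproduced here.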

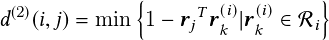

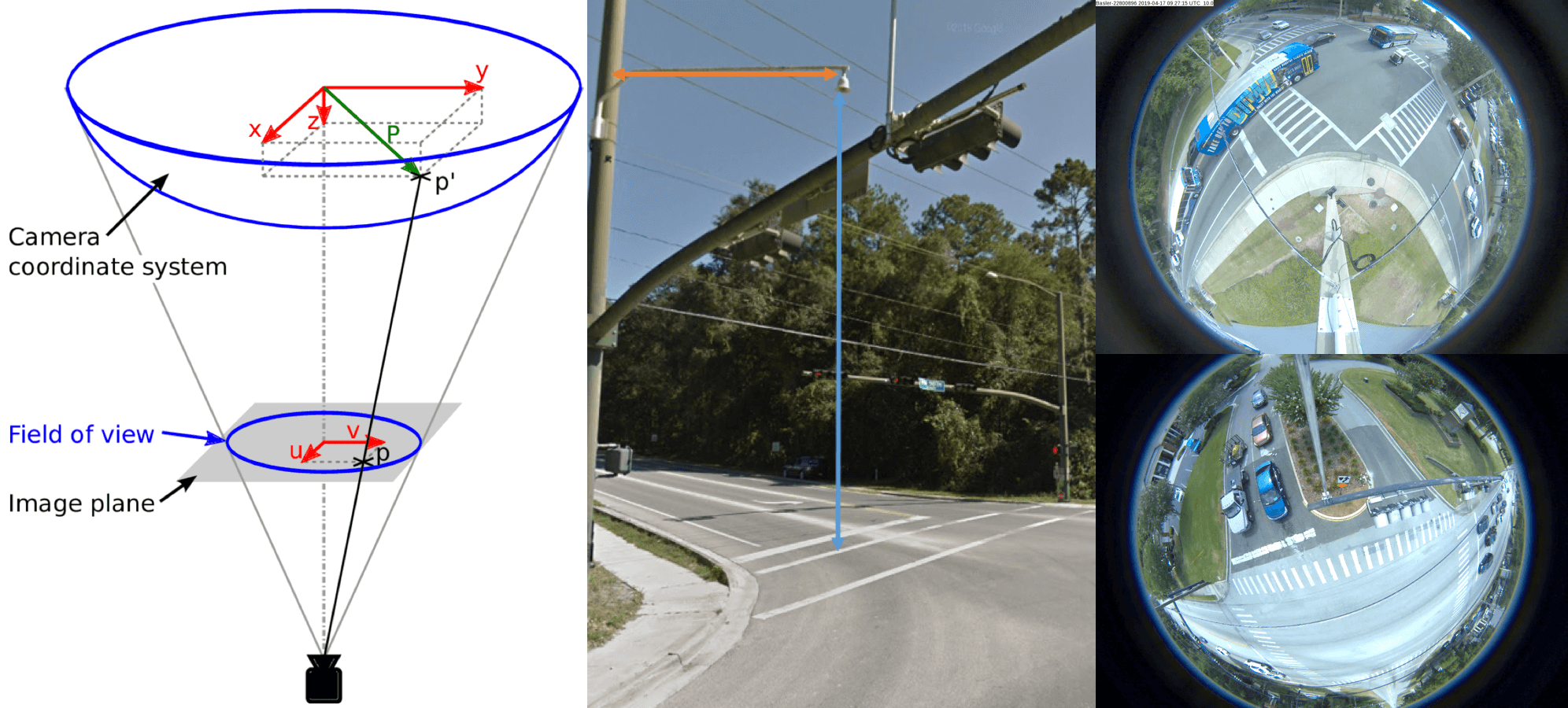

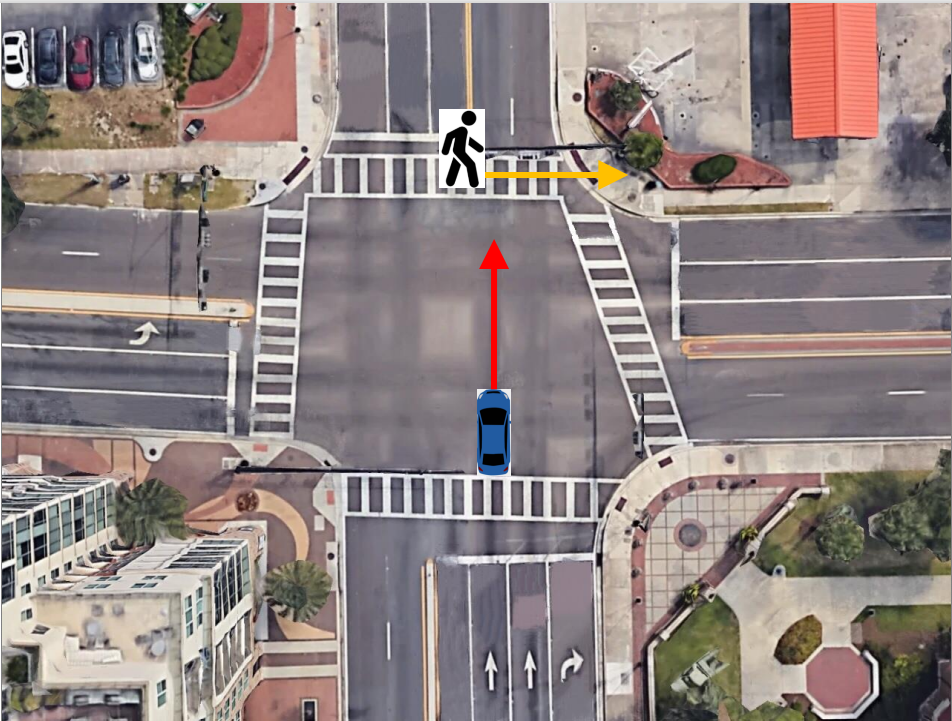

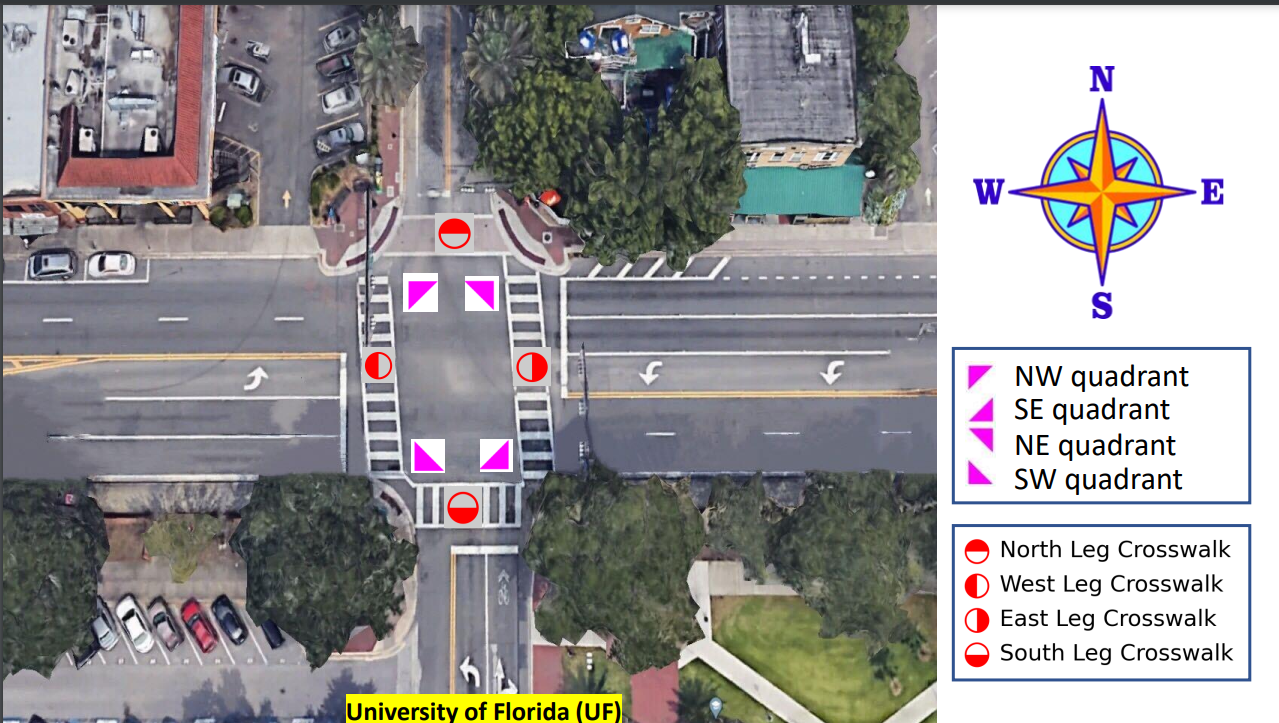

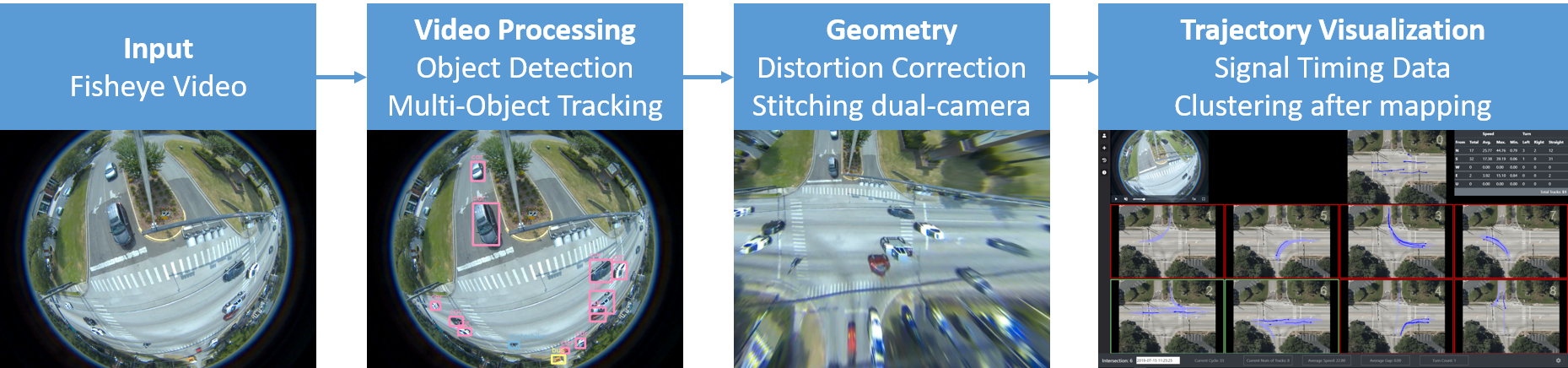

We process videos captured by fisheye cameras installed at intersections. A fisheye (bell) camera has an ultrawide-angle lens that produces a wide panoramic, nonrectilinear image. Monitoring an intersection with a fisheye camera is advantageous because a single camera can capture a complete picture of the entire intersection.

Fisheye intersection videos are more challenging than videos collected by conventional surveillance cameras for reasons including fisheye distortion, multiple object types (pedestrians and vehicles), and diverse lighting conditions. For the videos of each intersection, we annotated the spatial location (bounding boxes), temporal location (frames), and class of each object to generate ground truth for object detection, tracking, and near-miss detection.

The chapters are organized as follows. In Chapter 2, we propose an integrated two-stream convolutional network architecture that performs real-time detection, tracking, and near-miss detection of road users in traffic video data. The two-stream model consists of a spatial stream network for object detection and a temporal stream network to leverage motion features for multiple object tracking. We detect near-misses by incorporating appearance features and motion features from these two networks. Further, we demonstrate that our approaches can be executed in real-time and at a frame rate higher than the video frame rate on various videos.

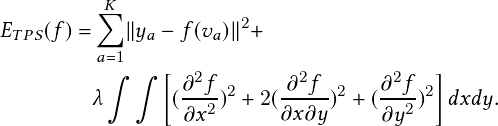

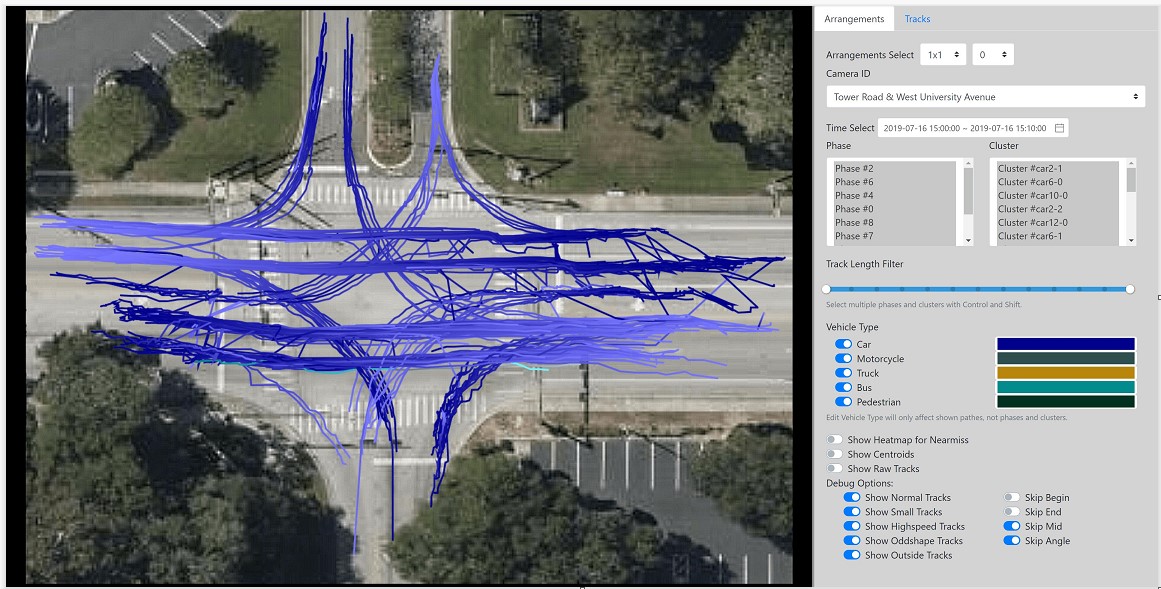

In Chapter 3, we introduce trajectory clustering and anomaly detection algorithms. We develop real-time or near real-time algorithms for detecting near-misses for intersection video collected using fisheye cameras. We propose a novel method consisting of the following steps: (1) extracting objects and multiple object tracking features using convolutional neural networks; (2) densely mapping object coordinates to an overhead map; and (3) learning to detect near-misses by new distance measures and temporal motion. The experiments demonstrate the effectiveness of our approach with a real-time performance at 40 fps and high specificity.

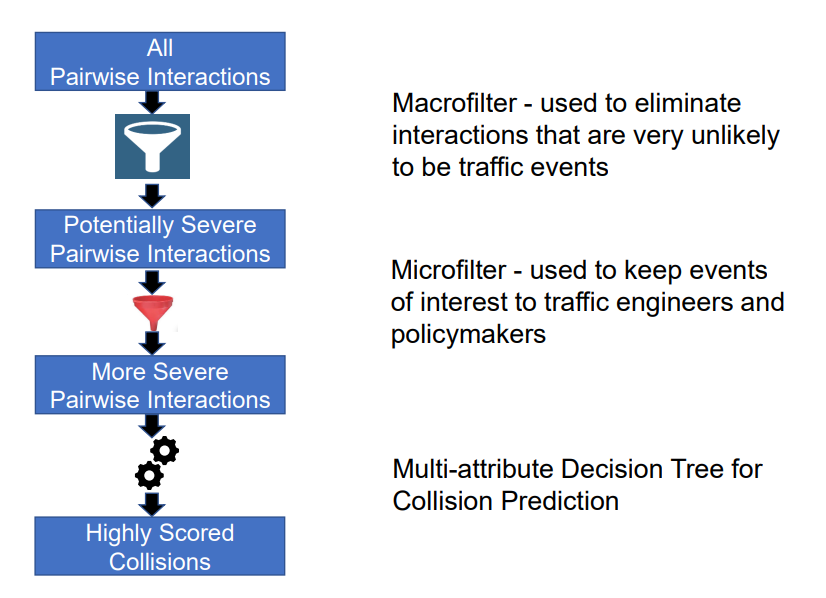

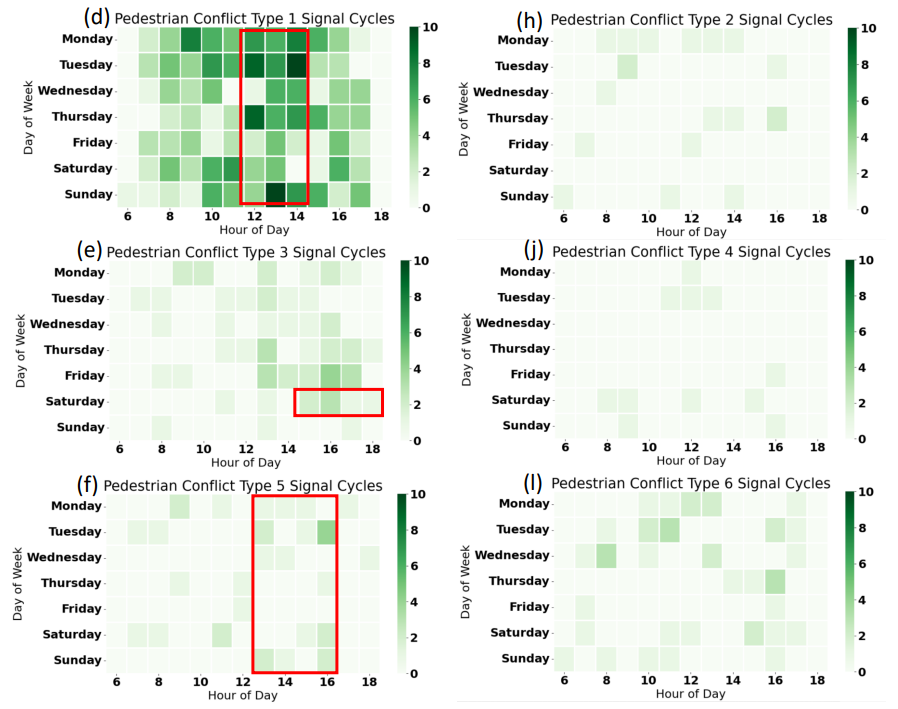

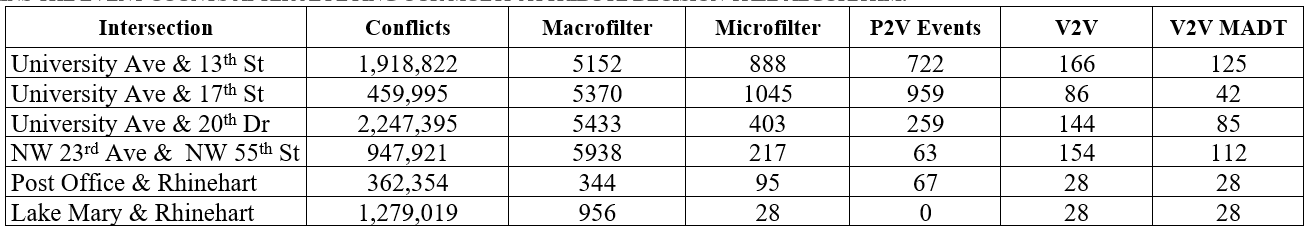

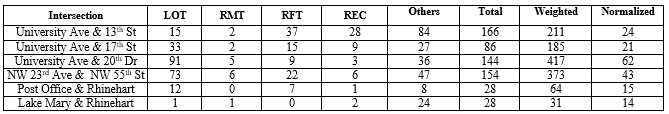

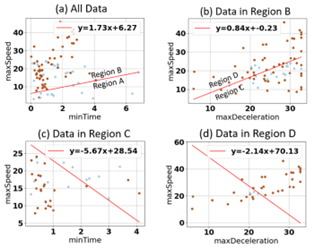

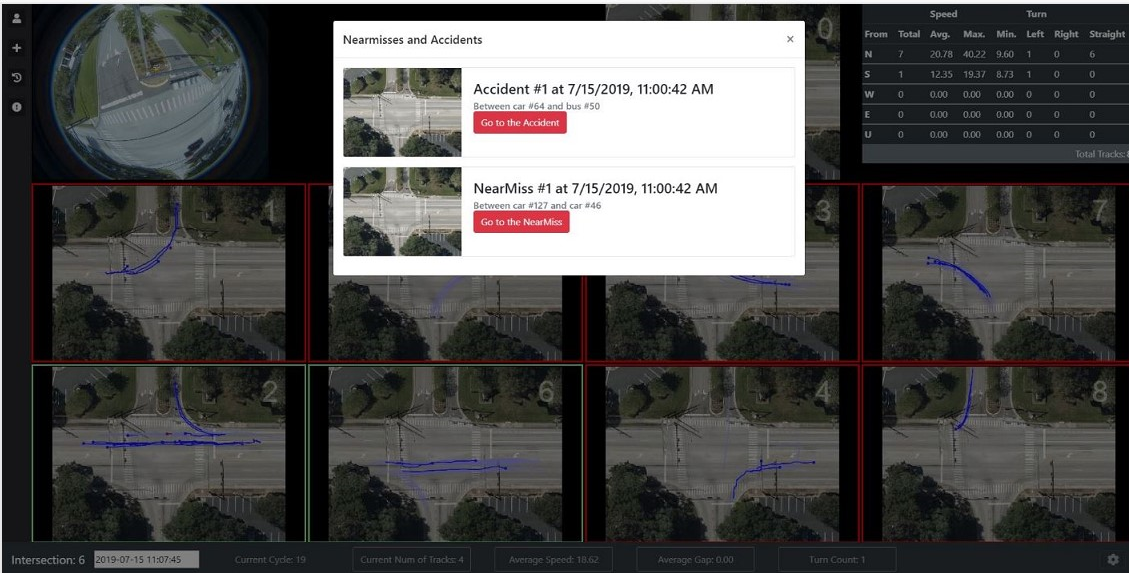

Chapter 4 presents an end-to-end software pipeline for processing traffic videos and running a safety analysis based on surrogate safety measures. As a part of road safety initiatives, surrogate road safety approaches have gained popularity due to the rapid advancement of video collection and processing technologies. We developed algorithms and software to determine trajectory movement and phases that, when combined with signal timing data, enable us to perform accurate event detection and categorization regarding the type of conflict for both pedestrian-vehicle and vehicle-vehicle interactions. Using this information, we introduce a new surrogate safety measure, “severe event,” which is quantified by multiple existing metrics such as time-to-collision (TTC) and post-encroachment time (PET) as recorded in the event, deceleration, and speed. We present an efficient multistage event-filtering approach followed by a multi-attribute decision tree algorithm that prunes the extensive set of conflicting interactions to a robust set of severe events. The above pipeline was used to process traffic videos from several intersections in multiple cities to measure and compare pedestrian and vehicle safety. Detailed experimental results are presented to demonstrate the effectiveness of this pipeline.

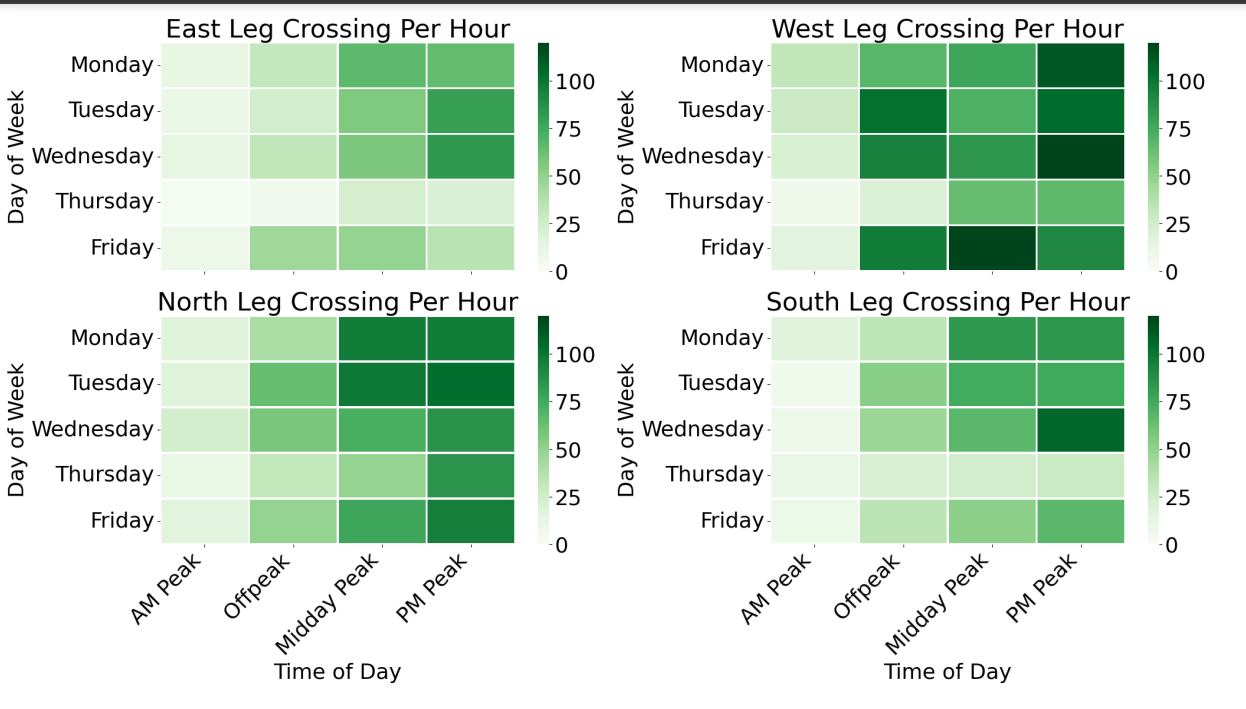

Chapter 5 illustrates cutting-edge methods by which conflict hotspots can be detected in various situations and conditions. Both pedestrian-vehicle and vehicle-vehicle conflict hotspots can be discovered, and we present an original technique for including more information in the graphs with shapes. Conflict hotspot detection, volume hotspot detection, and intersection-service evaluation allow us to comprehensively understand the safety and performance issues and test countermeasures. The selection of appropriate countermeasures is demonstrated by extensive analysis and discussion of two intersections in Gainesville, Florida, USA. Just as important is the evaluation of the efficacy of countermeasures. This chapter advocates for selection from a menu of countermeasures at the municipal level, with safety as the top priority. Performance is also considered, and we present a novel concept of a performance-safety trade-off at intersections.

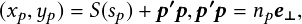

In Chapter 6, we propose to perform trajectory prediction using surveillance camera images. As vehicle-to-infrastructure (V2I) technology enables low-latency wireless communication, warnings from our prediction algorithm can be sent to vehicles in real-time. Our approach consists of an offline learning phase and an online prediction phase. The offline phase learns common motion patterns from clustering, finds prototype trajectories for each cluster, and updates the prediction model. The online phase predicts the future trajectories for incoming vehicles, assuming they follow one of the motion patterns learned from the offline phase. We adopted a long short-term memory encoder-decoder (LSTM-ED) model for trajectory prediction. We also explored using a curvilinear coordinate system (CCS) which utilizes the learned prototype and simplifies the trajectory representation. Our model is also able to handle noisy data and variable-length trajectories. Our proposed approach outperforms the baseline Gaussian process (GP) model and shows sufficient reliability when evaluated on collected intersection data.

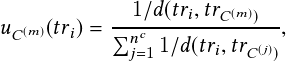

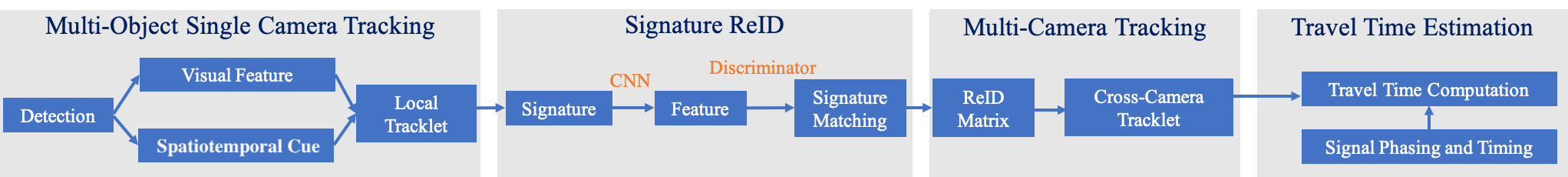

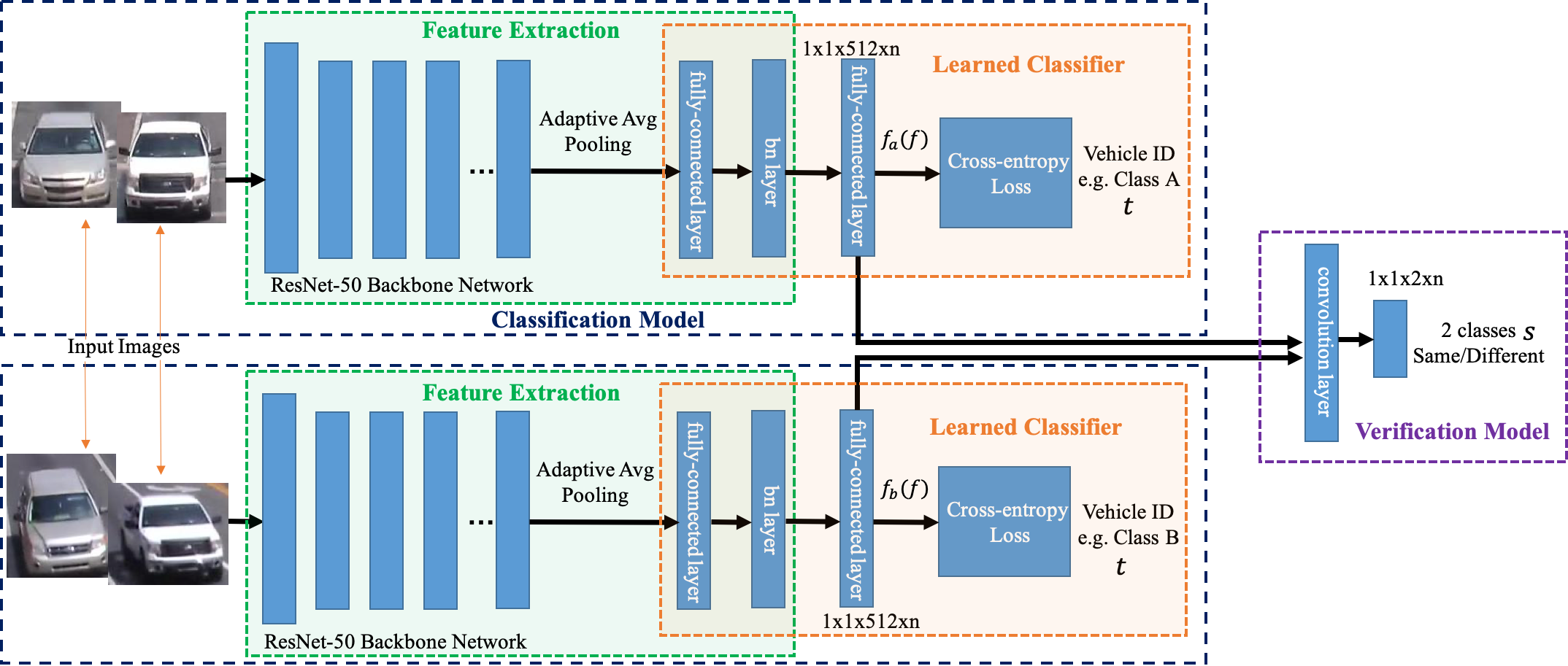

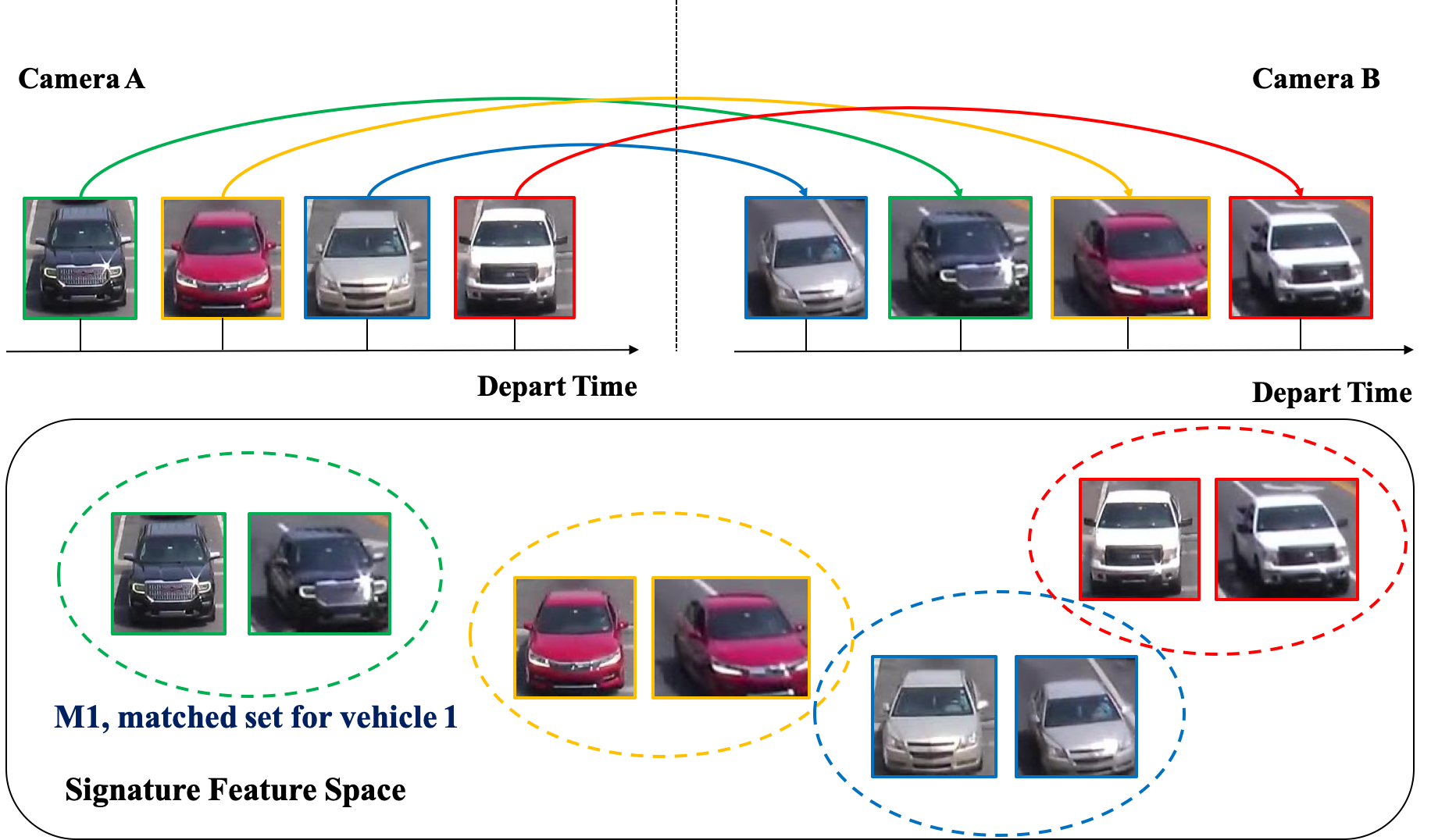

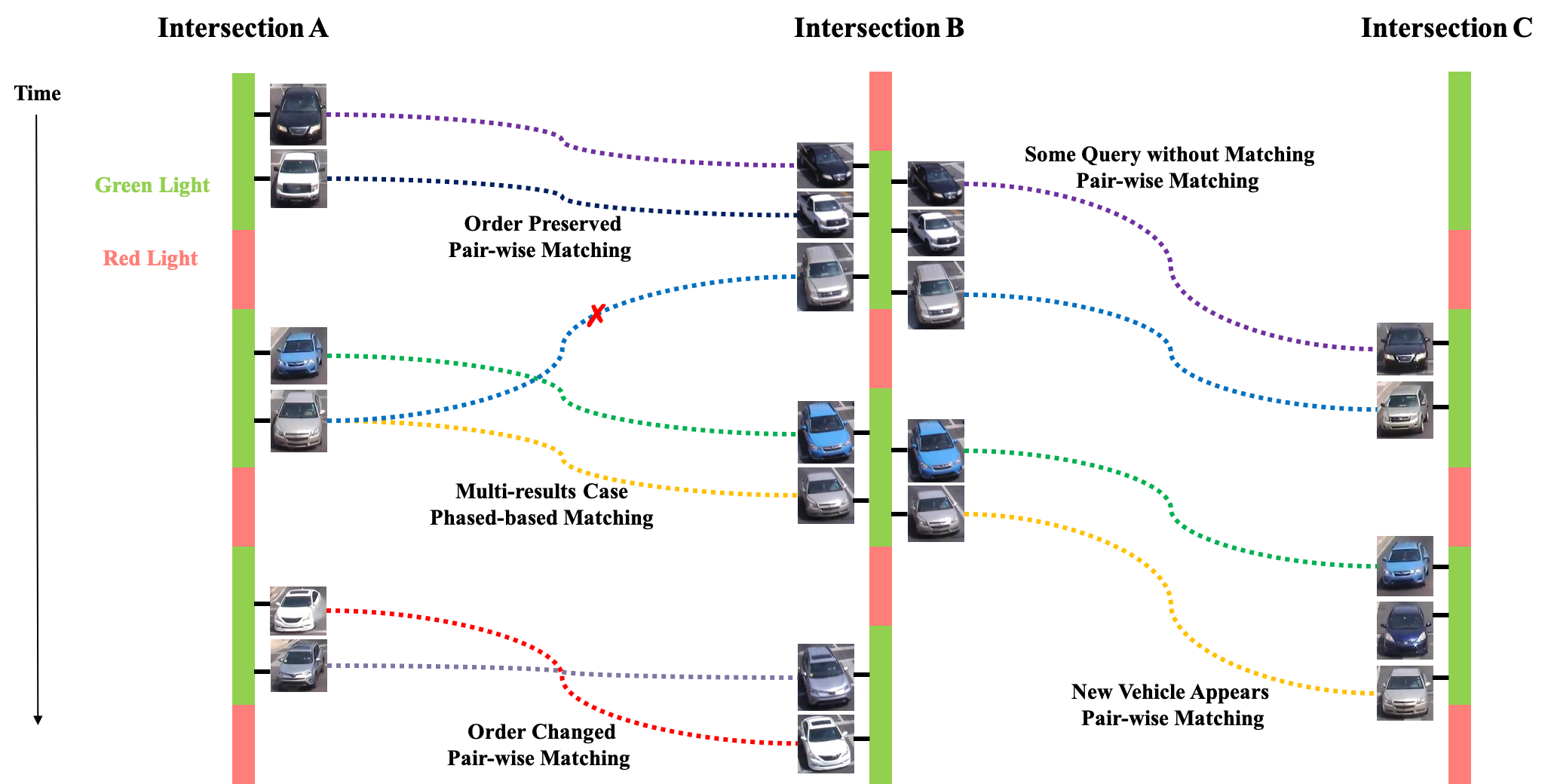

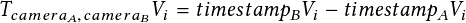

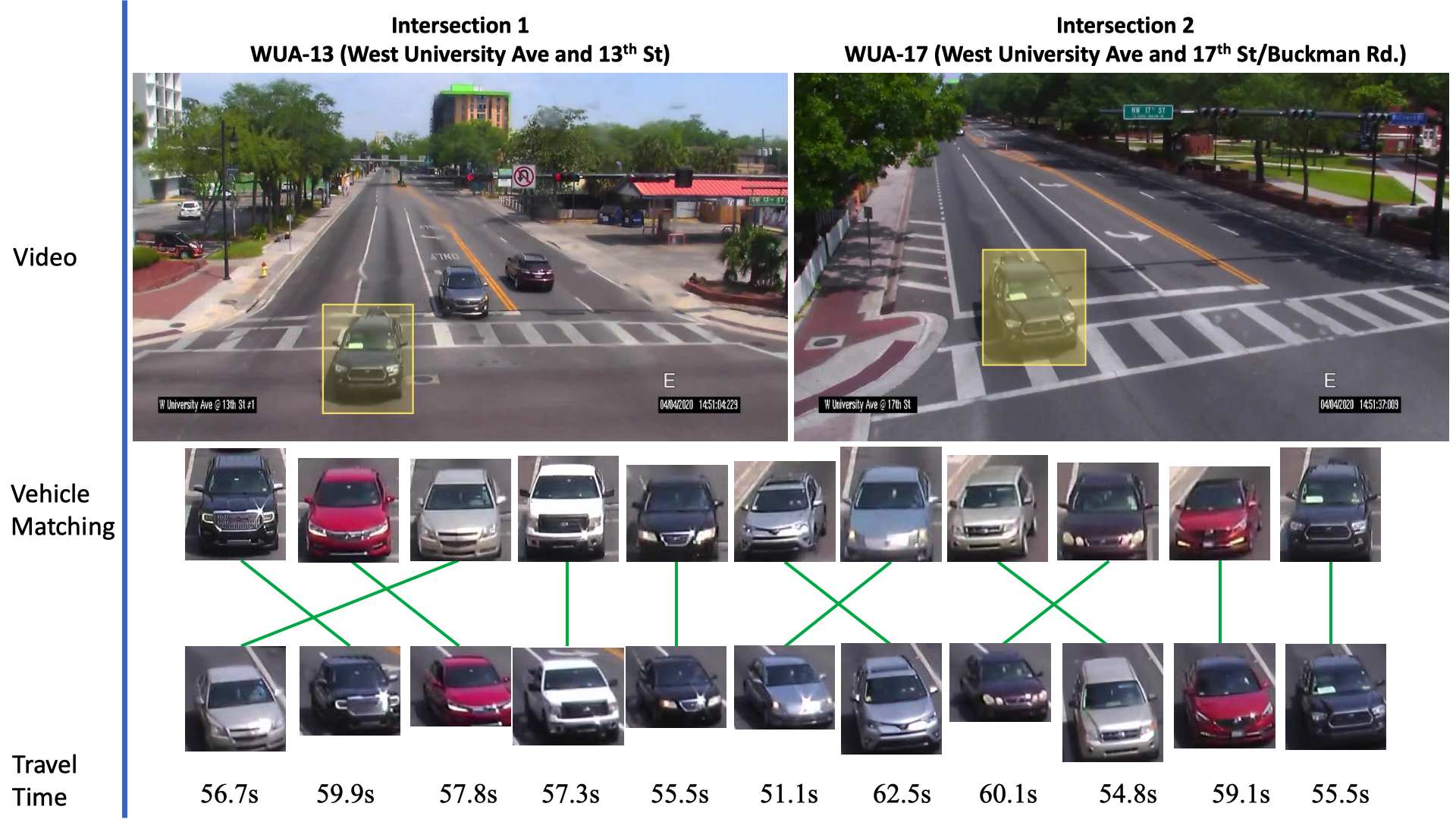

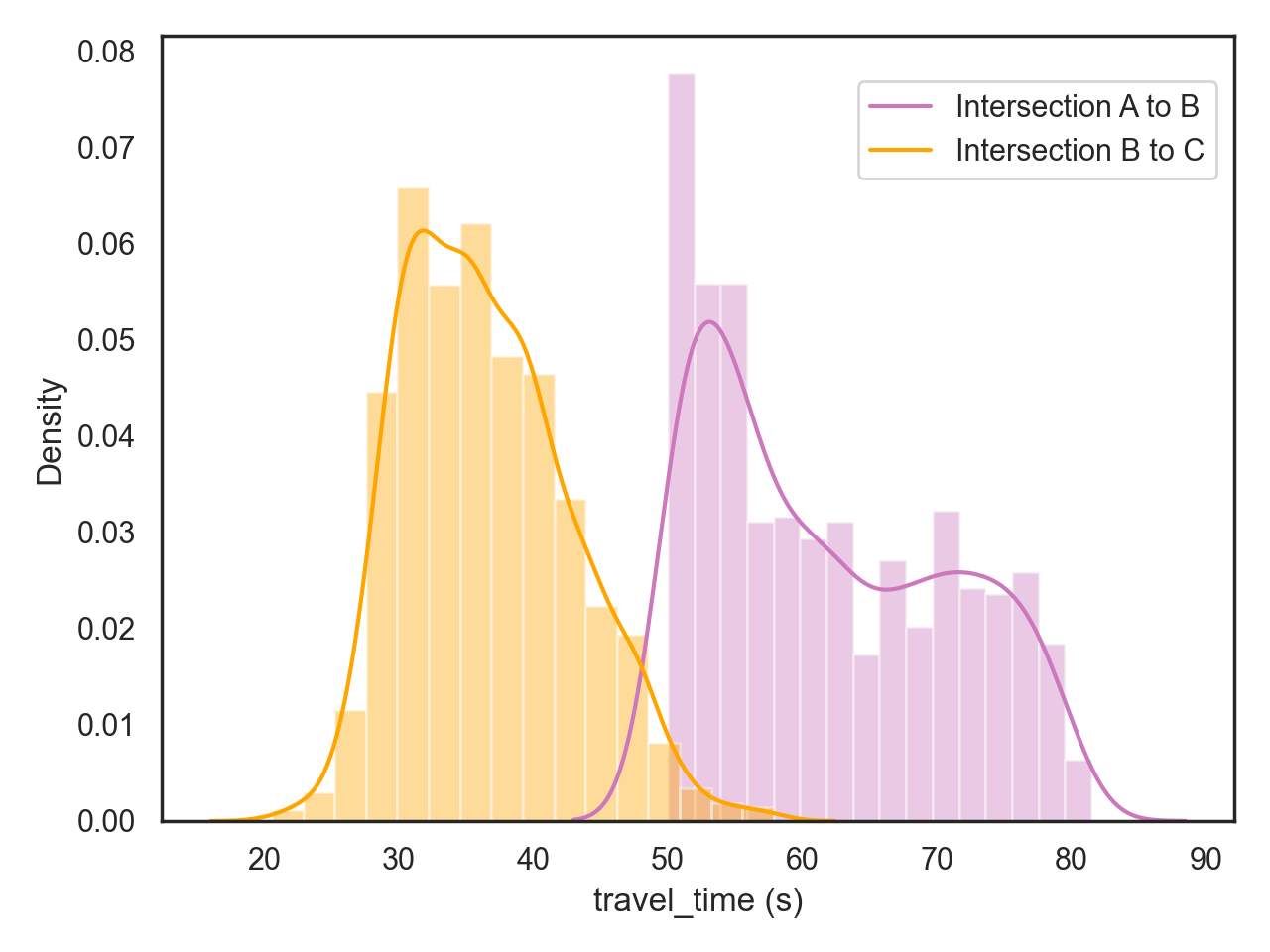

In Chapter 7, we propose a methodology for travel-time estimation of traffic flow, an important problem with critical implications for traffic congestion analysis. We developed techniques for using intersection videos to identify vehicle trajectories across multiple cameras and analyze corridor travel time. Our approach consists of (1) multi-object single-camera tracking, (2) vehicle re-identification among different cameras, (3) multi-object multi-camera tracking, and (4) travel-time estimation. We evaluated the proposed framework on real intersections in Florida with pan and fisheye cameras. The experimental results demonstrate the viability and effectiveness of our method.

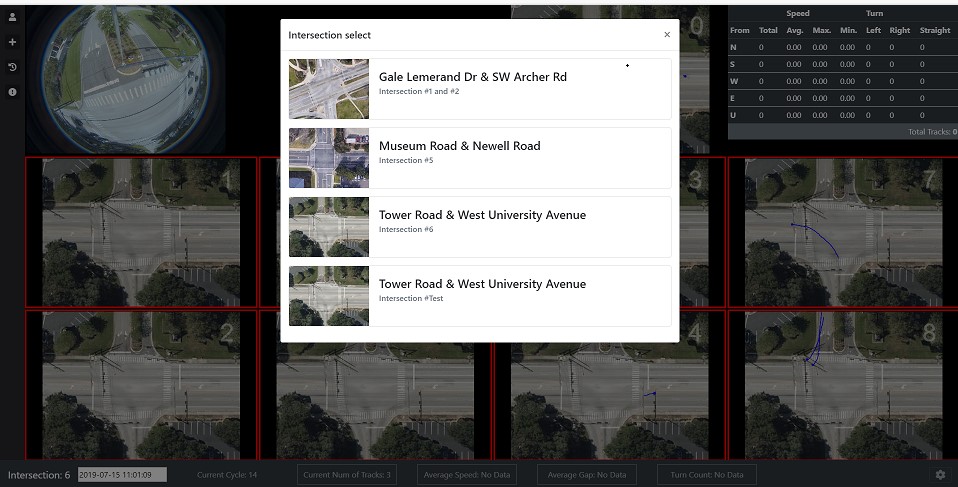

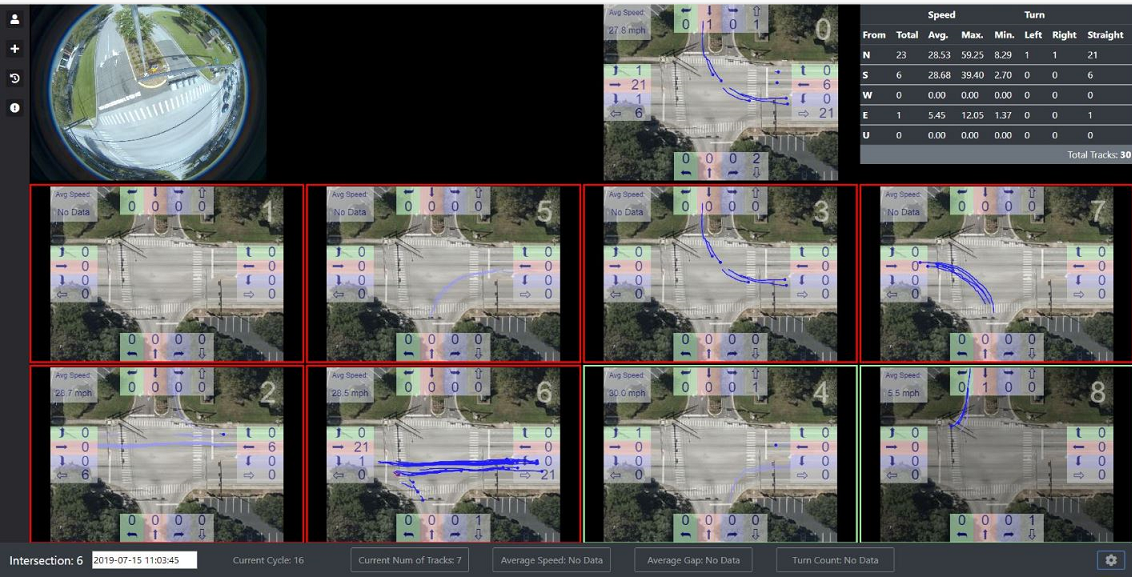

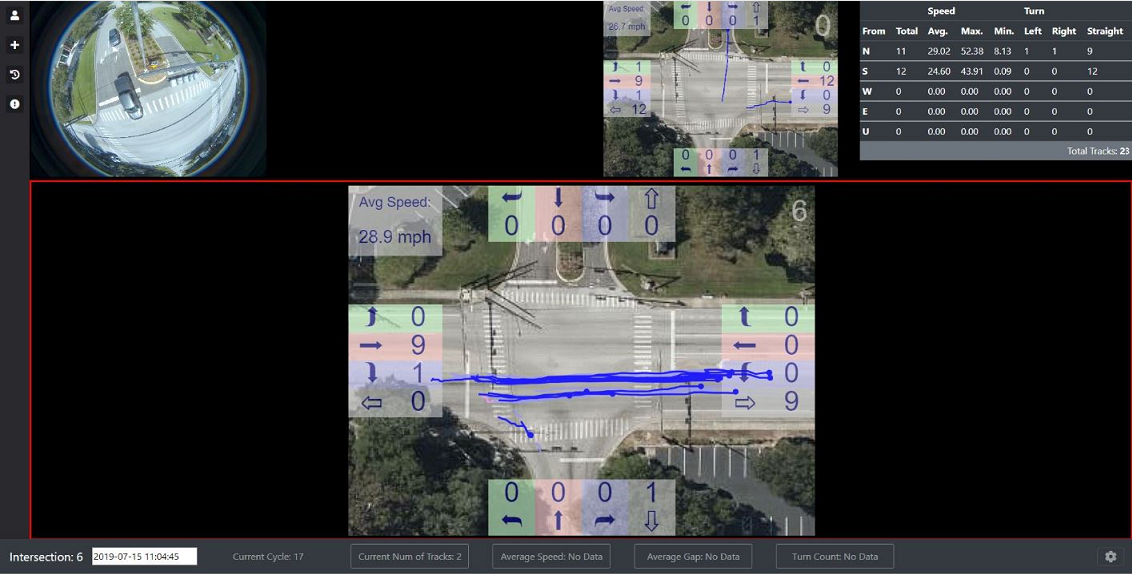

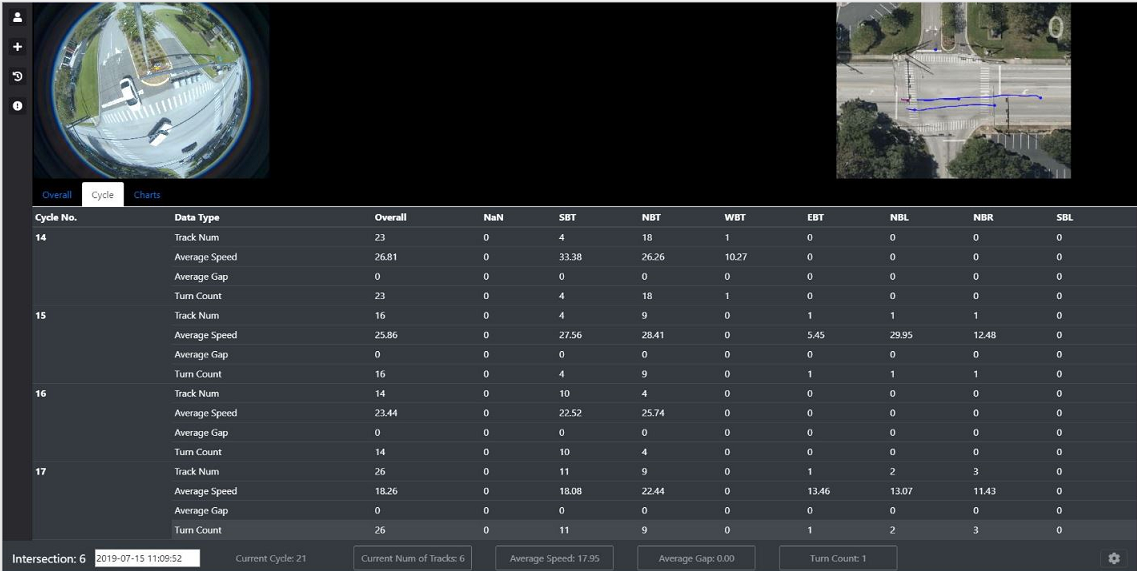

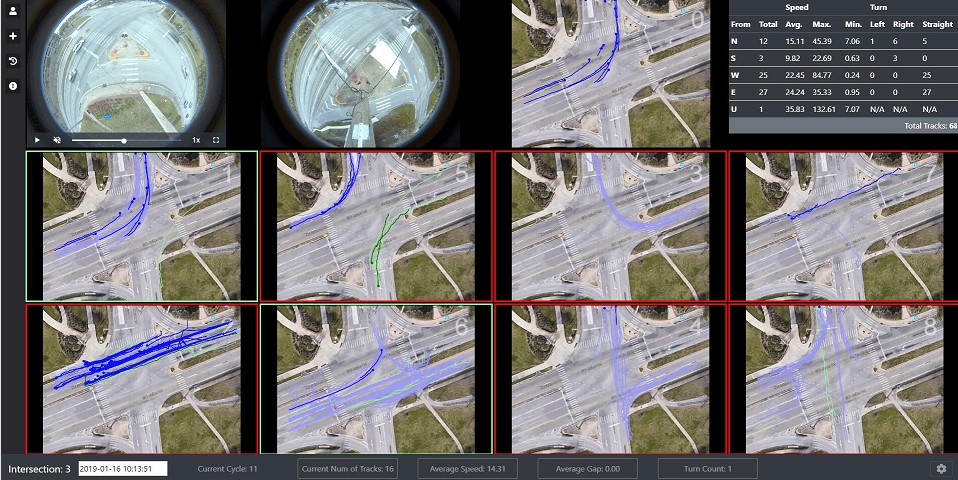

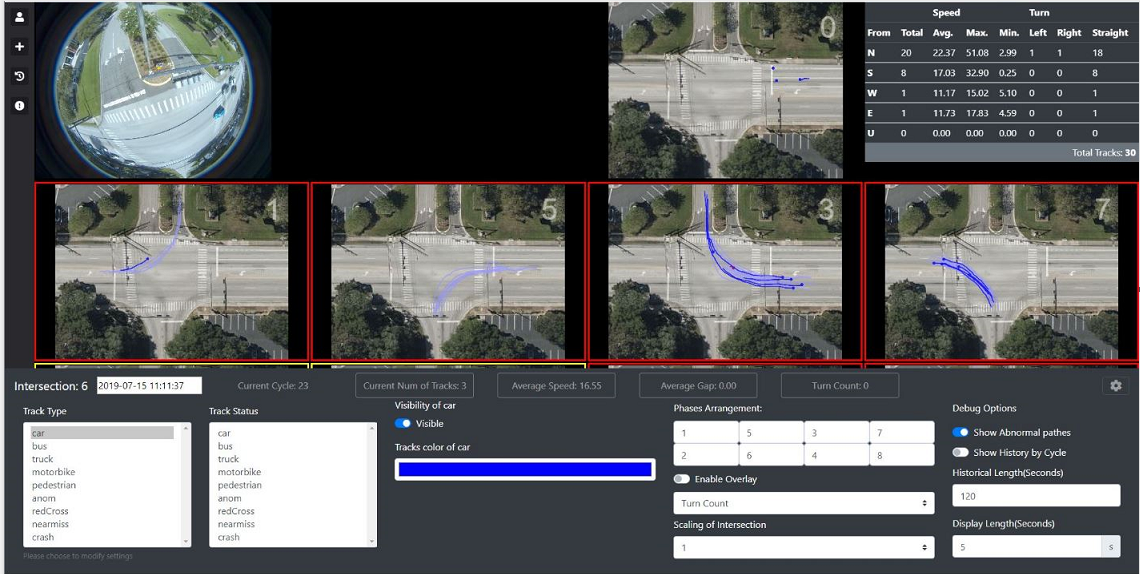

In Chapter 8, we present a visual analytics framework that traffic engineers may use to analyze the events and performance at an intersection. The tool ingests streaming videos collected from a fisheye camera, cleans the data, and runs analytics. The tool presented here has two modes: streaming and historical modes. The streaming mode may be used to analyze data close to real-time with a latency set by the user. In the historical mode, the user can run a variety of trend analyses on historical data.

In Chapter 9, we summarize the contributions of the present work.

The rapid growth of exploitable and, in many cases, open data can help mitigate traffic congestion and improve safety. Despite significant advances in vehicle technology, traffic engineering practices, and analytics based on crash data, the number of traffic crashes and fatalities remains too high. Many drivers are frustrated by prolonged (and potentially preventable) intersection delays. Video or light detection and ranging (LiDAR) processing, big data analytics, artificial intelligence, and machine learning can profoundly improve the ability to address these challenges. Collecting and exploiting large datasets is familiar to the transportation sector. However, the confluence of ubiquitous digital devices and sensors, significantly lower hardware costs for computing and storage, enhanced sensing and communication technologies, and open-source analytics solutions has enabled novel applications. These applications may yield insights into otherwise unobserved patterns that positively influence individuals and society.

The technologies of artificial intelligence (AI) and the Internet of Things (IoT) are ushering in a new promising era of “smart cities” where billions of people around the world can improve the quality of their lives in aspects of transportation, security, information, communications, etc. One example of data-centric AI solutions is computer vision technologies that enable vision-based intelligence for edge devices across multiple architectures. Sensor data from smart devices or video cameras can be analyzed immediately to provide real-time analysis for intelligent transportation systems (ITS). At traffic intersections, there is a greater volume of road users (pedestrians and vehicles), traffic movement, dynamic traffic events, near-accidents, etc. It is a critically important application to enable global monitoring of traffic flow, local analysis of road users, and automatic near-miss detection.

As a new technology, vision-based intelligence has many applications in traffic surveillance and management [9, 10, 11, 12, 13, 14]. Many research works have focused on traffic data acquisition with aerial videos [15, 16]; the aerial view provides better perspectives to cover a large area and focus resources for surveillance tasks. Unmanned aerial vehicles (UAVs) and omnidirectional cameras can acquire helpful aerial videos for traffic surveillance, especially at intersections, with a broader perspective of the traffic scene and the advantage of being mobile and spatiotemporal. A recent trend in vision-based intelligence is to apply computer vision technologies to these acquired intersection aerial videos [17, 18] and process them at the edge across multiple ITS architectures.

Object detection and multiple object tracking are widely used applications in transportation, and real-time solutions are significant, especially for the emerging area of big transportation data. A near-miss is an event that has the potential to develop into a collision between two vehicles or between a vehicle and a pedestrian or bicyclist. These events are important to monitor and analyze to prevent crashes in the future. They are also a proxy for potential timing and design issues at the intersection. Camera-monitored intersections produce video data in gigabytes per camera per day. Analyzing thousands of trajectories collected per hour at an intersection from different sources to identify near-miss events quickly becomes challenging, given the amount of data to be examined and the relatively rare occurrence of such events.

In this work, we investigate the use of traffic video data for near-miss detection. To the best of our knowledge, a unified system that performs real-time detection and tracking of road users together with near-miss detection for aerial videos is not available. Therefore, we have collected video datasets and present a real-time deep learning-based method to tackle these problems.

Generally, a vision-based surveillance tool for ITS should meet several requirements: (1) segment vehicles from their surroundings (including other road objects and the background) to detect all road objects (still or moving); (2) classify detected vehicles into categories: cars, buses, trucks, motorbikes, etc.; (3) extract spatial and temporal features (motion, velocity, and trajectory) to enable more specific tasks, including vehicle tracking, trajectory analysis, near-miss detection, anomaly detection, etc.; (4) function under various traffic conditions and lighting conditions; and (5) operate in real-time. Although research on vision-based systems for traffic surveillance has grown over the decades, many of the criteria listed above remain unmet. Early solutions [19] neither identify individual vehicles as unique targets nor progressively track their movements. Methods have been proposed to address individual vehicle detection and vehicle tracking problems [20, 21, 9] with tracking strategies and optical flow deployment. Compared to traditional hand-crafted features, deep learning methods [22, 23, 24, 25, 26, 27] for object detection have demonstrated robustness when a generic detector is specialized to a specific scene. Recently, automatic traffic accident detection has become an important topic. Before detecting accident events [28, 12, 29, 30, 31], one typical approach is to apply object detection or tracking methods using a histogram of flow gradient (HFG), hidden Markov model (HMM), or Gaussian mixture model (GMM). Other approaches [32, 33, 34, 35, 36, 37, 38, 39, 40] use low-level features (e.g., motion features) to demonstrate better robustness. Neural networks have also been employed for automatic accident detection [41, 42, 43, 44].

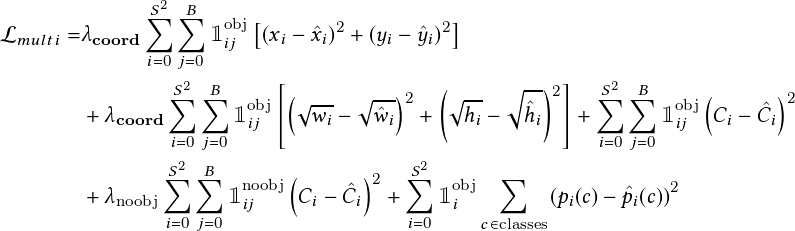

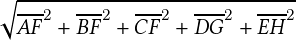

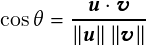

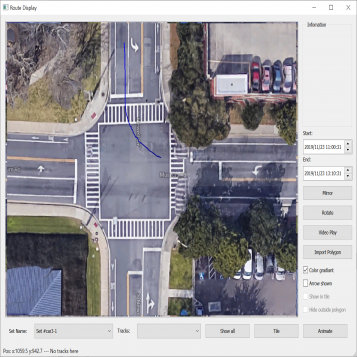

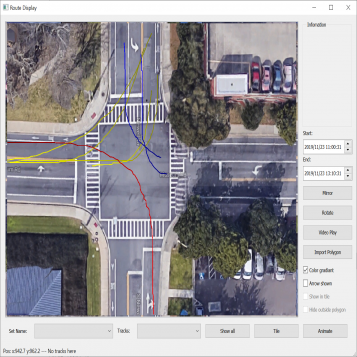

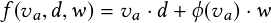

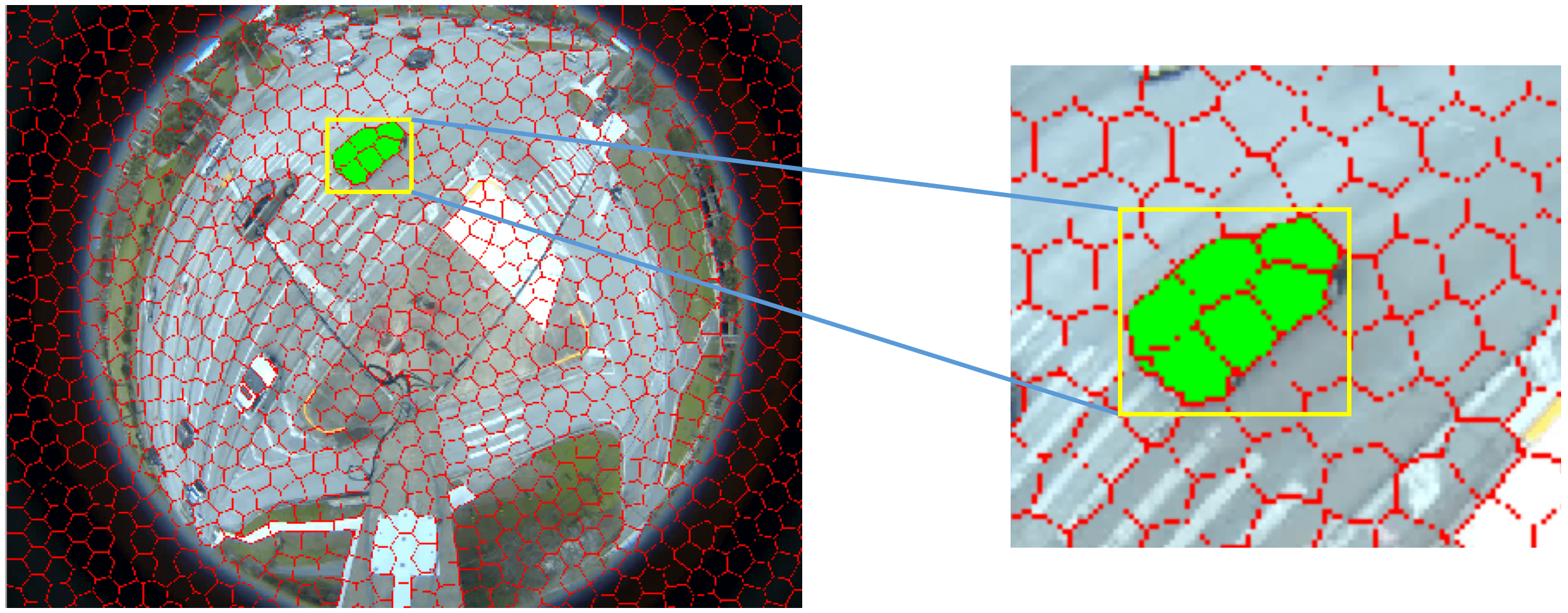

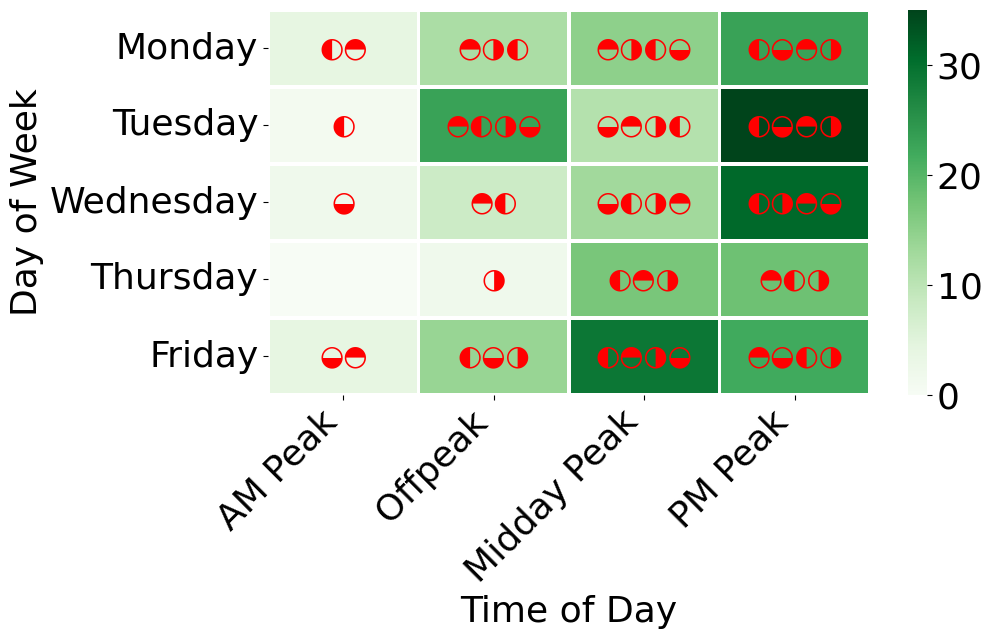

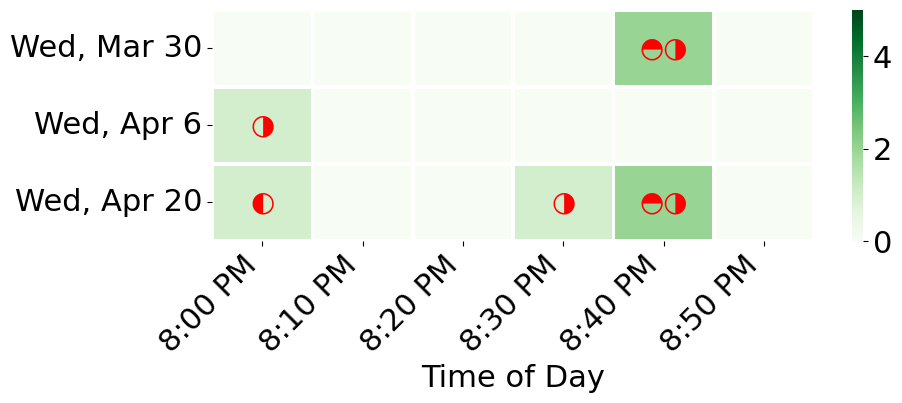

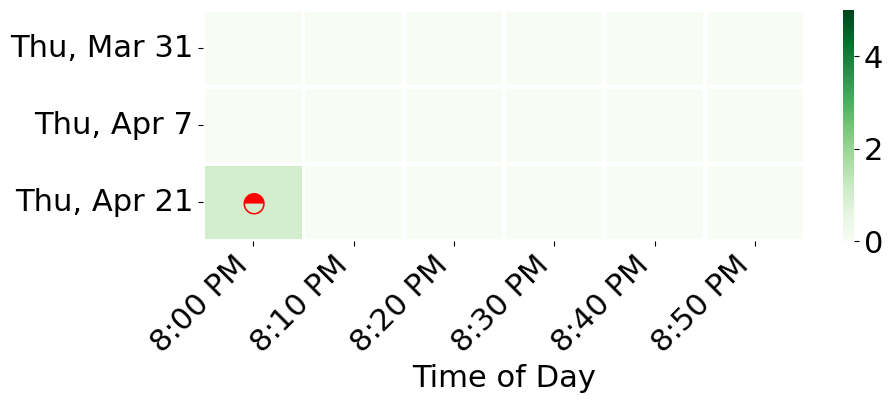

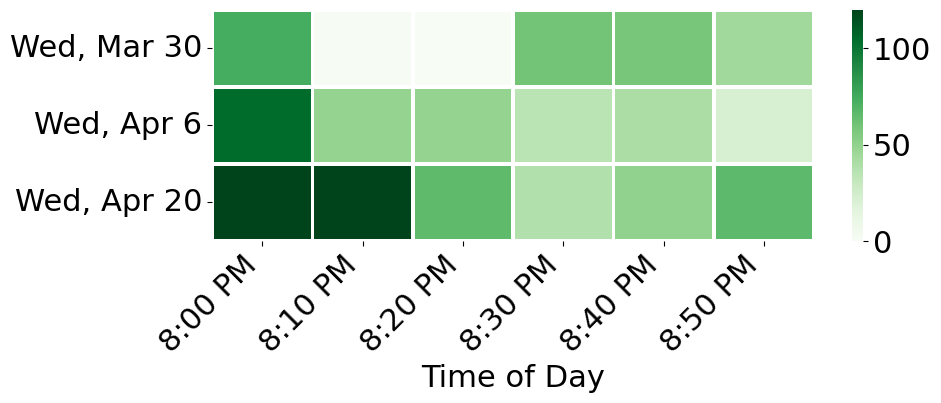

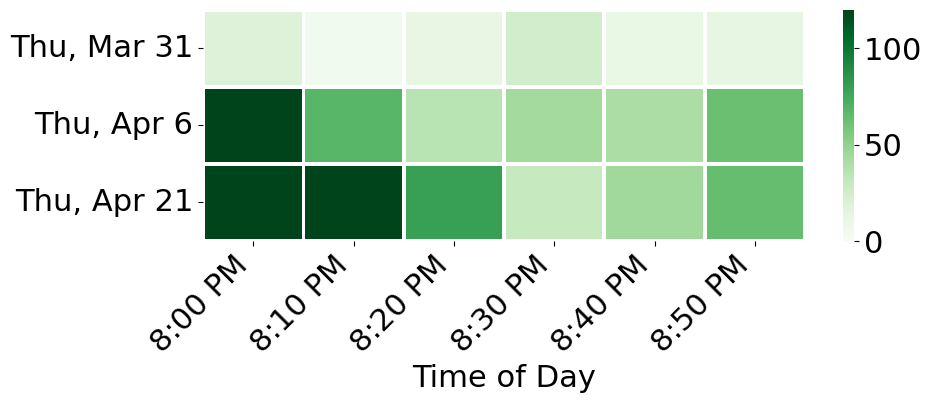

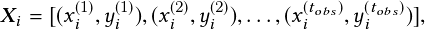

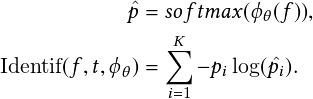

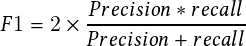

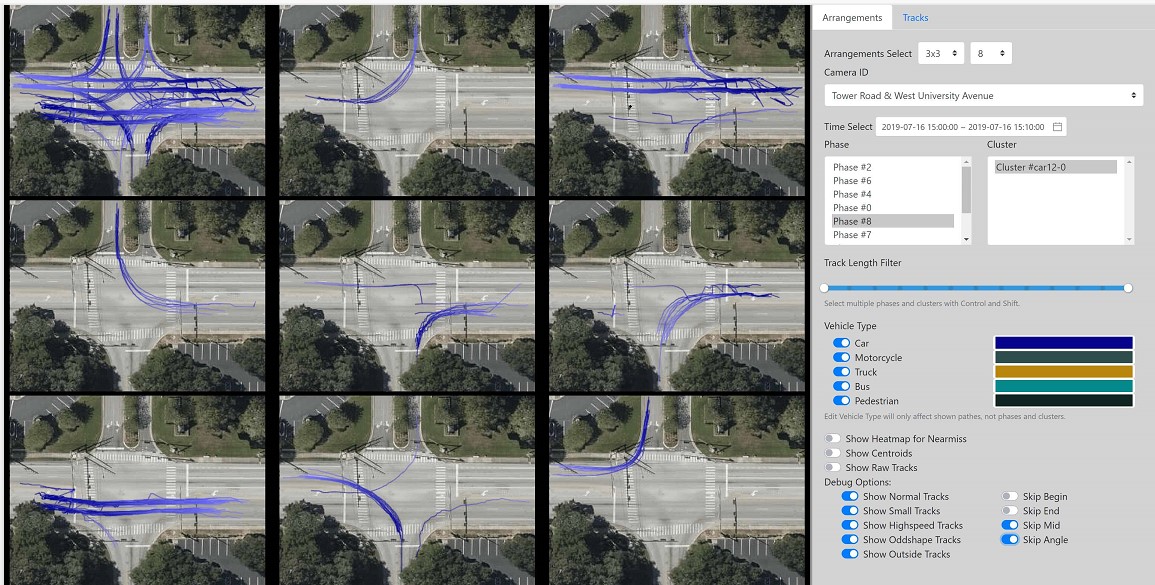

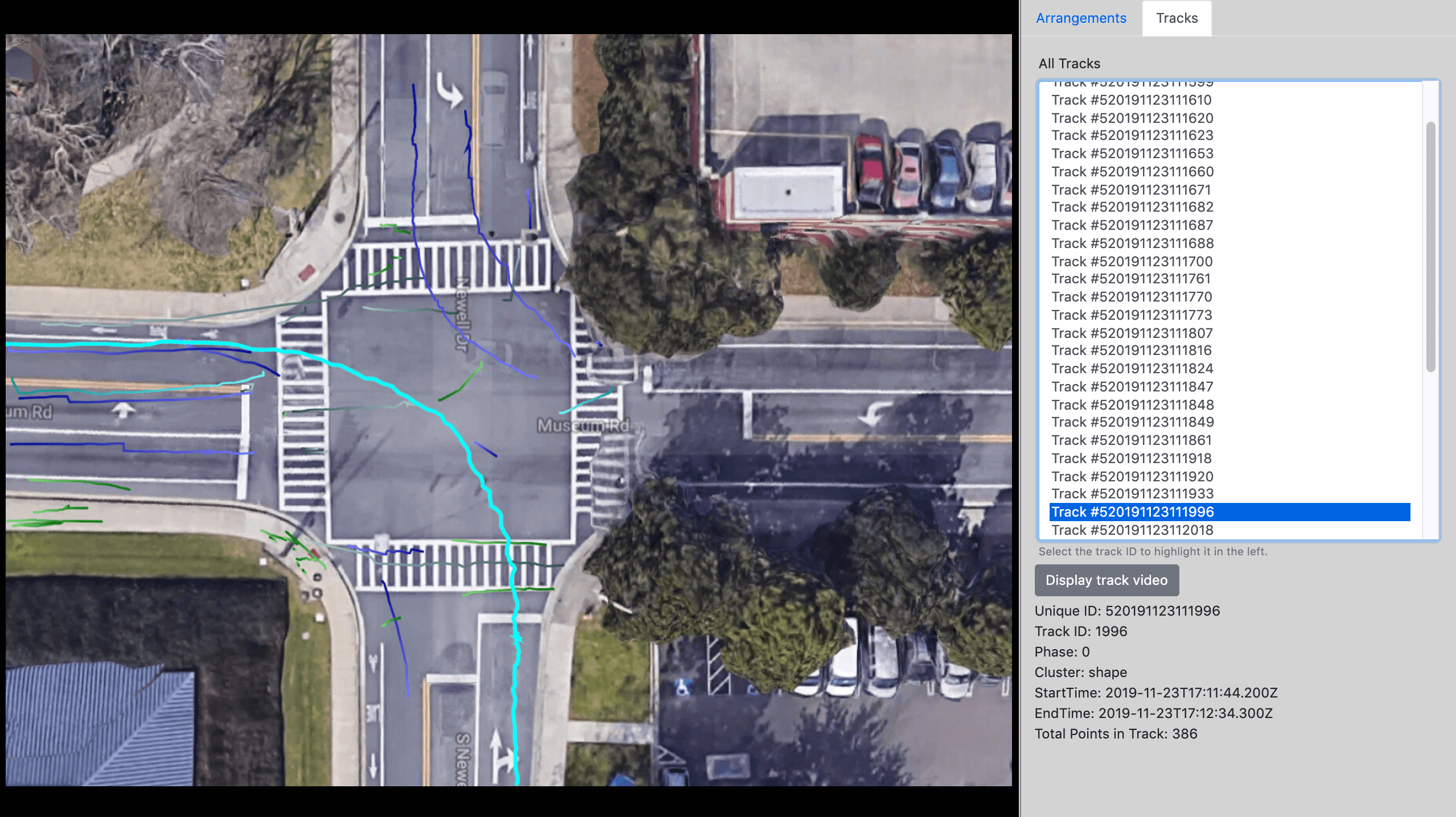

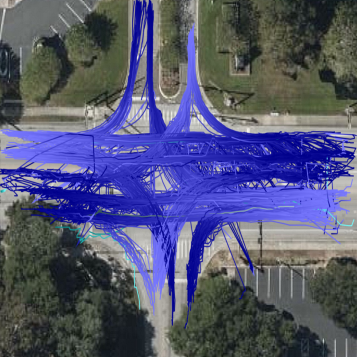

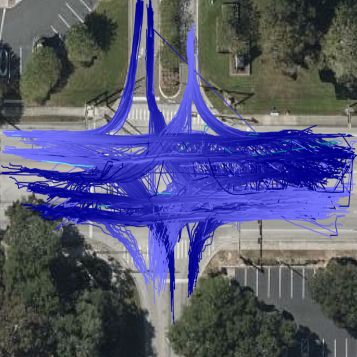

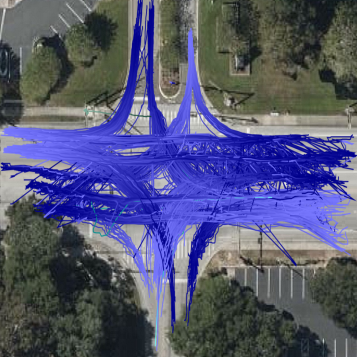

| Figure 2.1.: | The overall pipeline of our two-stream convolutional neural networks for near-miss detection. |

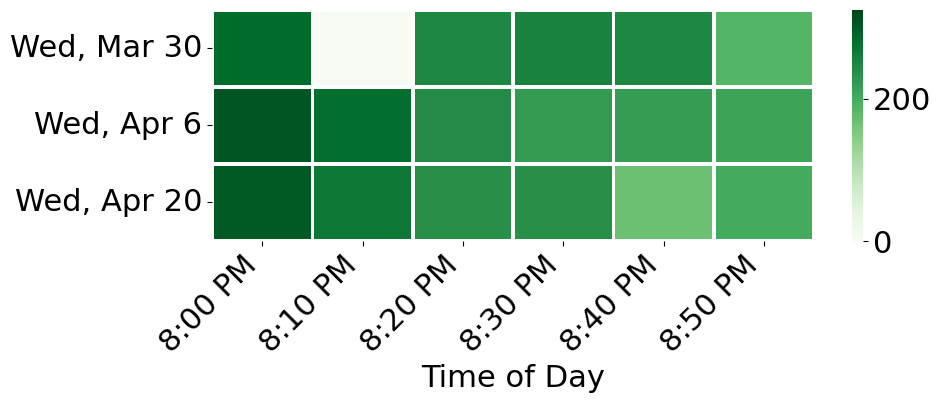

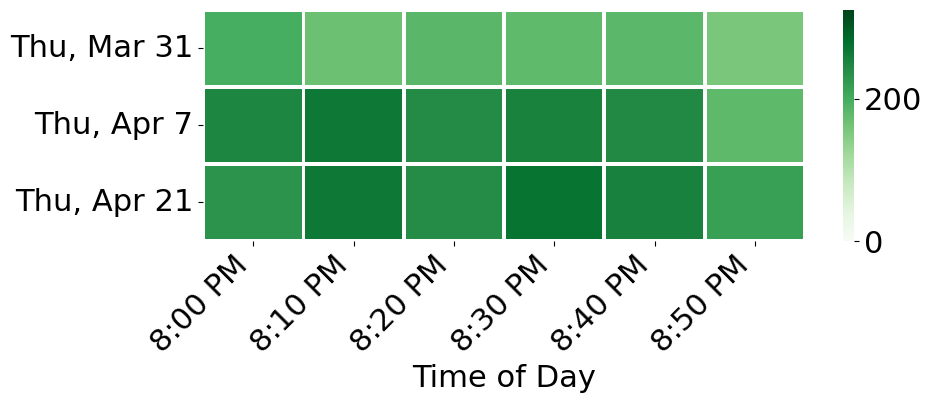

The overall pipeline of our method is depicted in Figure 2.1. The chapter is organized as follows. Section 2.2 describes the background of convolutional neural networks, object detection, and multiple object tracking methods. Section 2.3 describes the overall architecture, methodologies, and implementation of our method. Section 2.4 introduces our traffic near-accident detection dataset (TNAD) and presents a comprehensive evaluation of our approach and other state-of-the-art near-accident detection methods, both qualitatively and quantitatively. Section 2.5 summarizes our contributions and discusses the scope of future work.

Convolutional neural networks (CNNs) have shown strong capabilities in representing objects, thereby boosting the performance of numerous vision tasks, especially compared to traditional features [45]. A CNN is a class of deep neural network that is widely applied in image analysis and computer vision. A standard CNN usually consists of an input layer, an output layer, and multiple hidden layers (e.g., convolutional layers, fully connected layers, pooling layers), as shown in Figure 2.2. The input to the first convolutional layer is the original image $X$. We denote the feature map of the $i$-th convolutional layer as $H_i$, with $H_0 = X$. Then $H_i$ can be described as

$$H_i = f\left(H_{i-1} \otimes W_i + b_i\right) \tag{2.1}$$

where $W_i$ is the weight of the $i$-th convolutional kernel applied to the $(i-1)$-th image or feature map, $\otimes$ is the convolution operation, and $b_i$ is the bias added to the output of the convolution. The feature map of the $i$-th layer is then obtained by applying a standard nonlinear activation function $f$. We briefly describe a $32 \times 32$ RGB image with a simple ConvNet for CIFAR-10 image classification [46]:

In this way, CNNs transform the original image into multiple high-level feature representations layer by layer, obtaining class-specific outputs or scores.
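To make the layer-wise computation concrete, the following pure-Python sketch applies one convolutional layer to a tiny single-channel image: a kernel is slid over the input, a bias is added, and a nonlinearity is applied. The 3 × 3 input, the 2 × 2 kernel, the choice of ReLU as the activation, and the function names are all illustrative assumptions; as in most deep learning libraries, the "convolution" here is implemented as cross-correlation (no kernel flip).

```python
def conv2d_valid(image, kernel, bias):
    """Slide `kernel` over `image` (stride 1, no padding) and add `bias`."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = bias
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Element-wise nonlinearity (ReLU is an assumed choice of activation)."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# H0 = X: a toy 3x3 single-channel "image".
X = [[1.0, 2.0, 0.0],
     [0.0, 1.0, 3.0],
     [2.0, 1.0, 1.0]]
W1 = [[1.0, 0.0],
      [0.0, -1.0]]
b1 = 0.5

H1 = relu(conv2d_valid(X, W1, b1))  # feature map of the first layer
print(H1)
```

Stacking several such layers, each taking the previous feature map as input, gives the layer-by-layer transformation described above.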

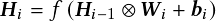

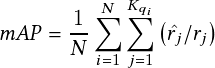

The real-time You Only Look Once (YOLO) detector [24] is an end-to-end deep learning approach to object detection that does not use region proposals. Its pipeline is relatively straightforward: given an input image, YOLO passes it through the neural network only once, as its name implies, and outputs the detected bounding boxes and class probabilities. Figure 2.4 illustrates the detection model and system of YOLO. YOLO is orders of magnitude faster (45 frames per second) than other object detection approaches, which means it can process streaming video in real-time. Compared to other real-time systems, it also achieves a higher mean average precision. In this work, we leverage Darknet-19, the classification model used as the basis of YOLOv2 [47], an extension of YOLO. Darknet-19 consists of 19 convolutional layers and five max-pooling layers, with batch normalization used to stabilize training, speed up convergence, and regularize the model [48].

SORT [49] is a simple, popular, and fast multiple object tracking (MOT) algorithm. Its core idea is to combine Kalman filtering [50] with frame-by-frame data association. The data association is implemented with the Hungarian method [51] by measuring bounding box overlap. With this rudimentary combination, SORT achieves state-of-the-art performance compared to other online trackers. Moreover, due to its simplicity, SORT can update at a rate of 260 Hz on a single machine, which is over 20 times faster than other state-of-the-art trackers.

DeepSORT [52] is an extension of SORT [49]. It integrates appearance information to improve the performance of SORT by adding a pre-trained association metric. DeepSORT resolves many of the identity switches that occur in SORT and can track objects through longer periods of occlusion. During online application, the measurement-to-track association is established in visual appearance space using nearest-neighbor queries.

This section presents our computer vision-based two-stream architecture for real-time near-miss detection. The architecture is primarily driven by real-time object detection and multiple object tracking (MOT). The goal of near-accident detection is to detect likely collision scenarios across video frames and report these near-miss records. Because videos have spatial and temporal components, we divide our framework into a two-stream architecture, as shown in Figure 2.3. The spatial component comprises the appearance information about scenes and objects in individual frames. The temporal component comprises the motion information of objects. For the spatial stream convolutional neural network, we utilize a standard convolutional network designed for state-of-the-art object detection [24] to detect individual vehicles and mark near-miss regions at the single-frame level. The temporal stream network takes object candidates from the object detection CNN and integrates their appearance information with a fast MOT method to extract motion features and compute trajectories. When two trajectories of individual objects intersect or come closer than a certain threshold (whose estimation is described below), we label the region covering the two entities as a high-probability near-miss area. Finally, we take the average near-miss likelihood of the spatial and temporal stream networks and report the near-miss record.
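The proximity test and score averaging described above can be sketched as follows. This is only an illustration under stated assumptions: the trajectory representation (frame index mapped to a box center), the threshold value `DIST_THRESHOLD`, the example scores, and the function names are hypothetical and are not the values or interfaces used in our system.

```python
import math


def min_trajectory_distance(track_a, track_b):
    """Smallest center-to-center distance over the frames both tracks share.

    Each track maps a frame index to an (x, y) center in pixels.
    """
    shared = sorted(set(track_a) & set(track_b))
    if not shared:
        return math.inf  # the two objects never co-occur
    return min(math.dist(track_a[f], track_b[f]) for f in shared)


def fuse_scores(spatial_score, temporal_score):
    """Average the near-miss likelihoods of the two streams."""
    return 0.5 * (spatial_score + temporal_score)


DIST_THRESHOLD = 20.0  # hypothetical threshold in pixels

car = {0: (0.0, 0.0), 1: (10.0, 0.0), 2: (20.0, 0.0)}
bike = {1: (10.0, 40.0), 2: (20.0, 12.0), 3: (30.0, 0.0)}

closest = min_trajectory_distance(car, bike)
is_candidate = closest < DIST_THRESHOLD  # flag the pair as a near-miss region
score = fuse_scores(0.5, 1.0)            # hypothetical per-stream likelihoods
print(closest, is_candidate, score)
```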

Each stream in our framework is implemented using a deep convolutional neural network, and the near-accident scores of the two streams are combined by averaging. Because our spatial stream ConvNet is essentially an object detection architecture, we base it on recent advances in object detection, namely the YOLO detector [24], and pre-train the network from scratch on our dataset containing multiscale drone, fisheye, and simulation videos. As most of our videos show traffic scenes with vehicles and movement captured in a top-down view, we specify vehicle classes such as motorbike, car, bus, and truck as the object classes for training the detector. Additionally, near-misses or collisions can be detected from single still frames or from stopped vehicles associated with an accident, even at the beginning of a video. Therefore, we train our detector to localize these likely near-miss scenarios. Since static appearance is a valuable cue, the spatial stream network performs object detection by operating only on individual video frames.

The spatial stream network regresses the bounding boxes and predicts the class probabilities associated with these boxes using a simple end-to-end convolutional network. It first splits the image into an $S \times S$ grid. For each grid cell,

| Figure 2.4.: | Object detection pipeline of our spatial stream [24]: (1) resizes the input video frame, (2) runs convolutional network on the frame, and (3) thresholds the resulting detection by the model’s confidence. |

For each bounding box, the CNN outputs a class probability and offset values for the bounding box. It then selects the bounding boxes whose class probability is above a threshold value and uses them to locate the object within the image. In essence, each bounding box contains five elements: $(x, y, w, h)$ and a box confidence. The coordinates $(x, y)$ represent the box's center relative to the bounds of the grid cell. The parameters $(w, h)$ are the width and height of the object. These elements are normalized such that $x$, $y$, $w$, and $h$ lie in the interval $[0, 1]$. The intersection over union (IoU) between the predicted bounding box and the ground truth box is used in the confidence prediction, which reflects both the likelihood that the box contains an object (objectness) and the accuracy of the bounding box. The mathematical definitions of the scoring and probability terms are:

$$
\begin{aligned}
\text{box confidence score} &\equiv \Pr(\text{object}) \cdot \text{IoU} \\
\text{conditional class probability} &\equiv \Pr(\text{class}_i \mid \text{object}) \\
\text{class confidence score} &\equiv \Pr(\text{class}_i) \cdot \text{IoU} \\
&= \text{box confidence score} \times \text{conditional class probability}
\end{aligned}
$$

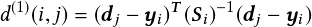

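where $\Pr(\text{object})$ is the probability that the box contains an object, IoU is the intersection over union between the predicted and ground truth boxes, $\Pr(\text{class}_i)$ is the probability that the object belongs to $\text{class}_i$, and $\Pr(\text{class}_i \mid \text{object})$ is the probability that the object belongs to $\text{class}_i$ given that an object is present. The network architecture of the spatial stream contains 24 convolutional layers followed by two fully connected layers, reminiscent of AlexNet and even earlier convolutional architectures. The last convolutional layer outputs a $(7, 7, 1024)$ tensor, which is flattened. Two fully connected layers then perform a linear regression to make the bounding box predictions, and the final predictions are obtained by thresholding the box confidence scores. The final loss adds the localization (the 1st and 2nd terms), confidence (the 3rd and 4th terms), and classification (the 5th term) losses together. The objective function is

$$
\begin{aligned}
\mathcal{L} ={}& \lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left[ (x_i - \hat{x}_i)^2 + (y_i - \hat{y}_i)^2 \right] \\
&+ \lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left[ \left(\sqrt{w_i} - \sqrt{\hat{w}_i}\right)^2 + \left(\sqrt{h_i} - \sqrt{\hat{h}_i}\right)^2 \right] \\
&+ \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left( C_i - \hat{C}_i \right)^2 \\
&+ \lambda_{\text{noobj}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{noobj}} \left( C_i - \hat{C}_i \right)^2 \\
&+ \sum_{i=0}^{S^2} \mathbb{1}_{i}^{\text{obj}} \sum_{c \in \text{classes}} \left( P_i(c) - \hat{P}_i(c) \right)^2
\end{aligned}
\tag{2.2}
$$

where $\mathbb{1}_{i}^{\text{obj}}$ denotes whether an object appears in cell $i$, $\mathbb{1}_{ij}^{\text{obj}}$ denotes that the $j$-th bounding box predictor in cell $i$ is "responsible" for that prediction, and $\mathbb{1}_{ij}^{\text{noobj}}$ denotes that the predictor is not responsible for any object. The remaining variables are as follows. $\lambda_{\text{coord}}$ and $\lambda_{\text{noobj}}$ are the weights of the loss from the bounding box coordinate predictions and of the loss from the confidence score predictions for boxes without objects, respectively. $S$ is the number of cells into which an image is split along each axis, resulting in $S \times S$ cells. $B$ is the number of bounding boxes predicted by each cell. A tuple of four values $(x, y, w, h)$ defines a bounding box, where $x$ and $y$ are the coordinates of its center; $x_i$ ($y_i$, $w_i$, $h_i$) and $\hat{x}_i$ ($\hat{y}_i$, $\hat{w}_i$, $\hat{h}_i$) are the ground truth and prediction, respectively, of $x$ ($y$, $w$, $h$). $C_i$ is the confidence score of cell $i$, whereas $\hat{C}_i$ is the predicted confidence score. Finally, $P_i(c)$ is the conditional probability of cell $i$ containing an object of class $c$, whereas $\hat{P}_i(c)$ is the predicted conditional class probability.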
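The IoU and the class confidence score used in the scoring terms above can be computed as in the short sketch below. For readability, the boxes here are given by their corner coordinates rather than by the center-based $(x, y, w, h)$ parameterization; this format, the function names, and the example numbers are illustrative assumptions.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes (x_min, y_min, x_max, y_max)."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Width and height of the overlap are clamped at zero for disjoint boxes.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def class_confidence(box_confidence, cond_class_probs):
    """Class confidence = box confidence x conditional class probability."""
    return [box_confidence * p for p in cond_class_probs]


predicted = (0.0, 0.0, 2.0, 2.0)
truth = (1.0, 0.0, 3.0, 2.0)
overlap = iou(predicted, truth)          # intersection 2, union 6
scores = class_confidence(0.5, [0.5, 0.25])
print(overlap, scores)
```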

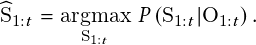

MOT can generally be regarded as a multivariable estimation problem [53]. The objective of MOT can be modeled by performing MAP (maximum a posteriori) estimation to find the optimal sequential states of all the objects from the conditional distribution of the sequential states, given all the observations:

$$\hat{S}_{1:t} = \underset{S_{1:t}}{\arg\max}\; P\left(S_{1:t} \mid O_{1:t}\right) \tag{2.3}$$

where $S_t = (s_t^1, s_t^2, \ldots, s_t^{M_t})$ denotes the states of all $M_t$ objects in the $t$-th frame, and $s_t^i$ denotes the state of the $i$-th object in the $t$-th frame. $S_{1:t} = \{S_1, S_2, \ldots, S_t\}$ denotes the sequential states of all the objects from the first frame to the $t$-th frame. In tracking-by-detection, $o_t^i$ denotes the collected observations for the $i$-th object in the $t$-th frame, and $O_t = (o_t^1, o_t^2, \ldots, o_t^{M_t})$ denotes the collected observations for all $M_t$ objects in the $t$-th frame. $O_{1:t} = \{O_1, O_2, \ldots, O_t\}$ denotes the collected sequential observations of all the objects from the first frame to the $t$-th frame.

Because it takes single-frame inputs, the spatial stream network cannot extract motion features or compute trajectories. To leverage this helpful information, we present our temporal stream network. This ConvNet model implements a tracking-by-detection MOT algorithm [49, 52] with a data association metric that incorporates deep appearance features. Its input is the same original video used by the spatial stream network. Detected object candidates (vehicle classes only) are used for tracking, state estimation, and frame-by-frame data association using the real-time MOT methods SORT [49] and DeepSORT [52]. MOT models the state of each object and describes the motion of objects across video frames. The tracking information allows us to stack the trajectories of moving objects across several consecutive frames, which are useful cues for near-accident detection.

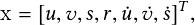

Estimation Model. The state of each target is modeled as

$$\mathbf{x} = [u, v, s, r, \dot{u}, \dot{v}, \dot{s}]^{T} \tag{2.4}$$

where $u$ and $v$ denote the 2D pixel location of the target's center, $s$ denotes the scale of the target's bounding box, and $r$ is its aspect ratio (usually considered constant). When a newly detected bounding box is associated with a target, the box is used to update the target's state, with the velocity components solved via a Kalman filter [50]. If no bounding box is associated with the target, its state is simply predicted by the Kalman filter without correction.
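The following sketch illustrates the state representation and the prediction-without-correction step under a constant velocity model. It follows the SORT formulation [49], in which the state holds the box center, scale, aspect ratio, and the velocities of the first three components; taking the scale to be the box area is an assumption borrowed from that formulation. The covariance propagation and the correction step of the full Kalman filter are deliberately omitted, and the function names are hypothetical.

```python
def bbox_to_state(x_min, y_min, x_max, y_max):
    """Convert a corner-format box to [u, v, s, r, du, dv, ds].

    u, v: box center; s: scale (assumed here to be the box area);
    r: aspect ratio (width / height); velocities start at zero.
    """
    w = x_max - x_min
    h = y_max - y_min
    u = x_min + w / 2.0
    v = y_min + h / 2.0
    return [u, v, float(w * h), w / float(h), 0.0, 0.0, 0.0]


def predict(state):
    """One constant-velocity step; the aspect ratio r is held constant."""
    u, v, s, r, du, dv, ds = state
    return [u + du, v + dv, s + ds, r, du, dv, ds]


initial = bbox_to_state(0, 0, 4, 2)
moved = predict([2.0, 1.0, 8.0, 2.0, 1.0, -0.5, 0.5])
print(initial, moved)
```

When a detection is later associated with the target, a full Kalman filter would blend this prediction with the measured box rather than use the prediction alone.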

Data Association. To assign new detection boxes to existing targets, we predict each target's new location (one predicted box) in the current frame and compute the IoU distance between every new detection box and every predicted box (the detection-to-target overlaps), which forms the assignment cost matrix. The Hungarian algorithm [51] is then applied to this matrix to solve the assignment problem. An assignment is rejected if the detection-to-target overlap is less than a threshold (the minimum IoU, $\text{IoU}_{\min}$). Using the IoU distance of the bounding boxes also handles short-term occlusion caused by passing targets.
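A compact illustration of this association step is given below. To keep the example dependency-free, the optimal one-to-one assignment is found by brute force over permutations, which returns the same optimum as the Hungarian algorithm but is only practical for a handful of boxes; maximizing total IoU is equivalent to minimizing the IoU distance. The threshold of 0.3, the box format, and the function names are illustrative assumptions.

```python
from itertools import permutations


def iou(a, b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def assign(detections, predictions, iou_min=0.3):
    """Return (detection_index, target_index) pairs with maximal total IoU."""
    overlap = [[iou(d, p) for p in predictions] for d in detections]
    n_det, n_pred = len(detections), len(predictions)
    if n_det <= n_pred:
        candidates = (list(enumerate(p))
                      for p in permutations(range(n_pred), n_det))
    else:
        candidates = ([(d, t) for t, d in enumerate(p)]
                      for p in permutations(range(n_det), n_pred))
    best_pairs, best_total = [], -1.0
    for pairs in candidates:
        total = sum(overlap[d][t] for d, t in pairs)
        if total > best_total:
            best_pairs, best_total = pairs, total
    # Reject assignments whose overlap falls below the minimum IoU.
    return [(d, t) for d, t in best_pairs if overlap[d][t] >= iou_min]


predicted_boxes = [(0, 0, 10, 10), (20, 20, 30, 30)]
detected_boxes = [(21, 21, 31, 31), (1, 1, 11, 11), (50, 50, 60, 60)]
matches = assign(detected_boxes, predicted_boxes)
print(sorted(matches))
```

The third detection overlaps no predicted box and is left unmatched, so it would be treated as a potential new target.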

Creation and Deletion of Track Identities. When new objects enter or old objects vanish from the video frames, we must add or remove the corresponding tracks (or objects) to maintain unique identities. We treat any detection that fails to associate with an existing target as a potential untracked object. This new target undergoes a probationary period during which it must be associated with detections to accumulate enough evidence. This strategy prevents the tracking of false positives. A tracked object is removed from the tracking list if it remains undetected for $T_{\text{Lost}}$ frames, which prevents unbounded growth in the number of trackers and limits the localization errors accumulated by predicting over a long duration without correction.
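The track lifecycle described above can be sketched with a small bookkeeping class. The parameter names `min_hits` (length of the probationary period) and `t_lost`, their values, and the class itself are hypothetical and serve only to illustrate the logic.

```python
class Track:
    """Bookkeeping for one track identity (illustrative only)."""

    def __init__(self, track_id, min_hits=3, t_lost=2):
        self.track_id = track_id
        self.min_hits = min_hits  # detections needed to leave probation
        self.t_lost = t_lost      # frames without detection before removal
        self.hits = 1             # created from one unmatched detection
        self.misses = 0           # consecutive frames without a detection

    def mark_matched(self):
        self.hits += 1
        self.misses = 0

    def mark_missed(self):
        self.misses += 1

    @property
    def confirmed(self):
        """True once enough evidence has accumulated."""
        return self.hits >= self.min_hits

    @property
    def expired(self):
        """True once the track has gone undetected for t_lost frames."""
        return self.misses >= self.t_lost


track = Track(track_id=7)
history = [track.confirmed]
track.mark_matched()
track.mark_matched()
history.append(track.confirmed)
track.mark_missed()
history.append(track.expired)
track.mark_missed()
history.append(track.expired)
print(history)
```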

Track Handling and State Estimation. This part is mostly identical to SORT [49]. The tracking scenario is represented in an eight-dimensional state space $(u, v, \gamma, h, \dot{x}, \dot{y}, \dot{\gamma}, \dot{h})$. The pair $(u, v)$ is the bounding box center location, $\gamma$ is the aspect ratio, and $h$ is the height. The remaining components, $(\dot{x}, \dot{y}, \dot{\gamma}, \dot{h})$, are the respective velocities of these quantities in image coordinates. A Kalman filter [50] with a constant velocity motion model and a linear observation model is used, with $(u, v, \gamma, h)$ taken as the direct observation of the object state.

| Figure 2.5.: | Vehicle samples selected from the VeRi dataset [54]. All images are the same size, and we resize them to 64 × 128 for training. Left: Diversity of vehicle colors and types; right: variation of the viewpoints, illuminations, resolutions, and occlusions for the vehicles. |

Data Association. To solve the frame-by-frame association problem, SORT uses the Hungarian algorithm [51], where both motion and appearance information are considered.

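The (squared) Mahalanobis distance is utilized to measure the distance between newly arrived measurements and Kalman states:

$$d^{(1)}(i, j) = (d_j - y_i)^{\top} S_i^{-1} (d_j - y_i), \tag{2.5}$$

where $y_i$ and $d_j$ denote the i-th track distribution and the j-th bounding box detection, respectively, and $S_i$ is the covariance of the i-th track distribution in measurement space. The smallest cosine distance between the i-th track and j-th detection is considered to handle appearance information:

$$d^{(2)}(i, j) = \min \{ 1 - r_j^{\top} r_k^{(i)} \mid r_k^{(i)} \in \mathcal{R}_i \}, \tag{2.6}$$

where $r_j$ is the unit-length appearance descriptor of the j-th detection and $\mathcal{R}_i$ is the set of descriptors stored for the i-th track. Motion and appearance are combined linearly, and a hyperparameter, $\lambda$, controls the influence of each:

$$c_{i,j} = \lambda \, d^{(1)}(i, j) + (1 - \lambda) \, d^{(2)}(i, j). \tag{2.7}$$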
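The following Python sketch illustrates how a motion distance and an appearance distance of the kind described above might be blended into a single association cost. The diagonal covariance, the function names, and the default weight of 0.5 are assumptions made for illustration, not values taken from the text.

```python
import math

def mahalanobis_sq(detection, mean, variances):
    """Squared Mahalanobis distance, assuming a diagonal covariance."""
    return sum((d - m) ** 2 / s for d, m, s in zip(detection, mean, variances))

def cosine_distance(a, b):
    """One minus the cosine similarity of two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm

def appearance_distance(det_feature, track_gallery):
    """Smallest cosine distance between a detection and a track's stored features."""
    return min(cosine_distance(det_feature, f) for f in track_gallery)

def combined_cost(d_motion, d_appearance, lam=0.5):
    """Linear blend of the two distances; lam is the weighting hyperparameter."""
    return lam * d_motion + (1.0 - lam) * d_appearance

d1 = mahalanobis_sq([2.0, 0.0], [0.0, 0.0], [4.0, 1.0])
d2 = appearance_distance([1.0, 0.0], [[0.0, 1.0], [1.0, 0.0]])
cost = combined_cost(d1, d2)
```

Setting `lam` close to 1 trusts the Kalman prediction more, while a value close to 0 relies on appearance, which is the useful regime after long occlusions.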

Matching Cascade. Previous methods tried to solve measurement-to-track associations globally, while SORT adopts a matching cascade introduced in [52] to solve a series of subproblems. In some situations, when an object is occluded for a more extended period, the subsequent Kalman filter [50] predictions would increase the uncertainty associated with the object location. Consequently, the probability mass will spread out in the state space and the observation likelihood will decrease. The measurement-to-track distance should be increased by considering the spread of probability mass. Therefore, objects seen more frequently are prioritized in the matching cascade strategy to encode the notion of probability spread in the association likelihood.

Metric learning and various hand-crafted features are widely used in person re-identification problems. We apply a cosine metric learning method to train a neural network for vehicle re-identification and use it to make our temporal stream more robust regarding tracking performance. Metric learning aims to solve the clustering problem by constructing an embedding in which the metric distance corresponding to the same identity is likely closer than features from different identities. The cosine metric measures the degree of similarity by calculating the cosine distance between two objects. We observe that our tracking algorithm produces switched road user identities in traffic video, especially in crowded traffic scenes or scenes with heavy occlusion. In order to generate accurate and consistent track data, we introduce a deep cosine metric learning method to learn the cosine distance between objects. The cosine distance involves appearance information that provides valuable cues for recovering identities in crowded scenes or after long-term occlusion when motion information is less discriminative. Through this deep network, the feature expression vector obtained by any object is placed in the cluster corresponding to the nearest neighbor. We trained the network on a vehicle re-identification dataset (VeRi dataset) [54] (Figure 2.5) and integrated it as the second metric measure for the assignment problem of our temporal stream.

We aim to use a unified end-to-end framework that automatically learns the best metrics. Compared to the standard distance metric (L1, L2), the learned metric can obtain more discriminative features for re-identification and is more robust to cross-view vehicle images. We adopt the architecture of [55] and train a deep network with a cosine softmax classifier that can generate feature vectors of fixed length (128) for the input images (vehicles). The cosine metric finds the nearest cluster exemplar and matches the vehicle on (sometimes far-flung) different video frames to solve tracking problems in heavy occlusion.

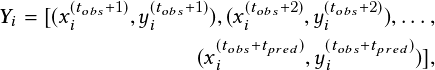

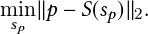

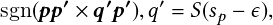

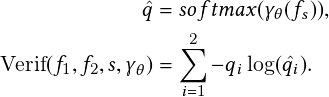

Given a re-identification dataset $\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{N}$ of $N$ training images $x_i \in \mathbb{R}^D$ and associated class labels $y_i \in \{1, \dots, C\}$, deep metric learning is used to find a parameterized encoder function. The deep metric neural network is $r = f_\Theta(x)$ with parameters $\Theta$, which projects the input images $x \in \mathbb{R}^D$ into a feature representation, $r \in \mathbb{R}^d$, that follows a predefined notion of cosine similarity.

The cosine softmax classifier can be adapted from a standard softmax classifier and is expressed as

$$p(y = k \mid r) = \frac{\exp(\kappa \, \tilde{w}_k^{\top} r)}{\sum_{n=1}^{C} \exp(\kappa \, \tilde{w}_n^{\top} r)}, \tag{2.8}$$

where $\kappa$ is a free scale parameter. To generate compact clusters in the feature representation space, the first modification is to apply $\ell_2$ normalization to the final layer of the encoder network so that the representation has unit length, $\|f_\Theta(x)\|_2 = 1, \forall x \in \mathbb{R}^D$. The second modification is to normalize the weights to unit length as well, i.e., $\tilde{w}_k = w_k / \|w_k\|_2, \forall k = 1, \dots, C$. The training of the encoder network can be carried out using the cross-entropy loss as usual. In particular, the authors in [56] have proposed accelerating the convergence of stochastic gradient descent by decoupling the length of the weight vector, $\kappa$, from its direction.
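A cosine softmax classifier with the two normalizations described above might look like the following sketch. The function names and the scale value `kappa=10.0` are assumptions for illustration; in practice the scale would be a learned parameter and the features would come from the encoder network.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine_softmax(feature, weights, kappa=10.0):
    """Class probabilities from scaled cosine similarities.

    feature: raw encoder output; weights: one weight vector per class.
    Both are normalized to unit length, so each logit is kappa * cos(angle).
    """
    r = l2_normalize(feature)
    logits = [kappa * sum(a * b for a, b in zip(l2_normalize(w), r))
              for w in weights]
    m = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = cosine_softmax([3.0, 0.0], [[2.0, 0.0], [0.0, 5.0]])
```

Because both the feature and the class weights are normalized, rescaling the input feature does not change the output, which is what ties the classifier to cosine similarity.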

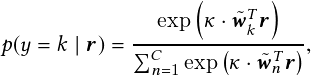

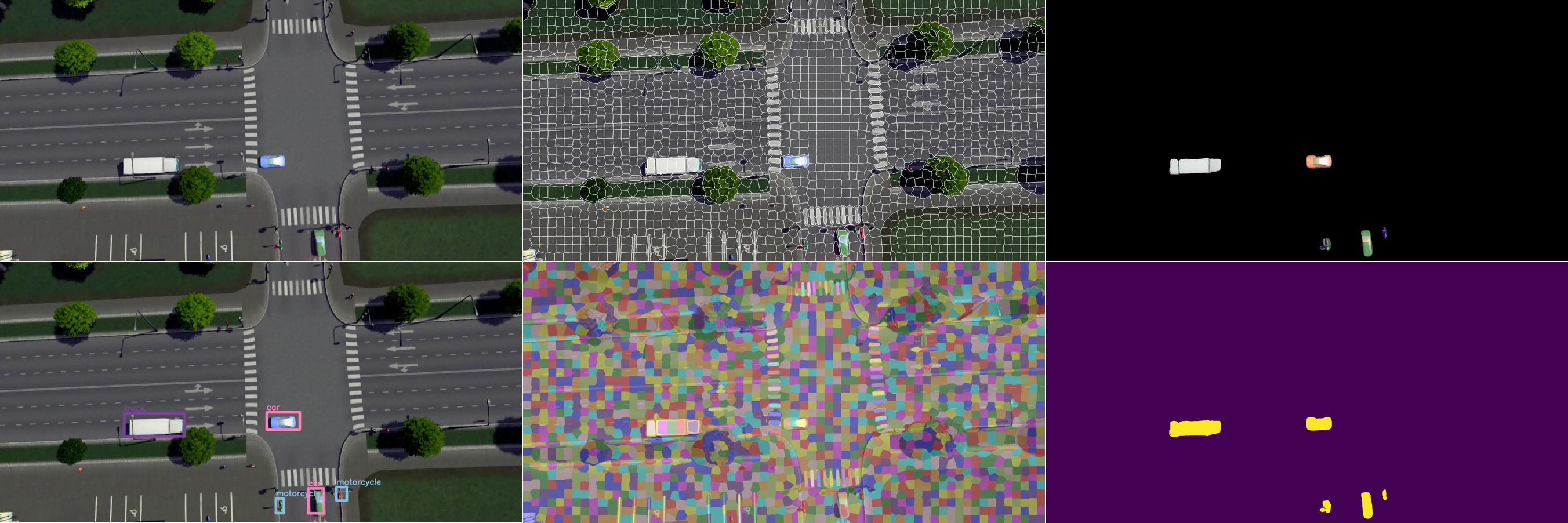

| Figure 2.6.: | 2D image superpixel segmentation using SLIC [57]. Top: original image; bottom-left: SLIC segmentation for drone video (1,600 superpixels); bottom-middle: SLIC segmentation for fisheye video (1,600 superpixels); bottom-right: SLIC segmentation for simulation video (1,600 superpixels). |

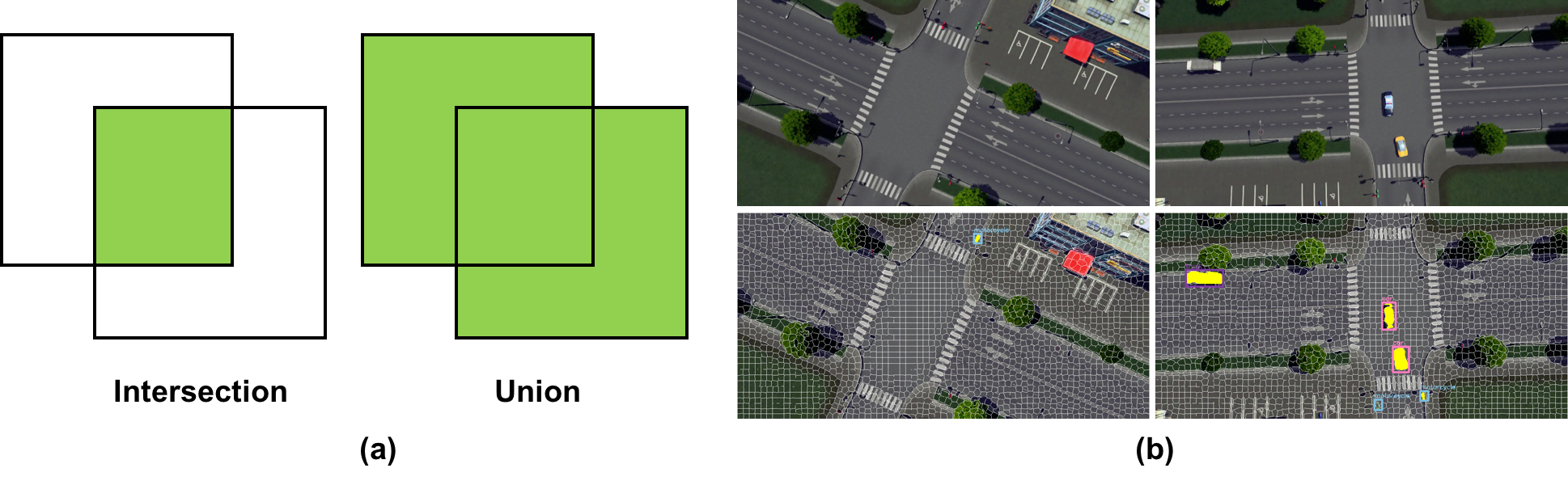

| Figure 2.7.: | An illustration depicting the segmentation mask. (a) An illustration depicting the definitions of intersection and union. (b) Top: original video frames; bottom: object detections and object masks produced by our method. |

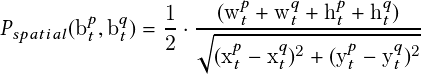

To make our method fully end-to-end, we apply a learning-based dynamic distance threshold, rather than a manually defined one, for near-accident detection. A near-accident is detected if the distance between two objects in the image space is below the threshold. Using object segmentation, we introduce a threshold-learning method that estimates the gap between objects. It is a supervised learning method that uses the gap distance between road users in the temporal stream to determine a near-accident while continuing to update the threshold for better convergence and precision. The straightforward way is to compute the gap using bounding boxes from detections. However, bounding boxes do not represent object boundaries accurately, particularly when rotation is involved. Therefore, we need a more compact representation, so we combine detections with superpixel segmentation on the video frame to obtain an object mask for gap distance estimation.
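As a rough illustration of the thresholding idea, the sketch below measures the gap between two object masks and compares it with a threshold. The set-of-pixels mask representation, the brute-force search, and the function names are assumptions; the threshold-learning step itself is not shown, and the value 50 simply mirrors the initial threshold reported in the experiments.

```python
import math

def gap_distance(mask_a, mask_b):
    """Smallest pixel distance between two masks given as sets of (x, y)."""
    return min(math.dist(p, q) for p in mask_a for q in mask_b)

def is_near_accident(mask_a, mask_b, threshold):
    """Flag a near-accident when the gap falls below the threshold."""
    return gap_distance(mask_a, mask_b) < threshold

mask_a = {(0, 0), (1, 0)}
mask_b = {(4, 4), (4, 0)}
gap = gap_distance(mask_a, mask_b)
flag = is_near_accident(mask_a, mask_b, threshold=50)
```

The brute-force search is quadratic in the number of mask pixels, so a real implementation would likely restrict it to boundary pixels.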

The main steps of our gap estimation method can be summarized as follows:

In our gap estimation methods, the Intersection over Union (IoU) metric of boxes and superpixels is given by

| (2.9) |

where $B_t = (b_t^1, b_t^2, \dots, b_t^{M_t})$ denotes the collected detection observations for all the $M_t$ objects in the t-th frame, and $b_t^i$ denotes the detection for the i-th object in the t-th frame. $B_{1:t} = \{B_1, B_2, \dots, B_t\}$ denotes all the collected sequential detection observations of all the objects from the first frame to the t-th frame. Similarly, $Sp_t = (sp_t^1, sp_t^2, \dots, sp_t^{N_t})$ denotes all the $N_t$ superpixels in the t-th frame, and $sp_t^i$ denotes the i-th superpixel in the t-th frame. $Sp_{1:t} = \{Sp_1, Sp_2, \dots, Sp_t\}$ denotes all the collected sequential superpixels from the first frame to the t-th frame.

In 2D superpixel segmentation, the popular SLIC (simple linear iterative clustering) [57] and ultrametric contour map (UCM) [58] methods have established themselves as the state-of-the-art. Recently, we have seen deep neural networks [59] and generative adversarial networks (GANs) [60] integrated with these methods. In our architecture, we adopt the GPU-based SLIC (gSLICr) [22] approach to produce the tessellation of the image data to achieve an excellent frame rate (400 fps). SLIC is widely applicable to many computer vision applications, such as segmentation, classification, and object recognition, often achieving state-of-the-art performance. The contours forming the homogeneous superpixels are shown in Figure 2.6. In Figure 2.7, we illustrate the definition of intersection and union and present some object masks we generated from detections and superpixels.

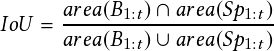

| Figure 2.8.: | The stacking trajectories extracted from multiple object tracking of the temporal stream. Consecutive frames and the corresponding displacement vectors are shown with the same color. |

The most typical motion cue is optical flow, which is widely utilized in video processing tasks such as video segmentation [61]. A trajectory is a data sequence containing several concatenated state vectors from tracking and an indexed sequence of positions and velocities over a given time window.

When utilizing the multiple object tracking algorithm, we compute the center of each object in several consecutive frames to form stacking trajectories as our motion representation. These stacking trajectories can provide accumulated information through image frames, including the number of objects, their motion history, and the timing of their interactions, such as near-accidents. We stack the trajectories of all objects for $L$ consecutive frames, as illustrated in Figure 2.8, where $p_t^i$ denotes the center position of the i-th object in the t-th frame. $P_t = (p_t^1, p_t^2, \dots, p_t^{M_t})$ denotes trajectories of all the $M_t$ objects in the t-th frame. $P_{1:t} = \{P_1, P_2, \dots, P_t\}$ denotes the sequence of trajectories of all the objects from the first frame to the t-th frame. For $L$ consecutive frames, the stacking trajectories are defined by the sequence

| (2.10) |

$O_t = (o_t^1, o_t^2, \dots, o_t^{M_t})$ denotes the collected observations for all the $M_t$ objects in the t-th frame. $O_{1:t} = \{O_1, O_2, \dots, O_t\}$ denotes all the collected sequential observations of all the objects from the first frame to the t-th frame. We use a simple detection algorithm that finds collisions between simplified forms of the objects using the center of bounding boxes.
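The stacking of object centers over a window of consecutive frames could be accumulated as in the sketch below. The class and method names, the dictionary input keyed by object ID, and the box format `(x1, y1, x2, y2)` are assumptions for illustration.

```python
from collections import defaultdict, deque

def box_center(box):
    """Center of a box given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)

class TrajectoryStack:
    """Keeps the last `length` center positions for every tracked object."""

    def __init__(self, length):
        # A bounded deque silently discards the oldest center once full.
        self.history = defaultdict(lambda: deque(maxlen=length))

    def update(self, tracked_boxes):
        """tracked_boxes maps object ID -> box for the current frame."""
        for obj_id, box in tracked_boxes.items():
            self.history[obj_id].append(box_center(box))

    def trajectory(self, obj_id):
        """Centers of one object, oldest first."""
        return list(self.history[obj_id])

stack = TrajectoryStack(length=2)
for frame in [{1: (0, 0, 2, 2)}, {1: (2, 0, 4, 2)}, {1: (4, 0, 6, 2)}]:
    stack.update(frame)
recent = stack.trajectory(1)
```

Differences between consecutive centers in a trajectory give the displacement vectors that the temporal stream uses as its motion cue.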

Our algorithm is depicted in Algorithm 1. Once a collision is detected, we set the region covering collision-associated objects as a new bounding box with a class probability of near-accident as 1. We can obtain a final confidence measure of near-accident detection by averaging the near-accident probabilities from the spatial stream network and the temporal stream network.

Here, we present qualitative and quantitative evaluations regarding object detection, MOT, and near-accident detection performance. We also compare other methods with our framework.

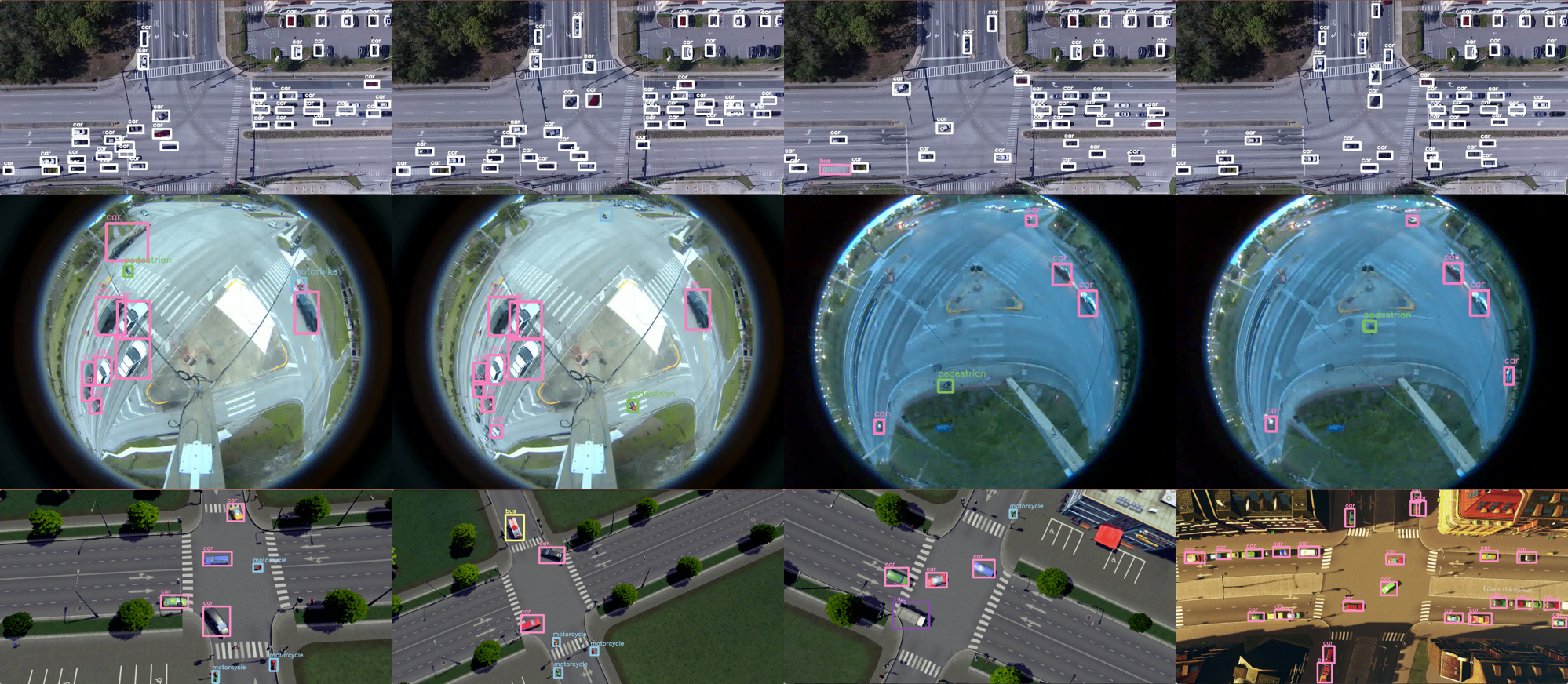

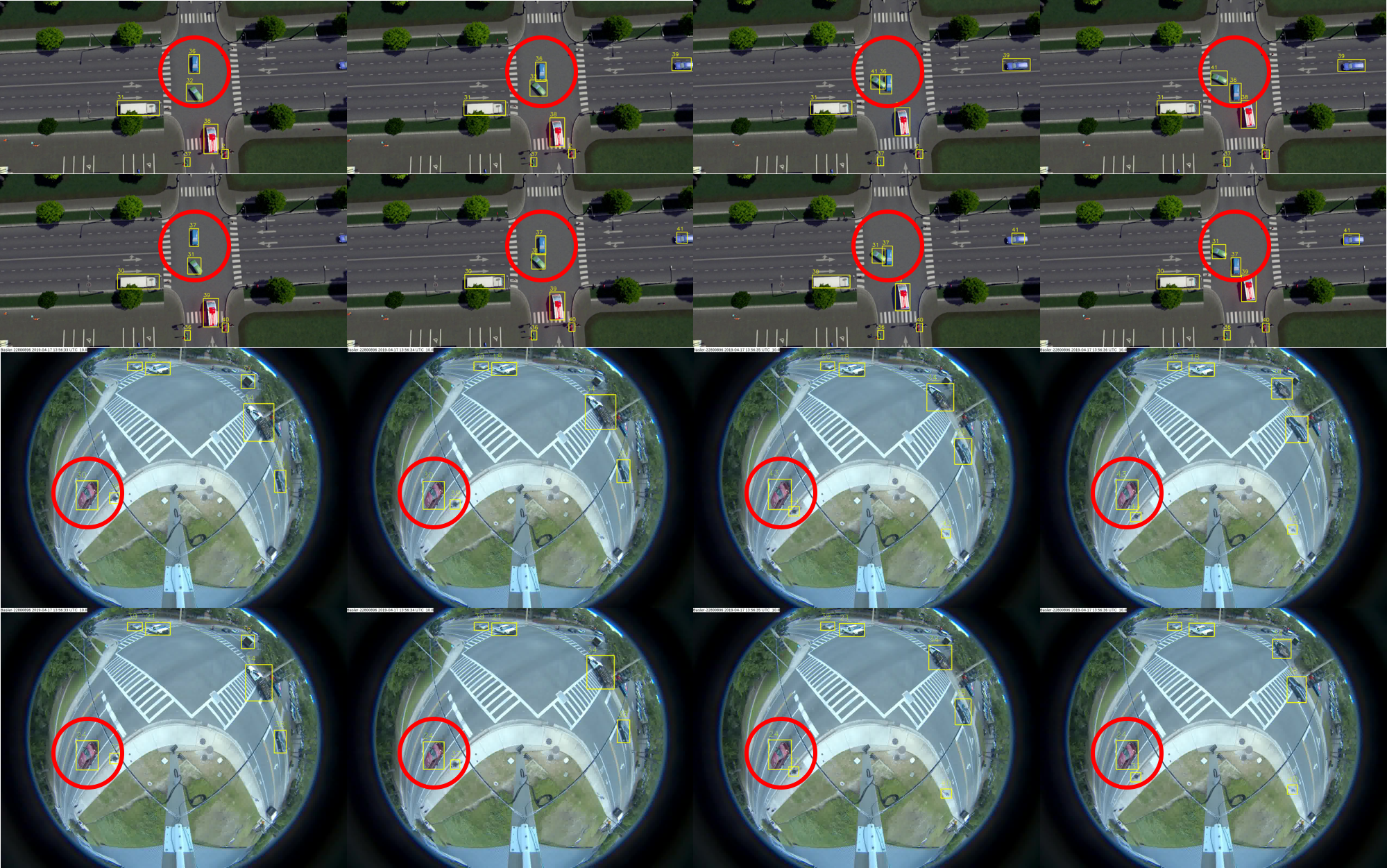

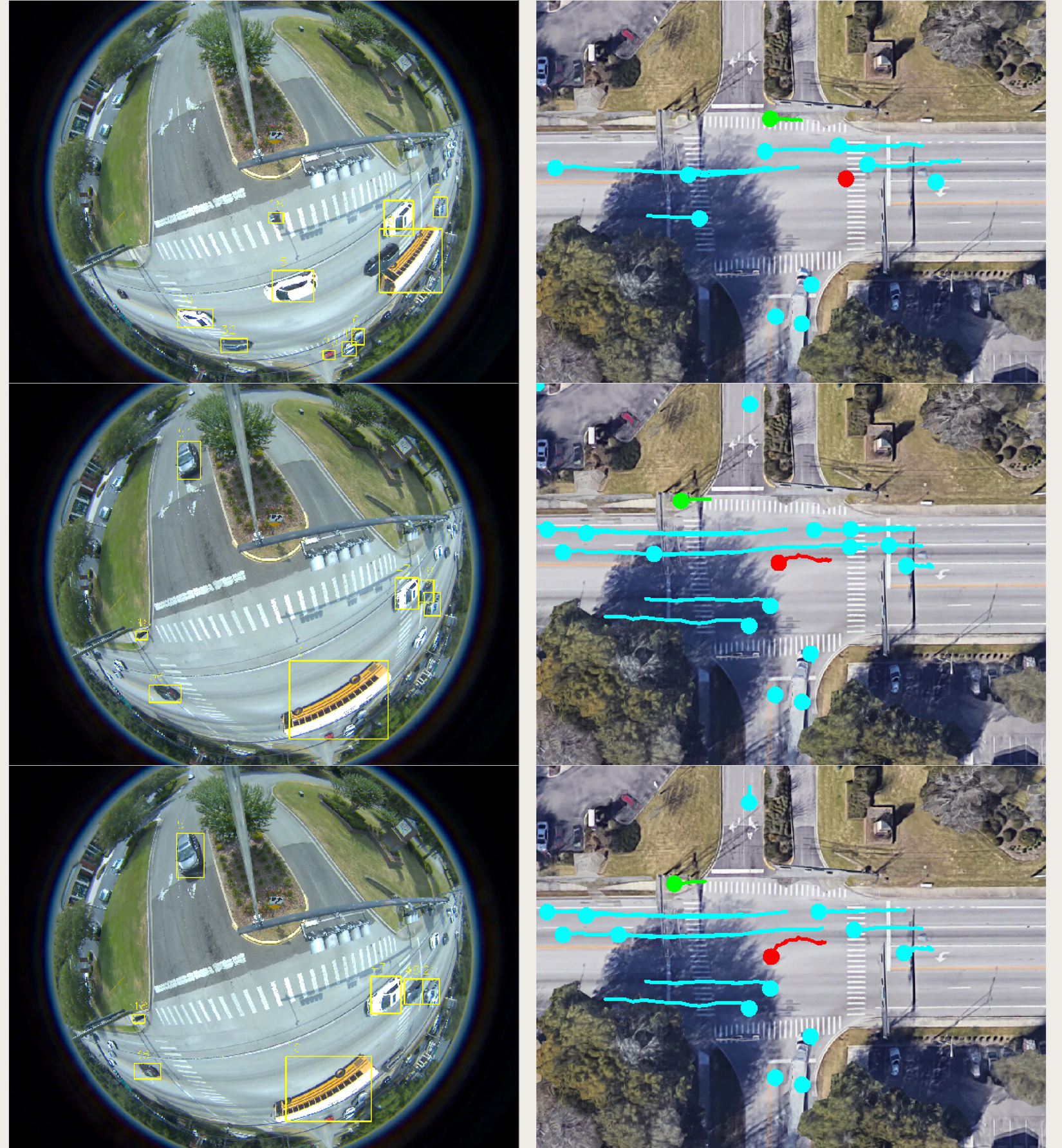

| Figure 2.9.: | Samples of traffic near-accident: Our data consists of many diverse intersection surveillance videos and near-accidents (cars and motorbikes). Yellow rectangles and lines represent the same object in video from multiple cameras. White circles represent the near-accident regions. |

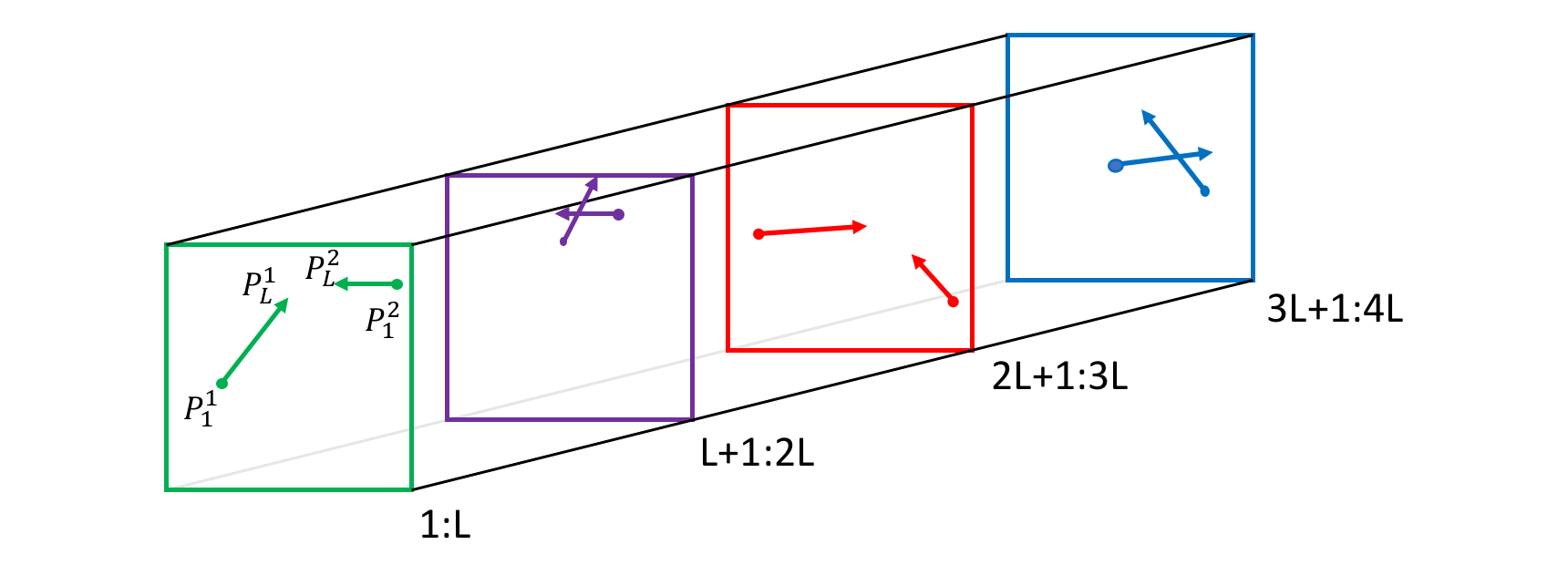

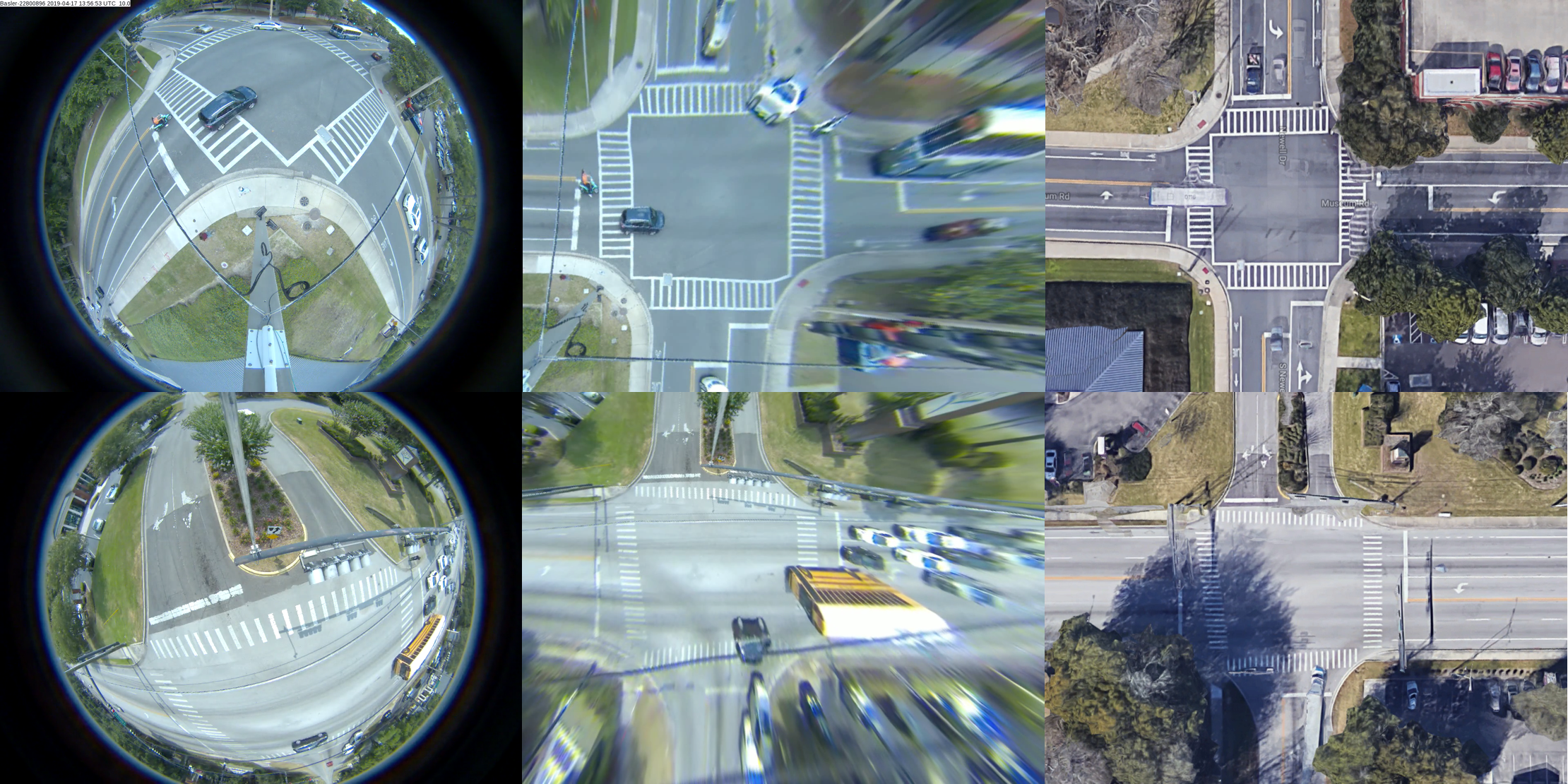

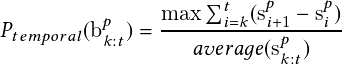

To the best of our knowledge, there is no comprehensive traffic near-accident dataset containing top-down-view videos, such as drone or UAV videos, or omnidirectional camera videos for traffic analysis. Therefore, we have built our own traffic near-accident dataset (TNAD), depicted in Figure 2.9. Intersections tend to experience more near-accidents, and potentially more severe ones, due to factors such as angle and turning collisions. The traffic near-accident dataset contains three types of video data from traffic intersections that could be utilized for near-accident detection and other traffic surveillance tasks, including turn movement counting.

The first type is drone video that monitors an intersection with a top-down view. The second type is real traffic video acquired by omnidirectional fisheye cameras that monitor small or large intersections. Such cameras are widely used in transportation surveillance. These video data can be directly used as input for our vision-based intelligent framework. Furthermore, preprocessing and fisheye correction can be applied for better surveillance performance. The third type is video from game-engine simulations, which lets us accumulate near-accident samples for training. The dataset consists of 106 videos with a total duration of over 75 minutes, with frame rates between 20 and 50 fps. The drone and fisheye surveillance videos were recorded in Gainesville, Florida, at several intersections. Our videos are more challenging than videos in other datasets for the following reasons:

We manually annotated the spatial and temporal locations of near-accidents, the still or moving objects, and their vehicle class in each video. Thirty-two videos with sparsely sampled frames (only 20% of the frames in these 32 videos are used for supervision) were used, but only for training the object detector. The remaining 74 videos were used for testing.

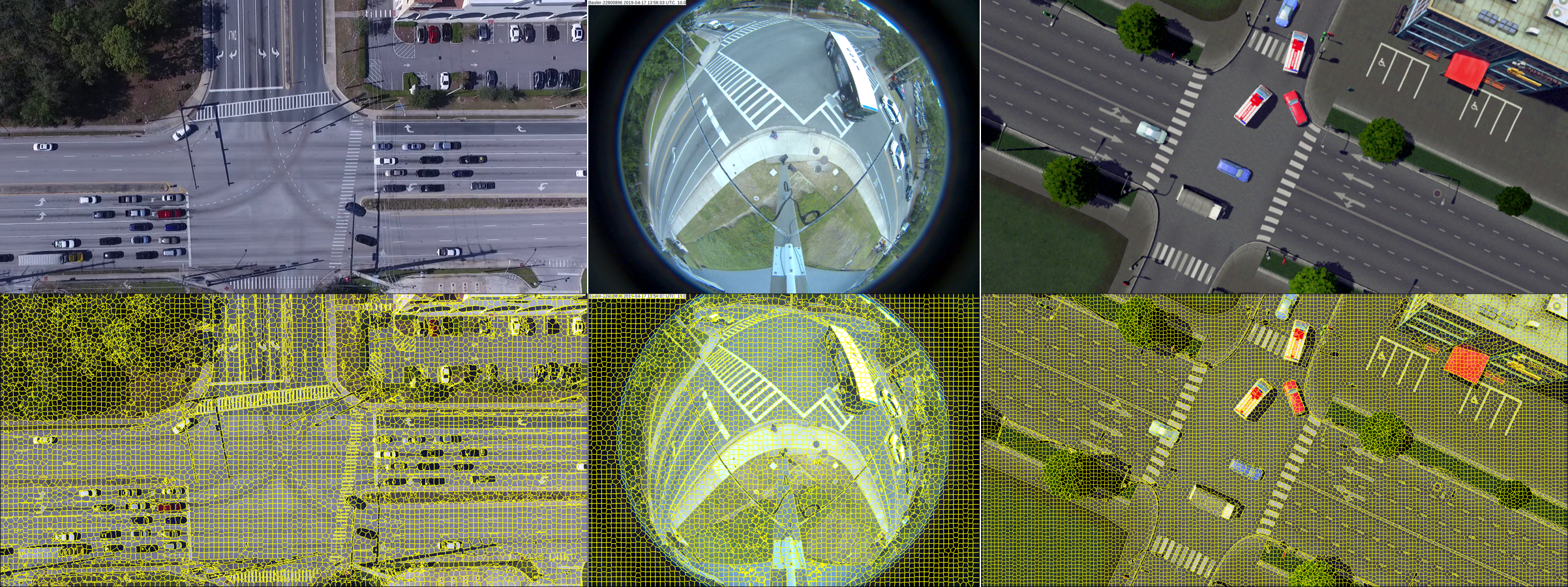

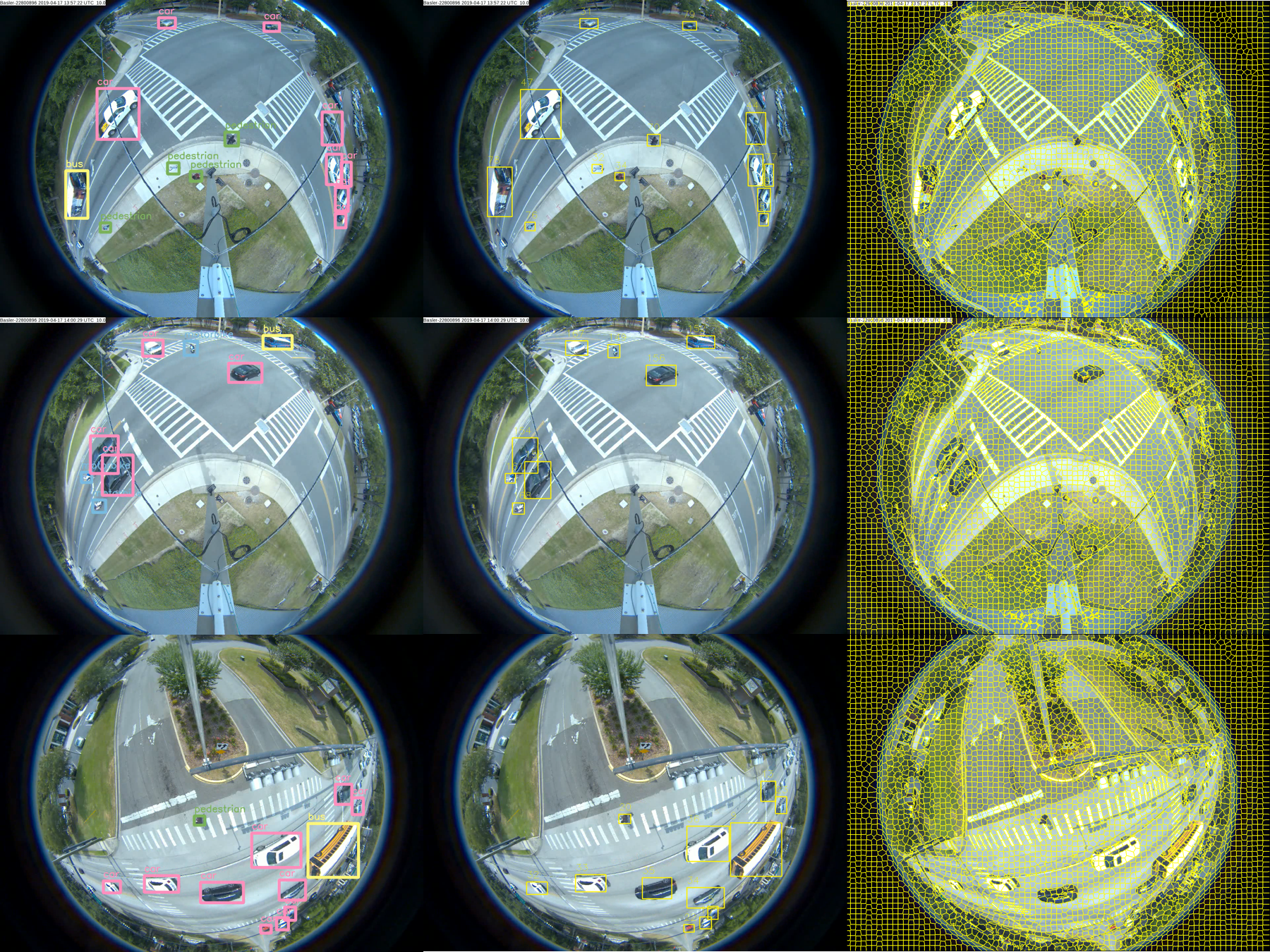

| Figure 2.10.: | Object detection result samples of our spatial network on the TNAD dataset. Top: samples for drone video; middle: samples for fisheye video; bottom: samples for simulation video. |

We have large amounts of fisheye traffic videos from across the city. The fisheye surveillance videos were recorded from real traffic data in Gainesville. We collected 29 single-camera fisheye surveillance videos and 19 multi-camera fisheye surveillance videos monitoring a large intersection. We conducted two experiments, one directly using these raw videos as input for our system and another in which we first preprocessed the video to correct fisheye distortion and then fed them into our system. As the original surveillance video has many visual distortions, especially near the circular boundaries of the cameras, our system performed better on these after preprocessing. In this chapter, we do not discuss issues related to fisheye unwarping, leaving these for future work.

For large intersections, two fisheye cameras placed opposite each other are used for surveillance, showing almost half the roads and real traffic. We apply a simple object-level stitching method by assigning the object identifier for the same objects across the left and right videos using similar features and appearing and vanishing positions.

We adopt Darknet-19 [52] for classification and detection with DeepSORT, using a data association metric that combines deep appearance features. We implement our framework on TensorFlow and perform multiscale training and testing with a single GPU (NVIDIA Titan X Pascal). Training a single spatial convolutional network takes one day on our system with one NVIDIA Titan X Pascal card. We use the same training strategy for classification and detection training as YOLO9000 [52]. We train the network on our dataset with four classes of vehicles (motorbike, bus, car, and truck) for 160 epochs, using stochastic gradient descent with a starting learning rate of 0.1 for classification and $10^{-3}$ for detection (dividing it by ten at 60 and 90 epochs), weight decay of 0.0005, and momentum of 0.9 using the darknet neural network framework [52].


| Figure 2.11.: | Tracking and trajectory comparison with Urban Tracker [62] and TrafficIntelligence [63] on drone videos of the TNAD dataset. Left: tracking results of Urban Tracker [62] (BSG with Multilayer and Lobster Model); middle: tracking results of TrafficIntelligence [63]; right: tracking results of our temporal stream network. |

We present some example experimental results of object detection, MOT, and near-accident detection on our traffic near-accident dataset (TNAD) for drone, fisheye, and simulation videos. For object detection (Figure 2.10), we present some detection results of our spatial network with multiscale training based on YOLOv2 [47]. These visual results demonstrate that the spatial stream is able to detect and classify road users with reasonable accuracy, even if the original fisheye videos have significant distortions and occlusions. Unlike for drone and simulation videos, we have added pedestrian detection on real traffic fisheye videos. As Figure 2.10 shows, our detector performs well on pedestrian detection for daylight and dawn fisheye videos. The vehicle detection capabilities are good on top-down view surveillance videos, even for small objects. In addition, we can achieve a fast detection rate of 20 to 30 frames per second. Overall, this demonstrates the effectiveness of our spatial neural network.

| Figure 2.12.: | Multiple Object Tracking (MOT) comparisons of our baseline temporal stream (SORT) with cosine metric learning-based temporal stream. Row 1: results from SORT (ID switching issue); row 2: results from cosine metric learning + SORT (ID consistency); row 3: results from SORT (ID switching issue); row 4: results from cosine metric learning + SORT (ID consistency). |

For MOT (Figure 2.11), we present a comparison of our temporal network based on DeepSORT [52] with Urban Tracker [62] and TrafficIntelligence [63]. We also present more visual MOT comparisons of our baseline temporal stream (DeepSORT) with the cosine metric learning-based temporal stream (Figure 2.12). For the tracking part, we use a tracking-by-detection paradigm; thus, our methods can handle still objects and measure their state. This is especially useful because Urban Tracker [62] and TrafficIntelligence [63] can only track moving objects. On the other hand, Urban Tracker [62] and TrafficIntelligence [63] can compute dense trajectories of moving objects with reasonable accuracy, but they have a slower tracking speed of around one frame per second. Our two-stream convolutional networks can do spatial and temporal localization for accident detection for diverse accident regions involving cars and motorbikes. The three subtasks (object detection, MOT, and near-accident detection) consistently achieve real-time performance at a high frame rate of 40 to 50 frames per second, depending on the frame resolution (e.g., 50 fps for 960 × 480 image frames).

| Figure 2.13.: | Object segmentation using detections and superpixels. Top-left: original image; top-middle: superpixels generated by SLIC [57]; top-right: final object mask; bottom-left: object detections; bottom-middle: colored superpixels generated by SLIC [57]; bottom right: final binary object mask. |

| Figure 2.14.: | Sample results of tracking, trajectory, and near-accident detection of our two-stream convolutional networks on simulation videos of TNAD dataset. Left: tracking results from the temporal stream; middle: trajectory results from the temporal stream; right: final near-accident detection results from the two-stream convolutional networks. |

In Figure 2.12, we demonstrate the effectiveness of applying cosine metric learning with the temporal stream for solving ID switching (and for the case where new object IDs emerge). For example, the first row shows that the ID of the green car (inside the red circle) changed from 32 to 41. The third row shows that the IDs of the red car and a pedestrian (inside the red circle) changed (from 30 to 43 and from 38 to 44, respectively). The second and fourth rows demonstrate that with cosine metric learning, the IDs of tracks are kept consistent. In Figure 2.13, we show an example of a more accurate and compact road user mask generated by superpixel segmentation and detections for the learning-based gap estimation. With a segmentation mask, we can estimate the gap distance between road users more accurately than by directly using object detections. Segmentation would be more beneficial for fisheye videos, where the distortion is significant and occlusion is heavier. For near-accident detection (Figure 2.14), we present final near-accident detection results along with tracking and trajectories using our two-stream convolutional networks method. Overall, the qualitative results demonstrate the effectiveness of our spatial and temporal networks.

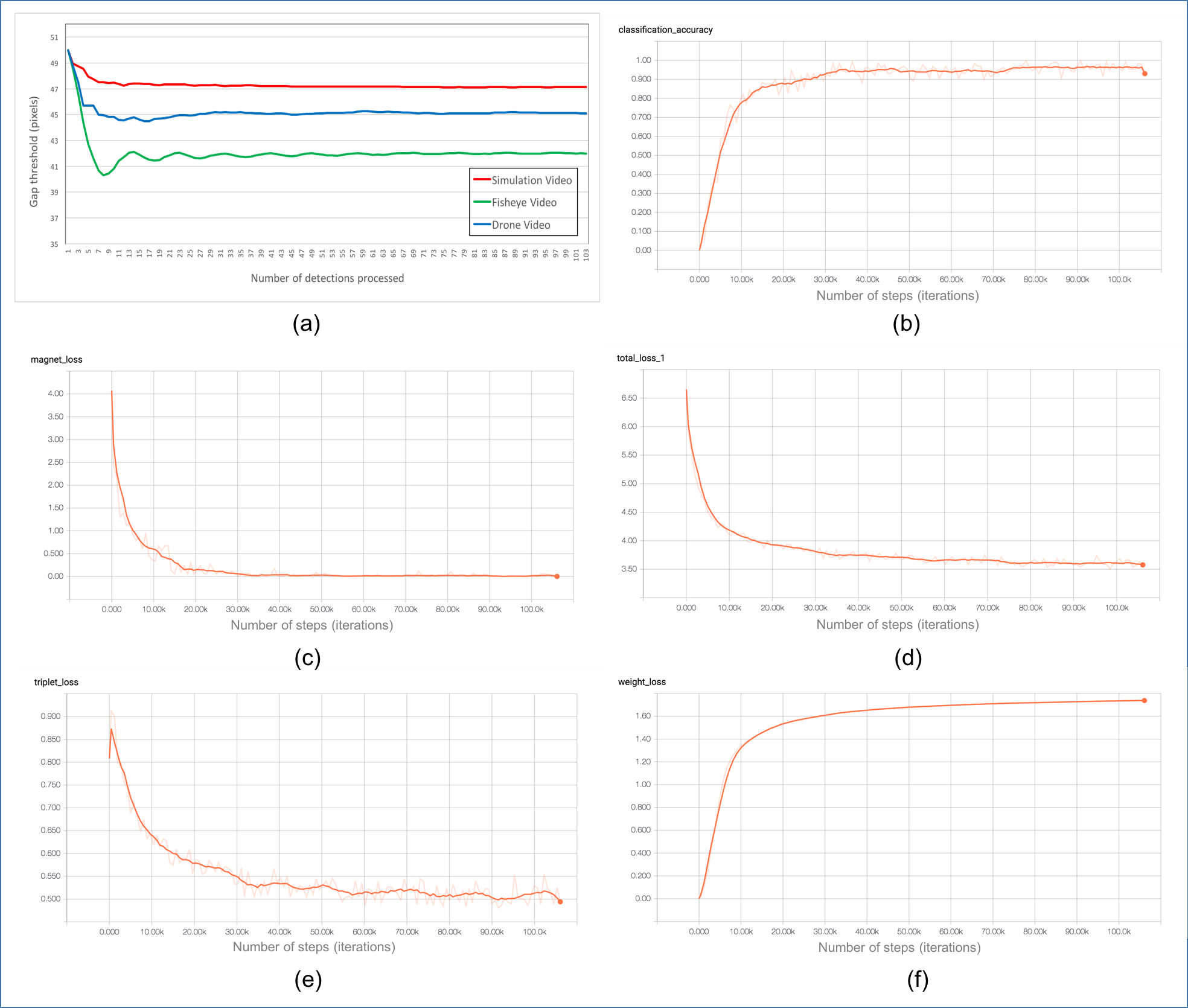

| Figure 2.15.: | Gap estimation and cosine metric learning. (a) Evolution of gap threshold for three types of videos; (b) classification accuracy for training VeRi dataset; (c) evolution of total loss for training; (d) evolution of magnet loss for training; (e) evolution of triplet loss for training; (f) evolution of weight loss for training. |

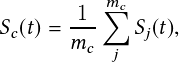

We present timing results for the tested methods in Table 2.1. All experiments have been performed on a single GPU (NVIDIA Titan V). The GPU-based SLIC segmentation [57] has excellent speed and runs from 110 to 400 fps on videos of different resolutions. The two-stream CNNs (object detection, MOT, and near-accident detection) can achieve real-time performance at a high frame rate, 33–50 fps, depending on the frame resolution.

We present some quantitative results for gap estimation and cosine metric learning in Figure 2.15. The results for cosine metric learning have been established after training the network for a fixed number of steps (100,000 iterations). The batch size was set to 120 images, and the learning rate was 0.001. All configurations after training have fully converged, as depicted in Figure 2.15.

| Table 2.1.: | Quantitative evaluation of timing results for the tested methods. |

| Methods | GPU | Drone | Simulation | Fisheye | |
|---|---|---|---|---|---|
| | | 1920 × 960 | 2560 × 1280 | 3360 × 1680 | 1280 × 960 |
| SLIC segmentation | NVIDIA Titan V | 300 fps | 178 fps | 110 fps | 400 fps |
| Two-Stream CNNs | NVIDIA Titan V | 43 fps | 38 fps | 33 fps | 50 fps |

| Table 2.2.: | Quantitative evaluation of all tasks for our two-stream method. |

| Task or Methods | Data | Precision | Recall | F1-score |
|---|---|---|---|---|
| object detection | Simulation | 0.92670 | 0.95255 | 0.93945 |
| | Fisheye | 0.93871 | 0.87978 | 0.90829 |
| multiple object tracking | Simulation | 0.89788 | 0.86617 | 0.88174 |
| | Fisheye | 0.91239 | 0.84900 | 0.87956 |
| | Simulation | 0.91913 | 0.90310 | 0.91105 |
| | Fisheye | 0.92663 | 0.87725 | 0.90127 |
| SLIC Segmentation Mask | Simulation | 0.93089 | 0.81321 | 0.86808 |
| | Simulation | 0.90395 | 0.86022 | 0.88154 |
| | Simulation | 0.83495 | 0.92473 | 0.84108 |
| | Simulation | 0.92105 | 0.94086 | 0.93085 |

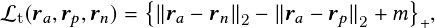

Triplet Loss. The triplet loss for cosine metric learning network [64] is defined by

$$L_t(r_a, r_p, r_n) = \{ \|r_a - r_p\|_2 - \|r_a - r_n\|_2 + m \}_+, \tag{2.11}$$

where $r_a$, $r_p$, and $r_n$ are three samples that include a positive pair, $y_a = y_p$, and a negative pair, $y_a \neq y_n$. With a predefined margin $m \in \mathbb{R}$, the triplet loss demands that the distance between the negative pair be larger than the distance between the positive pair by at least the margin. In this experiment, we introduce a soft-margin-based triplet loss where we replace the hinge of the original triplet loss [65] by a soft plus function, $\log(1 + \exp(x))$, to resolve non-smoothness [66] issues. Further, to avoid potential issues in the sampling strategy, we directly generate the triplets on GPU as proposed by [65].
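A soft-margin triplet loss of the kind described above can be sketched as follows. The function names and the use of the Euclidean distance between embeddings are assumptions for illustration, and the GPU-side triplet generation is not shown.

```python
import math

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def soft_margin_triplet_loss(anchor, positive, negative):
    """Softplus of (anchor-positive distance minus anchor-negative distance)."""
    d_pos = math.dist(anchor, positive)
    d_neg = math.dist(anchor, negative)
    return softplus(d_pos - d_neg)

# A well-separated triplet yields a small loss; swapping roles yields a large one.
loss_good = soft_margin_triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 4.0])
loss_bad = soft_margin_triplet_loss([0.0, 0.0], [3.0, 4.0], [0.0, 1.0])
```

Unlike the hinge, the softplus never becomes exactly zero, so even well-separated triplets keep contributing a small, smooth gradient.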

Magnet Loss. Magnet loss is defined as a likelihood ratio measure that demands the separation of all samples away from the means of other classes. Instead of using a multimodal form in its original proposition [66], we use a unimodal, adapted version of this loss to fit the vehicle re-identification problem:

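$$L_m(y, r) = \left\{ -\log \frac{\exp\left(-\frac{1}{2\hat{\sigma}^2} \|r - \hat{\mu}_y\|_2^2 - m\right)}{\sum_{k \in \bar{C}(y)} \exp\left(-\frac{1}{2\hat{\sigma}^2} \|r - \hat{\mu}_k\|_2^2\right)} \right\}_+, \tag{2.12}$$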

where $\bar{C}(y) = \{1, \dots, C\} \setminus \{y\}$, $m$ is the predefined margin parameter, $\hat{\mu}_y$ represents the sample mean of class $y$, and $\hat{\sigma}^2$ denotes the variance of the samples' separation from their class mean. They are all established for each batch individually on GPU.

For learning the gap threshold with superpixel segmentation and detections, the plot (Figure 2.15, top left) demonstrates the converging evolution of gap distance for three types of video. The initial gap threshold is set to be 50 pixels; the average gap thresholds from experiments are about 47 pixels, 45 pixels, and 42 pixels for simulation video, fisheye video and drone video, respectively.

The evaluation method we use for instance segmentation is quite similar to that for object detection, except that we now calculate the IoU of masks instead of bounding boxes.

Mask Precision, Recall, and F1 Score. To evaluate our collection of predicted masks, we compare each of our predicted masks with each of the available target masks for a given input.
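The comparison of predicted and target masks could be sketched as below. The set-of-pixels mask representation, the greedy one-to-one matching, and the IoU threshold of 0.5 are assumptions chosen for illustration; the text does not state which matching rule or threshold was used.

```python
def mask_iou(pred, target):
    """IoU of two masks given as sets of (x, y) pixel coordinates."""
    union = len(pred | target)
    return len(pred & target) / union if union else 0.0

def count_matches(pred_masks, target_masks, iou_threshold=0.5):
    """Greedy one-to-one matching; returns (tp, fp, fn)."""
    unmatched = list(range(len(target_masks)))
    tp = 0
    for pred in pred_masks:
        # Pick the still-unmatched target with the highest overlap.
        best = max(unmatched,
                   key=lambda i: mask_iou(pred, target_masks[i]),
                   default=None)
        if best is not None and mask_iou(pred, target_masks[best]) >= iou_threshold:
            unmatched.remove(best)
            tp += 1
    return tp, len(pred_masks) - tp, len(unmatched)

preds = [{(0, 0), (0, 1), (1, 0)}, {(9, 9)}]
targets = [{(0, 0), (0, 1), (1, 0), (1, 1)}]
counts = count_matches(preds, targets)
```

The resulting true-positive, false-positive, and false-negative counts are the inputs to precision, recall, and F1.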

Since our framework has three tasks and our dataset is quite different from other object detection datasets, tracking datasets, and near-accident datasets such as the dashcam accident dataset [67], it is not easy to compare the individual quantitative performance for all three tasks with other methods. One of our motivations was to propose a vision-based solution for ITS; therefore, we focus more on near-accident detection and present a quantitative analysis of our two-stream convolutional networks. In Table 2.2, we present quantitative evaluations of the following subtasks: object detection; multiple object tracking (with and without cosine metric learning); SLIC segmentation (mask vs. bounding box); and near-accident detection (spatial stream only, temporal stream only, and two-stream model). We present evaluations regarding object detection and multiple object tracking for the fisheye video. The simulation videos are used for training and testing with more near-accident samples, and we have 57 simulation videos totaling over 51,123 video frames. We sparsely sample only 1,087 frames from them for training. We present the analysis of near-accident detection for 30 testing videos (18 positive and 12 negative for near-accidents).

Near-accident Detection Precision, Recall, and F1 Score. To evaluate our prediction of near-accidents, we compare our predicted detections with each ground truth for a given input.
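For reference, the three scores follow from the counts of true positives, false positives, and false negatives as in this short sketch. The function name and the convention of returning 0.0 when a denominator is zero are assumptions for illustration.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from raw counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

def f1_from(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

scores = precision_recall_f1(tp=8, fp=2, fn=2)
# Simulation object detection row of Table 2.2: precision 0.92670, recall 0.95255.
f1_detection = f1_from(0.92670, 0.95255)
```

Because F1 is the harmonic mean, it is pulled toward the lower of precision and recall, which is why it is reported alongside both.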

We have proposed a two-stream convolutional network architecture that performs real-time detection, tracking, and near-accident detection for vehicles in traffic video data. The two-stream convolutional network comprises a spatial and temporal stream network. The spatial stream network detects individual vehicles and likely near-accident regions at the single-frame level by capturing appearance features with a state-of-the-art object detection method. The temporal stream network leverages motion features of detected candidates to perform multiple object tracking and generates individual trajectories of each tracked target. We detect near-accidents by incorporating appearance and motion features to compute probabilities of near-accident candidate regions. Experiments have demonstrated the advantage of our framework with an overall competitive qualitative and quantitative performance at high frame rates. Future work will include image stitching methods deployed on multi-camera fisheye videos.

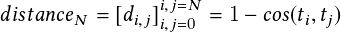

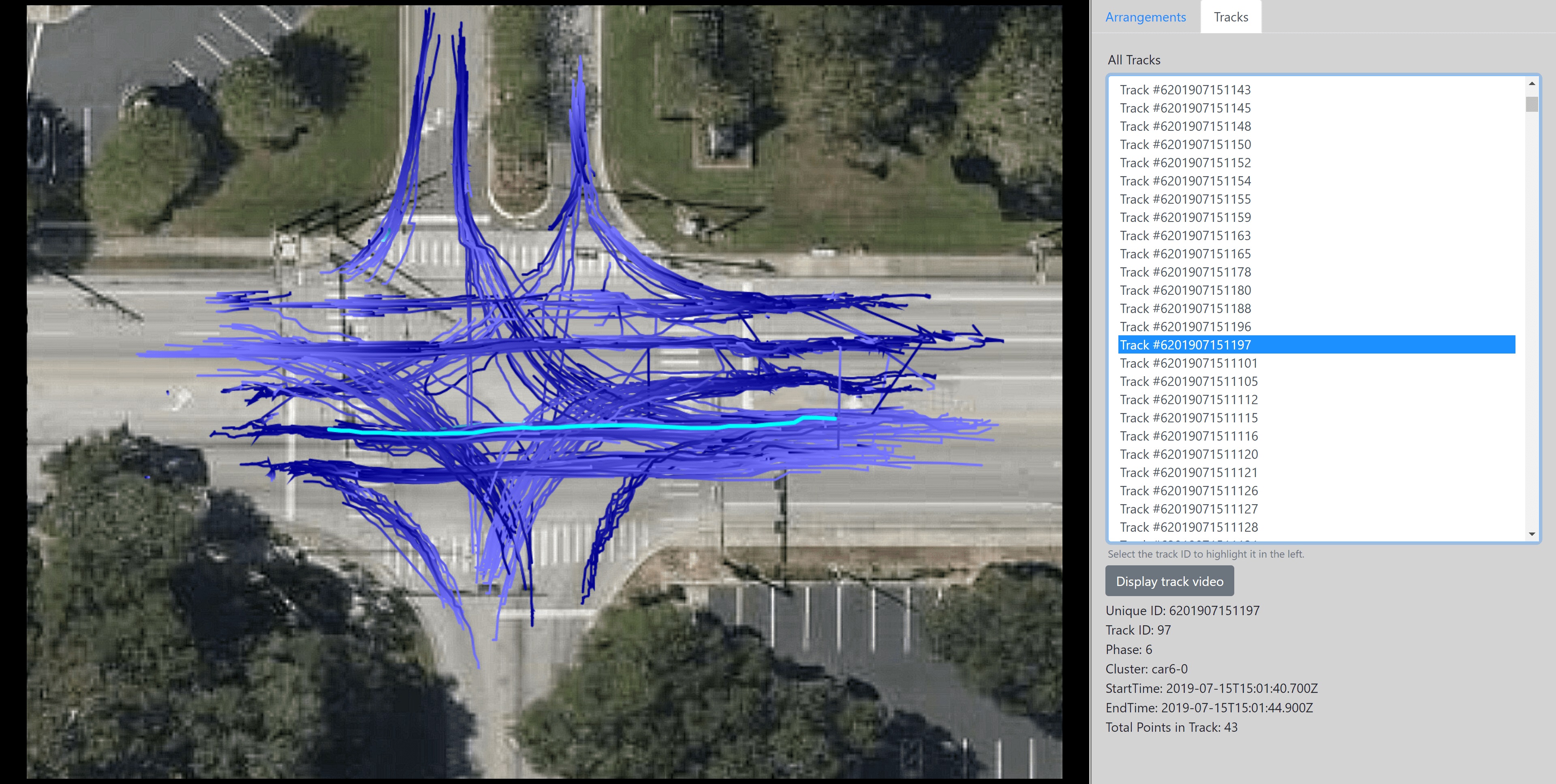

We develop a novel workflow for analyzing vehicular and pedestrian traffic at an intersection. The workflow begins with ingesting video data and controller logs, followed by data storage and processing to identify dominant and anomalous behavior at the intersection. This supports various applications such as near-miss detection [68], which is explained in detail in this chapter. The critical contributions of the work in this chapter are as follows:

Extensive results are provided on video and signal data collected at an intersection. These results demonstrate that video and signal timing information is useful in quantifying

The rest of the chapter is organized as follows. Section 3.2 describes the trajectory generation in brief, and Section 3.3 describes the recording of the current signal state of an intersection. Section 3.4 presents a detailed comparison of the candidate distance measures. Finally, Sections 3.7-3.9 present an exhaustive treatment of near-miss detection.

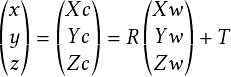

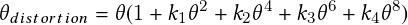

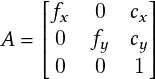

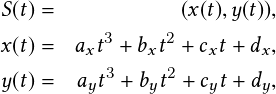

Video processing software processes object locations frame by frame from a video and outputs the location coordinates along with the corresponding timestamp. The video is captured by a camera installed at an intersection. To accurately locate the coordinates of an object, the video processing software must account for the different types of distortions that creep into the system. For example, a fisheye lens would have significant radial distortion. After taking into account the intrinsic and extrinsic properties of the camera, a mapping is created, which is used by the video processing software to map observed coordinates to modified coordinates that are nearly free of any distortion. To represent the location of a 3D object using a dimensionless point, one looks to find the object’s center of mass. A bounding box is drawn, enclosing the object. The center of the box is approximated to be the object’s center of mass. After generating timestamped trajectory coordinates, the software computes other properties, such as speed and direction of movement.
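The derived properties mentioned above (center point, speed, and direction) can be computed from timestamped boxes as in this sketch. The function names, the box format `(x1, y1, x2, y2)`, and the heading convention (degrees from the positive x-axis, via `atan2`) are assumptions for illustration.

```python
import math

def box_center(box):
    """Approximate the object's center of mass by the bounding box center."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)

def speed_and_heading(p0, t0, p1, t1):
    """Speed (coordinate units per second) and heading (degrees) between two samples."""
    dx, dy = p1[0] - p0[0], p1[1] - p0[1]
    dt = t1 - t0
    if dt <= 0:
        raise ValueError("timestamps must be strictly increasing")
    return math.hypot(dx, dy) / dt, math.degrees(math.atan2(dy, dx))

center = box_center((0, 0, 4, 2))
speed, heading = speed_and_heading((0.0, 0.0), 0.0, (3.0, 4.0), 0.5)
```

In practice the coordinates would first pass through the distortion-correcting mapping so that speeds are measured in undistorted units.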

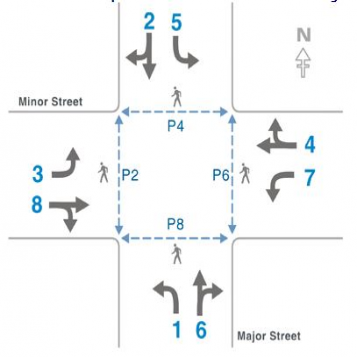

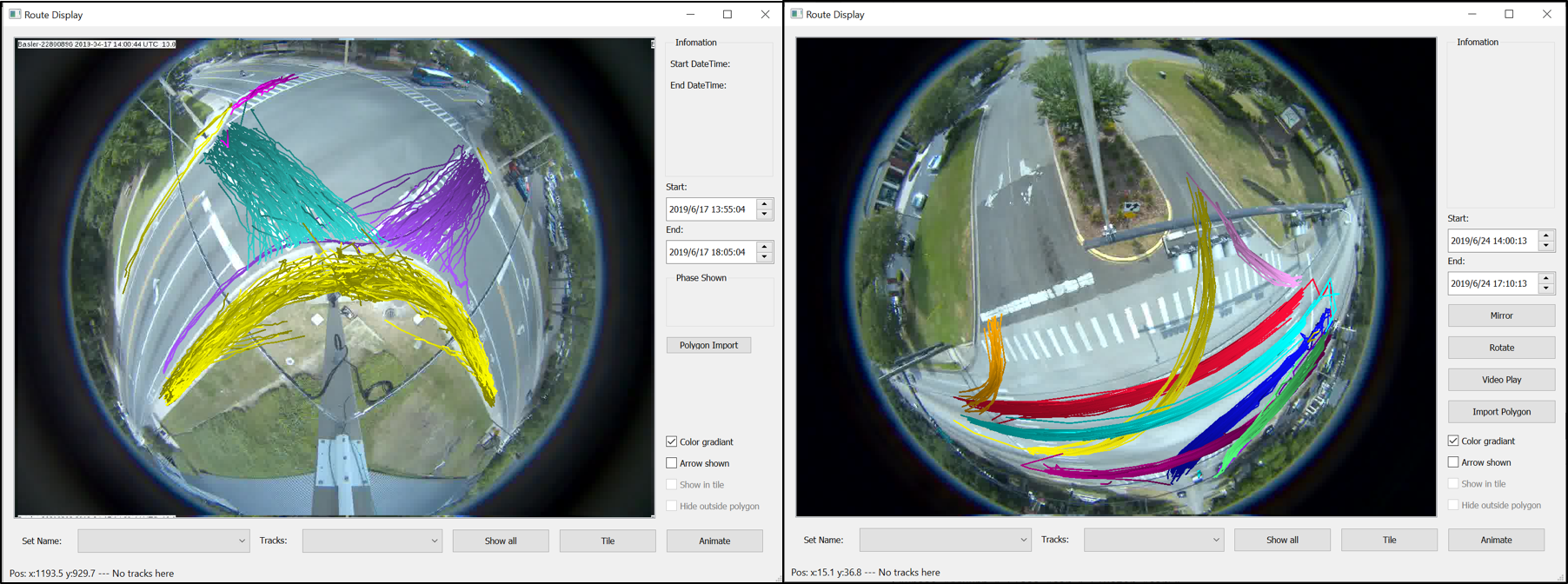

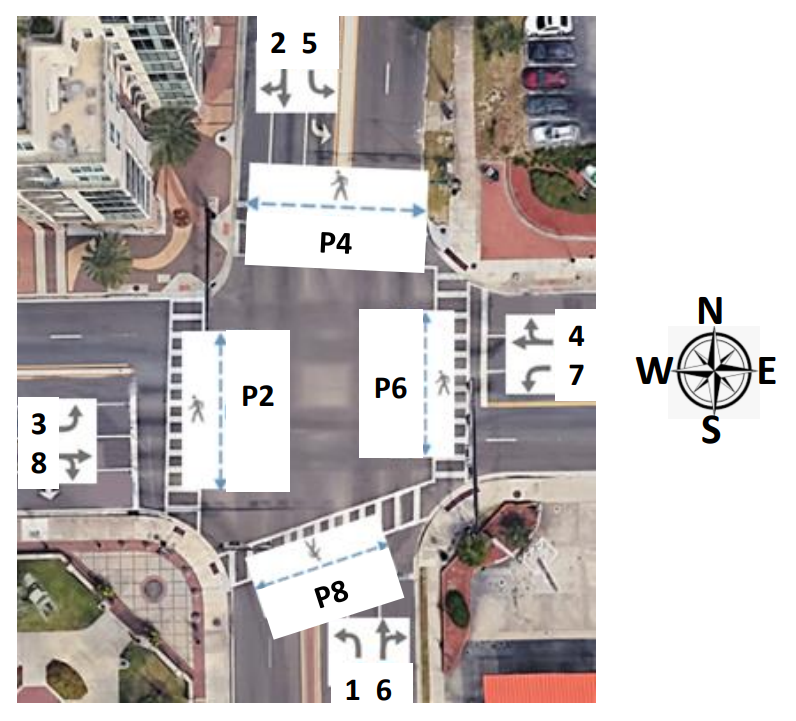

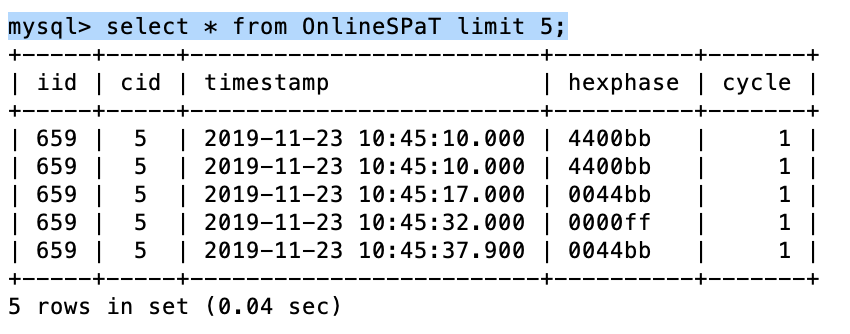

An intersection almost always has traffic lights to control traffic flow safely. The signal changes from green to yellow to red are events captured in controller logs called signal data. To specify a particular signal and, in a more general sense, the direction of movement, the traffic engineers define a standard that assigns phases 2 and 6 to the two opposite directions of the major street and 4 and 8 to those of the minor street. Figure 3.1 shows these phase numbers and the phase numbers for the turning vehicles and pedestrians.

Our application stores the current signal phase in a compact 6-digit hexadecimal encoding. To explain the formatting, let us consider the corresponding 24-bit binary equivalent. Bits 1–8 are set to 1 if the corresponding phase is green and 0 otherwise. Similarly, bits 9–16 and 17–24 encode the yellow and red status of the eight vehicle phases, respectively. For example, green on phases 2 and 6 at an intersection gives 0100 0100 for the first eight bits. The next eight bits are 0000 0000 because no phase is yellow, and the last eight bits, for red, are 1011 1011, in which only the second and sixth bits are 0. Thus, the overall 24-bit binary representation of the current signaling state is 0100 0100 0000 0000 1011 1011, or 4400bb in hexadecimal.
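The encoding can be reproduced with a few bit operations, as in the sketch below. The function names and the use of Python sets of phase numbers are assumptions for illustration; the bit order (phase 1 as the leftmost bit of each byte) follows the worked example in the text.

```python
def encode_signal_state(green, yellow, red):
    """Encode sets of phase numbers (1-8) as a 6-digit hex string."""
    def to_byte(phases):
        # Phase 1 is the leftmost (most significant) bit of the byte.
        return sum(1 << (8 - p) for p in phases)
    value = (to_byte(green) << 16) | (to_byte(yellow) << 8) | to_byte(red)
    return f"{value:06x}"

def decode_signal_state(code):
    """Return (green, yellow, red) sets of phase numbers from a hex string."""
    value = int(code, 16)
    def phases(byte):
        return {p for p in range(1, 9) if byte & (1 << (8 - p))}
    return (phases((value >> 16) & 0xFF),
            phases((value >> 8) & 0xFF),
            phases(value & 0xFF))

code = encode_signal_state(green={2, 6}, yellow=set(), red={1, 3, 4, 5, 7, 8})
green, yellow, red = decode_signal_state(code)
```

Decoding the stored string recovers which phases were green, yellow, or red at the time a trajectory point was recorded.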

| Figure 3.1.: | Phase Diagram showing vehicular and pedestrian movement at four-way intersections. The solid gray arrows show vehicle movements while the blue dotted arrows show pedestrian movements [69]. |

The first step toward clustering a set of trajectories is applying a suitable distance measure that will, for any two trajectories, tell how close the trajectories are to each other in space and time. Two potential candidates for distance measures of the intersection trajectories are Euclidean distance (ED) and dynamic time warping (DTW). Among these, ED is the square root of the sum of the squared lengths of the vertical or horizontal hatched lines. The disadvantage of ED is that it cannot reliably compute the distance between trajectories of different lengths.

DTW can compute distances between trajectories when they vary in time, speed, or path length. DTW uses dynamic programming to find an optimal distance and has time complexity $O(N^2)$, where $N$ is the number of coordinates in the two trajectories; approximate approaches such as FastDTW realize a near-optimal solution with space and time complexity of $O(N)$. FastDTW is based on a multilevel iterative approach. FastDTW returns a distance and a list of pairs of points, also known as the warp path. A pair of points consists of coordinates on the first and second trajectories and represents the best match between the points after the trajectories are warped. The distance returned by FastDTW is the sum of the distances between each pair of points on the path. Quite naturally, the distance is negligible if the trajectories occur in the same geographical coordinates and are traversed at similar speeds.
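For illustration, the sketch below implements the classic quadratic-time DTW with warp-path recovery; it is not FastDTW, which approximates the same result in linear time. The function name, the point format, and the use of Euclidean point distance are assumptions.

```python
import math

def dtw(traj_a, traj_b):
    """Classic dynamic-programming DTW; returns (distance, warp_path).

    The warp path is a list of (index_in_a, index_in_b) pairs, and the
    distance is the sum of point distances along that path.
    """
    n, m = len(traj_a), len(traj_b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(traj_a[i - 1], traj_b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    # Backtrack from the end to recover the warp path.
    path, i, j = [], n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min((cost[i - 1][j - 1], i - 1, j - 1),
                      (cost[i - 1][j], i - 1, j),
                      (cost[i][j - 1], i, j - 1))
    path.reverse()
    return cost[n][m], path

full = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
sparse = [(0.0, 0.0), (2.0, 0.0)]
distance, warp_path = dtw(full, sparse)
```

Every point of each trajectory appears in at least one pair of the warp path, which is why a trajectory with a missing start forces several points of the complete trajectory to pair with the same distant point.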

| Figure 3.3.: | An example where two similar trajectories have a high distance value when DTW/FastDTW is directly used to compute distance. This is potentially because the vehicles were tracked over different extents, for example as a result of occlusion. |

DTW and FastDTW work well for trajectories entirely captured by the sensor system. In reality, the sensor system and the processing software may not capture the whole trajectory. In that case, the distance returned by the dynamic time-warping algorithm does not represent the actual distance. Figure 3.3 highlights two example trajectories for which DTW returns a high distance value, suggesting that the tracks are dissimilar. For example, for two tracks going straight, if the starting portion of one of the tracks is truncated due to a processing error, as shown in Figure 3.4, the computed distance is high and often falls in the range of distances between two unrelated trajectories. Hence, we developed a new distance measure that utilizes the warp path returned by FastDTW.

We describe our trajectory clustering method in this section. There are two main components to clustering; the first is to use an efficient distance measure for the trajectories to be clustered, and the second is to use a clustering algorithm that uses the distance measure to create clusters of trajectories that behave similarly. We describe the novel distance measure developed as part of this work in Section 3.5.1 and present our clustering algorithm in Section 3.5.2.

The new distance measure developed in this section applies to trajectories captured using real-time video processing.

| Figure 3.4.: | Two trajectories represented by ABCDE and FGH. The dashed lines show the point correspondence (warp path) obtained using FastDTW. The trajectory FGH is shorter because the beginning part of the trajectory was not captured. |

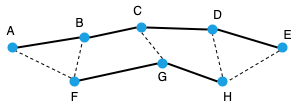

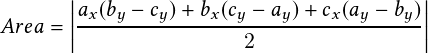

The first step in the computation of the distance measure is to obtain the warp path using a time-warping algorithm, e.g., FastDTW. Triangles are constructed using the warp path as shown in Figure 3.4, where the triangles are △ABF, △BCF, △CDF, △DFG, △DEG, and △EGH. Since the coordinates of the vertices (A, B, C, D, E, F, G, and H) are known, the area of each triangle may be computed using the following formula from coordinate geometry.

| (3.1) |

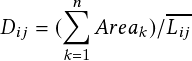

where the vertices of the triangle have coordinates (ax, ay), (bx, by), and (cx, cy). The sum of the areas of all triangles is computed, and finally, the distance, Dij, between two trajectories Ti and Tj is computed as:

| (3.2) | $D_{ij} = \dfrac{\sum_{k=1}^{n} \mathrm{Area}_k}{L_{ij}}$ |

where Areak is the area of the kth triangle and Lij is the average length of the two trajectories, Ti and Tj, and n is the total number of triangles. If there is no warping, and the number of matched pairs is m, then n = 2m, by construction. However, if there is warping, then n < 2m. Dij is the average perpendicular distance between the trajectories, which intuitively is the average height of the triangles.
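The area-based distance can be sketched in Python as follows. This is an illustration under stated assumptions rather than the authors' implementation: the triangle area uses the standard shoelace formula from coordinate geometry, and Dij is taken to be the summed triangle area divided by Lij, which is consistent with its description as the average height of the triangles. The function names and the way a non-warped step is split into two triangles are choices made for this sketch.

```python
import math


def triangle_area(a, b, c):
    """Area of a triangle from its vertex coordinates (shoelace formula)."""
    return abs(a[0] * (b[1] - c[1]) + b[0] * (c[1] - a[1]) + c[0] * (a[1] - b[1])) / 2.0


def path_length(traj):
    """Total length of a polyline given as a list of (x, y) points."""
    return sum(math.dist(p, q) for p, q in zip(traj, traj[1:]))


def warp_triangles(t1, t2, warp_path):
    """Build triangles between consecutive warp-path pairs.

    A warped step (only one index advances) yields one triangle;
    a non-warped step (both indices advance) yields two.
    """
    triangles = []
    for (i0, j0), (i1, j1) in zip(warp_path, warp_path[1:]):
        if i1 != i0:
            triangles.append((t1[i0], t1[i1], t2[j0]))
        if j1 != j0:
            triangles.append((t1[i1], t2[j0], t2[j1]))
    return triangles


def area_distance(t1, t2, warp_path):
    """Assumed form of Equation 3.2: summed triangle area over mean length."""
    total_area = sum(triangle_area(*tri) for tri in warp_triangles(t1, t2, warp_path))
    mean_length = (path_length(t1) + path_length(t2)) / 2.0
    return total_area / mean_length


# Two parallel straight tracks one unit apart, matched without warping.
t1 = [(0, 0), (1, 0), (2, 0)]
t2 = [(0, 1), (1, 1), (2, 1)]
print(area_distance(t1, t2, [(0, 0), (1, 1), (2, 2)]))  # 1.0
```

For the parallel tracks in the usage example, the result equals their perpendicular separation, which matches the interpretation of Dij as an average perpendicular distance.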

To get a more accurate local distance measure, we segment the trajectories and compute the distances of the starting and the finishing segments. Let SDij and FDij be the distances of the starting and the finishing segments, respectively. Then, SDij is the distance between the first two pairs of matching points that are not warped (CF and DG in Figure 3.4), and correspondingly, FDij is the distance between the last two pairs of matching points that are not warped (DG and EH in Figure 3.4). SDij may be computed as the average height of triangles △CFG and △CDG, and FDij as that of triangles △DGH and △DEH. Thus, we use the triplet (Dij, SDij, FDij) to represent the distance between two trajectories. If required, these three components of the distance measure can be suitably weighted to compute a scalar distance.

The similarity matrix, S, is computed from the distance measures by setting empirical thresholds on the magnitude of the distance between two trajectories. For example, given two trajectories Ti and Tj, if their average distance, Dij, from Equation 3.2 is less than a threshold δx, the start section distance, SDij, is less than a threshold δy, and the last section distance, FDij, is less than a threshold δz, then Ti and Tj are considered similar. In that case, the corresponding entry in the similarity matrix is Sij = Sji = 1. If any distance value exceeds the corresponding threshold δx, δy, or δz, the trajectories are considered dissimilar, and in that case, Sij = Sji = 0. In this manner, the similarity matrix is computed and is ready to be used in spectral clustering.
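The thresholding rule can be sketched as below. The function name, the dictionary layout of the distance triplets, the threshold parameter names, and the threshold values in the usage example are all hypothetical. The sketch also assumes that each trajectory is similar to itself, so the diagonal is set to 1.

```python
def similarity_matrix(n, triplets, thr_avg, thr_start, thr_finish):
    """Build a symmetric 0/1 similarity matrix for n trajectories.

    triplets maps an index pair (i, j) to (D_ij, SD_ij, FD_ij).
    Two trajectories are similar only if all three distances fall
    below their respective thresholds.
    """
    s = [[0] * n for _ in range(n)]
    for i in range(n):
        s[i][i] = 1  # assumption: a trajectory is similar to itself
    for (i, j), (d, sd, fd) in triplets.items():
        similar = d < thr_avg and sd < thr_start and fd < thr_finish
        s[i][j] = s[j][i] = 1 if similar else 0
    return s


# Hypothetical distance triplets for three trajectories.
triplets = {
    (0, 1): (0.4, 0.3, 0.5),   # close everywhere
    (0, 2): (0.4, 2.5, 0.5),   # start segments far apart
    (1, 2): (3.0, 2.8, 3.1),   # far apart everywhere
}
print(similarity_matrix(3, triplets, thr_avg=1.0, thr_start=1.0, thr_finish=1.0))
```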

Clustering a large set of N trajectories is an O(N²) operation because pairwise distances need to be computed to prepare a distance matrix. A two-level hierarchical clustering scheme is proposed here to address the quadratic complexity. Clustering at the first level partitions the trajectories into homogeneous clusters based on their direction of movement. Spectral clustering is then applied to the trajectories of each phase separately to form clusters and to detect anomalies. The clustering scheme is explained in detail in this section.

Any object at an intersection must obey the traffic rules; hence, its trajectory is constrained in time and space. One of the goals of the software is to detect traffic violations and their underlying causes, ultimately to make the intersection safer. These violations may appear as spatial outliers or as timing violations. Figure 3.1 shows the phases for pedestrian and vehicular movements. A given set of trajectories is partitioned into eight bins aligned with the eight phases and into four additional bins corresponding to the right turns for phases 2, 4, 6, and 8.

Because the trajectories are essentially a series of coordinates, it is possible to use basic vector algebra and trigonometry to get their general direction. For example, suppose the spatial coordinates of a track Ti are given as (x1, y1), (x2, y2), …, (xn, yn). Let A = (x1, y1) and B = (xn, yn) be two vectors connecting the origin to the start and end points, respectively, of Ti, with their direction away from the origin. Let AB be a vector connecting the start and end points, with its head at the endpoint, (xn, yn). Then, using the rules of vector addition, A + AB = B, which implies AB = B − A. Thus, AB = (xn − x1, yn − y1).

Once constructed, a trajectory vector may be compared with a reference vector to obtain the direction of the trajectory. The start and end points of these reference vectors may be obtained using CAD tools supported by visualization software, and the coordinates of the start and endpoints are specified in a configuration file.

| Figure 3.5.: | Snippet of a configuration file that shows the user inputs needed for an intersection. The coordinates may be obtained using a visualization tool [70]. |

The cosine of the acute angle between a trajectory vector u and a reference vector v is given by

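| (3.3) | $\cos\theta = \dfrac{\mathbf{u} \cdot \mathbf{v}}{\lVert \mathbf{u} \rVert \, \lVert \mathbf{v} \rVert}$ |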

When the directions nearly match, the value of the cosine is close to 1.0.

A snippet of a configuration file used to specify the reference vectors is shown in Figure 3.5. The user can specify the direction of the phase 2 movement using the phase2 parameter of this file, which has a value WB (westbound) in this example. The other phases, as described in Figure 3.1, may be derived with reference to the phase 2 direction. The parameters SN, NS, WE, and EW specify the start and endpoints of reference vectors for through lanes along south-north, north-south, west-east, and east-west directions, respectively. Figure 3.6 shows the corresponding reference vectors. The start and endpoint coordinates of the stop bars are also needed to differentiate left- and right-turn movements that otherwise align with each other. An example of this is given later in this section.

Given a trajectory, the cosine value in Equation 3.3 may be computed for the trajectory vector and each of the reference vectors. If the value calculated is close to 1 for any of these vectors, the trajectory may be assigned the corresponding through phase (one of 2, 4, 6, or 8). For any intersection, the coordinates of the reference vectors have to be determined only once, and the configuration file may be reused until the geometry of the intersection changes.
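The direction test can be sketched as follows. The cosine is the usual normalized dot product of two vectors. The function names, the reference vector coordinates, and the cutoff of 0.9 for "close to 1" are assumptions made for illustration and are not taken from the configuration file in Figure 3.5.

```python
import math


def trajectory_vector(track):
    """Vector from the first to the last point of a track: (xn - x1, yn - y1)."""
    (x1, y1), (xn, yn) = track[0], track[-1]
    return (xn - x1, yn - y1)


def cosine(u, v):
    """Cosine of the angle between 2-D vectors u and v (0.0 if either is zero)."""
    norm = math.hypot(*u) * math.hypot(*v)
    return (u[0] * v[0] + u[1] * v[1]) / norm if norm else 0.0


def assign_direction(track, reference_vectors, min_cosine=0.9):
    """Return the name of the best-aligned reference vector, or None.

    min_cosine is a hypothetical cutoff for "close to 1".
    """
    u = trajectory_vector(track)
    best = max(reference_vectors, key=lambda name: cosine(u, reference_vectors[name]))
    return best if cosine(u, reference_vectors[best]) >= min_cosine else None


# Hypothetical reference vectors for the four through directions.
refs = {"WE": (10, 0), "EW": (-10, 0), "SN": (0, -10), "NS": (0, 10)}
print(assign_direction([(0, 5), (3, 5.2), (9, 5.5)], refs))  # WE
print(assign_direction([(0, 0), (5, 5)], refs))              # None
```

A track that matches none of the through directions, such as the diagonal one in the usage example, is left unassigned here so that it can be handled as a turn movement using the stop bar coordinates.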

The next step in the hierarchical clustering scheme is to cluster the trajectories with the same movement direction. The spectral clustering algorithm is applied in this step. Prior experimentation with a simple K-means clustering approach for this problem highlights the benefits of using spectral clustering instead. A pure K-means algorithm requires user input for the number of possible clusters, which is impossible for the user to know in advance. Spectral clustering, through its smart use of standard linear algebra methods, gives the user objective feedback about possible clusters and further accentuates the trajectories’ features to make cluster separation easier.

The inputs to a spectral clustering algorithm are a set of trajectories, say tr1, tr2, …, trn, and a similarity matrix S, where any element sij of the matrix S denotes the similarity between trajectories tri and trj. Note that we consider sij = sji ≥ 0, where sij = 0 if tri and trj are not similar. Given these two inputs, spectral clustering creates clusters of trajectories such that all trajectories in the same cluster are similar, whereas two trajectories belonging to different clusters are dissimilar. For example, for the given set of trajectories, spectral clustering creates separate clusters for trajectories following different lanes or those that change lanes at the intersection. As a result, spectral clustering also helps identify the outliers and anomalous trajectories that are not similar to any other trajectories in the set. The two inputs to a spectral clustering algorithm may be represented as a graph G = (V, E), where vertex vi in this graph represents a trajectory tri.

Two vertices are connected if the similarity sij between the corresponding trajectories tri and trj is greater than a certain threshold ε, and the edge is weighted by sij. Thus, the clustering problem may be recast as finding connected components in the graph such that the sum of the weights of edges between different components is negligible. Hence, by obtaining the number of connected components, we know the number of clusters and then get the clusters by applying a K-means algorithm.

We used the linalg library provided by NumPy to perform the linear algebra operations in spectral clustering. Once the number k of connected components is known, a K-means algorithm is run on the first k eigenvectors to generate the clustering results. The function KMeans is a K-means clustering algorithm from the Python sklearn.cluster library.
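A minimal sketch of these steps is given below. It is not the authors' code: it assumes the unnormalized graph Laplacian L = D − W built from the similarity matrix, estimates the number k of connected components as the number of Laplacian eigenvalues that are numerically zero, and runs `KMeans` on the first k eigenvectors. The function names and the tolerance `tol` are illustrative.

```python
import numpy as np


def laplacian(similarity):
    """Unnormalized graph Laplacian L = D - W (self-loops ignored)."""
    w = np.asarray(similarity, dtype=float).copy()
    np.fill_diagonal(w, 0.0)
    return np.diag(w.sum(axis=1)) - w


def count_components(similarity, tol=1e-8):
    """Number of connected components = multiplicity of eigenvalue 0 of L."""
    eigvals = np.linalg.eigvalsh(laplacian(similarity))
    return int(np.sum(eigvals < tol))


def spectral_clusters(similarity, tol=1e-8, random_state=0):
    """Cluster trajectories with K-means on the first k eigenvectors of L."""
    from sklearn.cluster import KMeans  # imported here so the helpers above need only NumPy

    eigvals, eigvecs = np.linalg.eigh(laplacian(similarity))  # ascending eigenvalues
    k = int(np.sum(eigvals < tol))
    embedding = eigvecs[:, :k]
    labels = KMeans(n_clusters=k, n_init=10, random_state=random_state).fit_predict(embedding)
    return k, labels


# Two groups of mutually similar trajectories and one isolated trajectory.
S = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 0, 0, 1],
]
print(count_components(S))  # 3
```

A cluster that contains a single trajectory, such as the last one in the usage example, is a natural candidate for an outlier.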

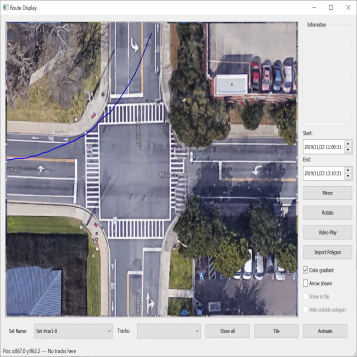

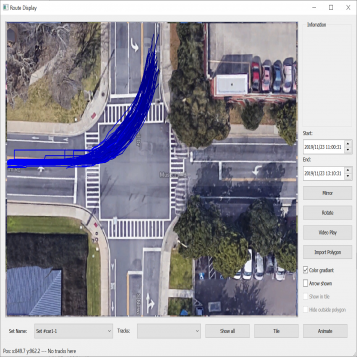

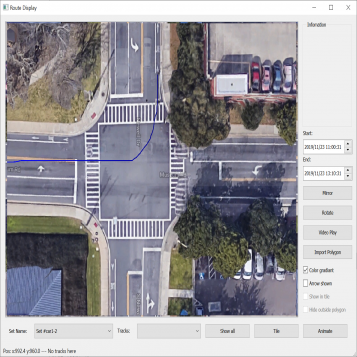

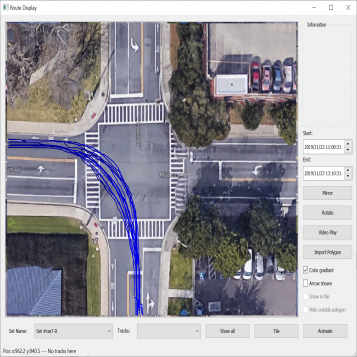

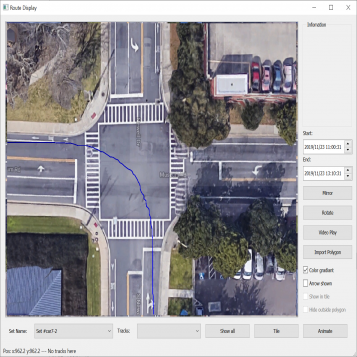

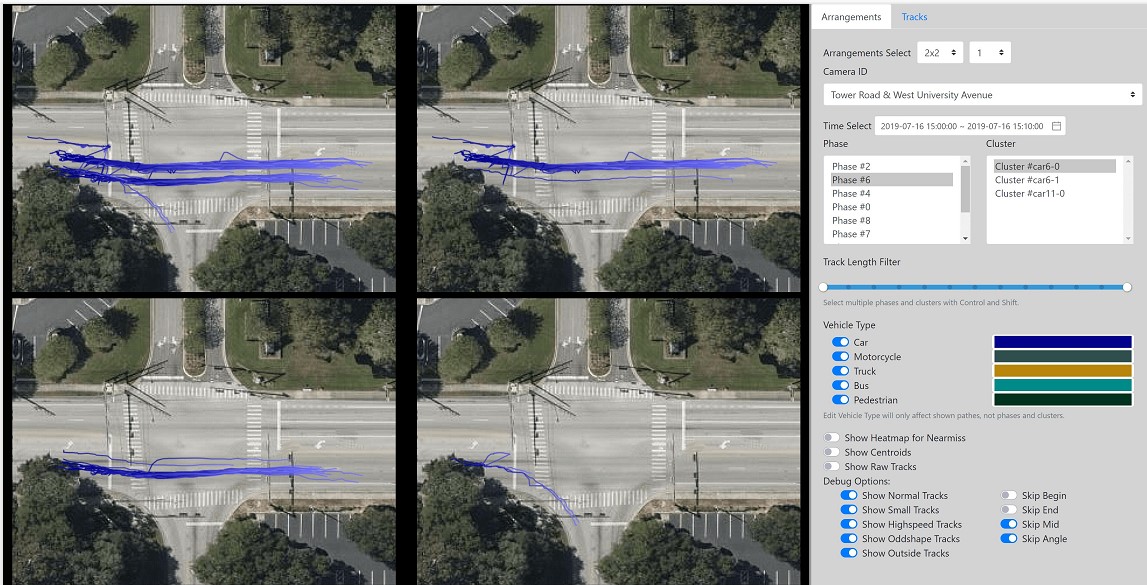

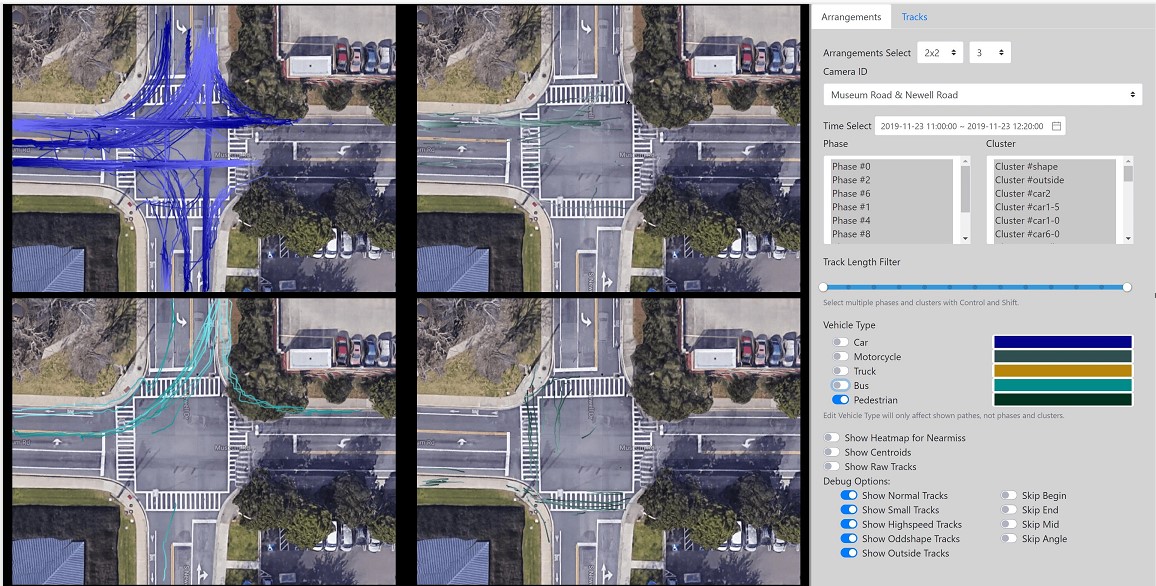

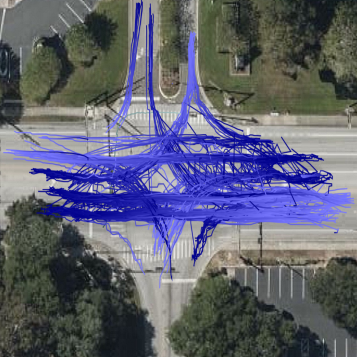

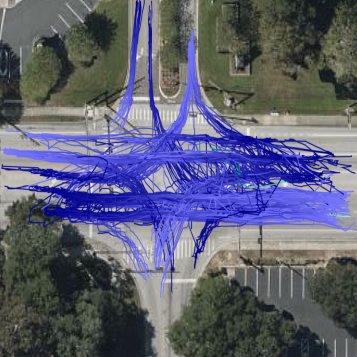

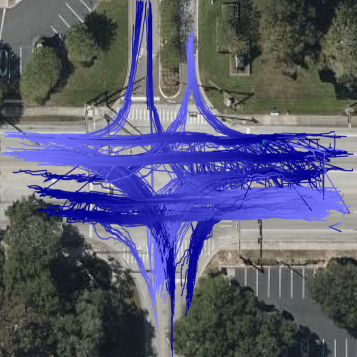

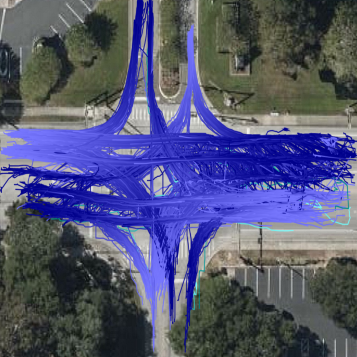

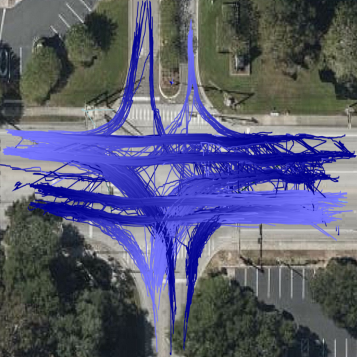

The clusters generated by spectral clustering for all the left-turn trajectories are shown in Figure 3.7. The clusters for the straight and right-turn trajectories are omitted here due to space constraints.

| Figure 3.7.: | Second level of the two-level hierarchical clustering scheme where spectral clustering is applied to trajectories with the same direction of motion. The clusters of some left-turn trajectories are presented in this figure. |

After the trajectories are clustered, we identify a trajectory in each cluster that is representative of that cluster. The representative for each cluster is computed as the trajectory t belonging to the cluster with the least average distance from all the other trajectories.
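Selecting the representative amounts to finding the member with the smallest mean distance to the rest of its cluster. A minimal sketch, assuming a precomputed symmetric matrix of scalar pairwise distances and a hypothetical function name `representative`:

```python
def representative(cluster, dist):
    """Return the index of the cluster member with the least average
    distance to all other members.

    cluster: list of trajectory indices in one cluster.
    dist: full pairwise distance matrix, indexed by trajectory index.
    """
    if len(cluster) == 1:
        return cluster[0]

    def mean_distance(i):
        return sum(dist[i][j] for j in cluster if j != i) / (len(cluster) - 1)

    return min(cluster, key=mean_distance)


# Hypothetical distances between three trajectories in one cluster.
dist = [
    [0.0, 1.0, 3.0],
    [1.0, 0.0, 2.0],
    [3.0, 2.0, 0.0],
]
print(representative([0, 1, 2], dist))  # 1
```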

Detecting anomalous traffic behavior is one of the top goals for clustering trajectories. An anomalous trajectory may violate the spatial or temporal constraints at an intersection. The spatial constraints amount to the restrictions a vehicle must follow at an intersection, such as never going the wrong way. Temporal constraints, on the other hand, are the restrictions imposed by the signaling system at an intersection. We consider these two types of anomalous behavior in the rest of this section.

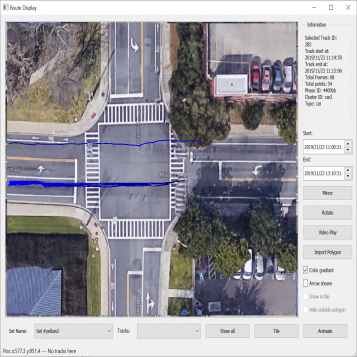

The fusion of video and signal data allows us to check the validity of the trajectories with reference to the current signaling phase of the intersection. The video and signal clocks are sometimes off by a few seconds. After an offset is added to the trajectory timestamps, the two clocks may be treated as synchronous. This offset may be computed manually by comparing the time at which a signal in the video transitions to green with the time in the signal data at which there is a “Phase Begin Green” event for the corresponding phase. It is also possible to compute the offset automatically in software by comparing the timestamp of the first trajectory that crosses, say, the phase 2 stop bar (Figure 3.6) with the timestamp in the signal data at which the phase 2 signal becomes green, after adding 2.5 seconds of driver reaction time to the signal transition timestamp. Figure 3.8 shows the trajectories during a yellow light.
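The automatic offset computation reduces to simple arithmetic, sketched below. The function names and the sign convention (the offset is added to video timestamps to bring them onto the signal clock) are assumptions made for this sketch; the 2.5-second reaction time is the value stated above.

```python
DRIVER_REACTION_S = 2.5  # driver reaction time stated in the text, in seconds


def clock_offset(first_crossing_video_ts, green_begin_signal_ts,
                 reaction_time=DRIVER_REACTION_S):
    """Offset to add to video timestamps so they align with the signal clock.

    first_crossing_video_ts: video-clock time at which the first trajectory
        crosses the stop bar after the phase turns green.
    green_begin_signal_ts: signal-clock time of the phase's begin-green event.
    """
    return (green_begin_signal_ts + reaction_time) - first_crossing_video_ts


def to_signal_time(video_ts, offset):
    """Convert a video-clock timestamp to the signal clock."""
    return video_ts + offset


offset = clock_offset(first_crossing_video_ts=100.0, green_begin_signal_ts=99.0)
print(offset)                         # 1.5
print(to_signal_time(120.0, offset))  # 121.5
```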

| Figure 3.8.: | Collection of tracks representing vehicles that enter the intersection on a yellow light. |

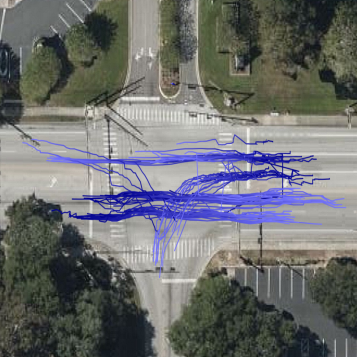

| Figure 3.9.: | Collection of tracks representing vehicles with anomalous behavior because of their shape. |

Figure 3.9 shows the anomalous trajectories. In all the cases here, the trajectories are turn movements. Sometimes these trajectories take a very wide turn. At other times, the trajectories turn left from a through lane, and at still other times, the trajectories start taking a turn much before the actual stop bar, causing wrong-way access to the adjacent lane.

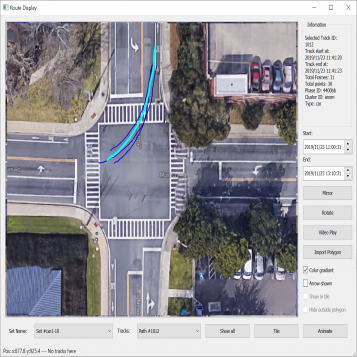

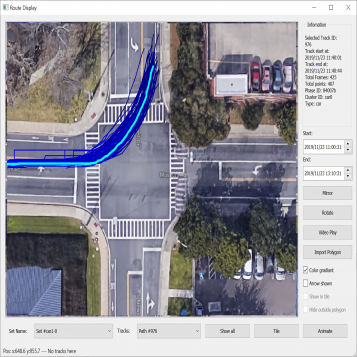

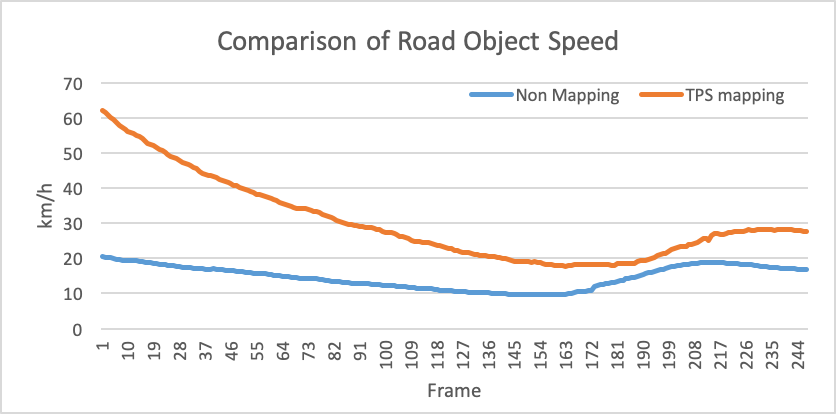

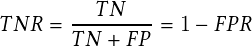

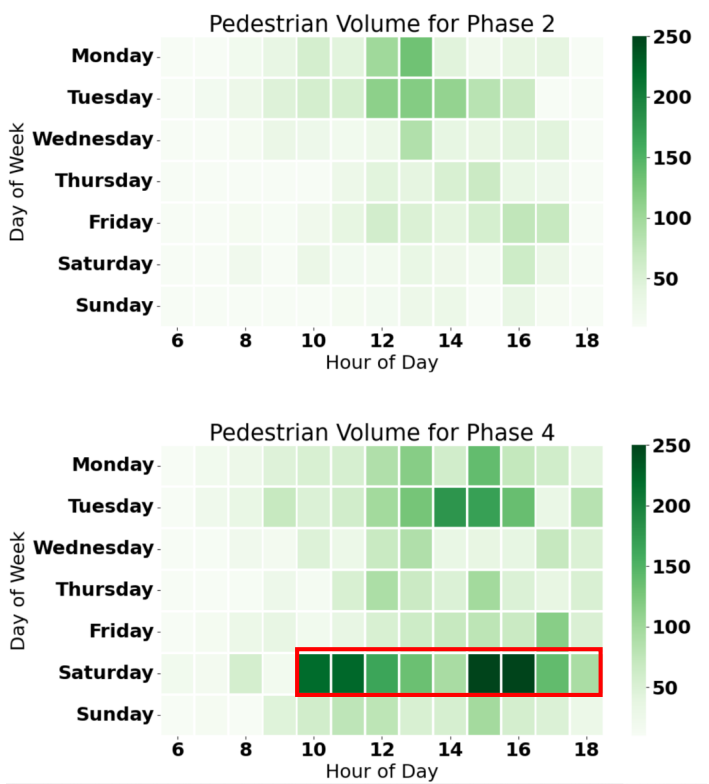

Our video processing software allows one to collect sufficient samples and visual cues corresponding to near-misses, with the aim of detecting and even anticipating dangerous scenarios in real time so that appropriate preventive steps can be undertaken. In particular, we focus on near-miss problems from large-scale intersection videos collected from fisheye cameras. The goal is to temporally and spatially localize and recognize near-miss cases from the fisheye video. The primary motivation for resolving distortion instead of using the original fisheye videos is to compute accurate distances among objects and their proper speeds using rectangular coordinates that better represent the real world. The projections are made on an overhead satellite map of the intersection. We specify five categories of objects of interest: pedestrians, motorbikes, cars, buses, and trucks. The overhead satellite maps of intersections are derived from Google Earth. The main steps of our detection framework (Figure 3.11) can be summarized as follows:

Figure 3.12 demonstrates the pipeline and the overall architecture of the proposed method.